
ioBroker unstable and delayed, Redis database very large

-

For a few weeks now, ioBroker has been running very unstably on my system.

It often takes several seconds between pressing a switch and the lamp reacting. So far I haven't really been able to find the root cause.

My suspicion is that it's the RAM.

A quick overview of my system:

Raspberry Pi 4 with 4 GB RAM (original power supply)

128 GB SSD, which it also boots from

Besides ioBroker, also InfluxDB and Grafana (to visualize the photovoltaic system)

Sensors and switching actuators: Zigbee and Shelly.

Visualization: iQontrol

The system is up to date with npm, Node.js etc.

States and files were moved to Redis. I've disabled almost every instance I can, just so the system runs reasonably at all.

As soon as I enable InfluxDB, Modbus, Ring etc., nothing works at all after a very short time. What I noticed:

The Redis database is veeeery large (2.72 GB) (it has grown steadily over the roughly 10 weeks I've been using ioBroker)

When I display the bigkeys, video streams from my ring.0 adapter seem to have ended up in the database.

Is that normal? Can it be deleted? I'm honestly running out of ideas. Bi

    pi@raspberrypi:~ $ redis-cli --bigkeys

    # Scanning the entire keyspace to find biggest keys as well as
    # average sizes per key type. You can use -i 0.1 to sleep 0.1 sec
    # per 100 SCAN commands (not usually needed).

    [00.00%] Biggest string found so far 'cfg.f.ring.0$%$doorbell_27850292/livestream27850292_1654655026251.mp4$%$meta' with 186 bytes
    [00.00%] Biggest string found so far 'cfg.f.ring.0$%$doorbell_27850292/livestream27850292_1654294083877.mp4$%$data' with 576342 bytes
    [00.11%] Biggest string found so far 'cfg.f.ring.0$%$doorbell_27850292/livestream27850292_1653302350714.mp4$%$data' with 879420 bytes
    [00.34%] Biggest set found so far 'cfg.s.object.type.group' with 2 members
    [00.51%] Biggest string found so far 'cfg.f.ring.0$%$doorbell_27850292/livestream27850292_1656167220051.mp4$%$data' with 1958223 bytes
    [01.32%] Biggest string found so far 'cfg.f.ring.0$%$doorbell_27850292/livestream27850292_1656064186291.mp4$%$data' with 2427537 bytes
    [01.90%] Biggest string found so far 'cfg.f.ring.0$%$doorbell_27850292/livestream27850292_1656124584341.mp4$%$data' with 2781340 bytes
    [09.31%] Biggest set found so far 'cfg.s.object.common.custom' with 87 members
    [12.38%] Biggest string found so far 'cfg.f.ring.0$%$doorbell_27850292/livestream27850292_1656123326568.mp4$%$data' with 3021819 bytes
    [12.52%] Biggest set found so far 'cfg.s.object.type.state' with 3734 members
    [16.36%] Biggest string found so far 'cfg.f.javascript.admin$%$static/js/2.ea6ad656.chunk.js.map$%$data' with 11953908 bytes

    -------- summary -------

    Sampled 35809 keys in the keyspace!
    Total key length in bytes is 2365597 (avg len 66.06)

    Biggest string found 'cfg.f.javascript.admin$%$static/js/2.ea6ad656.chunk.js.map$%$data' has 11953908 bytes
    Biggest set found 'cfg.s.object.type.state' has 3734 members

    0 lists with 0 items (00.00% of keys, avg size 0.00)
    0 hashs with 0 fields (00.00% of keys, avg size 0.00)
    35795 strings with 2907072774 bytes (99.96% of keys, avg size 81214.49)
    0 streams with 0 entries (00.00% of keys, avg size 0.00)
    14 sets with 4429 members (00.04% of keys, avg size 316.36)
    0 zsets with 0 members (00.00% of keys, avg size 0.00)

    pi@raspberrypi:~ $ free -m
                   total        used        free      shared  buff/cache   available
    Mem:            3837        3471         160          10         206         244
    Swap:           2047        2047           0

    pi@raspberrypi:~ $ iobroker status all
    iobroker is running on this host.

    At least one iobroker host is running.

    Instance "robonect.0" is not running
    Instance "scenes.0" is running
    Instance "web.0" is running
    Instance "net-tools.0" is running
    Instance "admin.0" is running
    Instance "tr-064.0" is not running
    Instance "iqontrol.0" is running
    Instance "ping.0" is running
    Instance "text2command.0" is running
    Instance "backitup.0" is not running
    Instance "fritzdect.0" is not running
    Instance "device-reminder.0" is running
    Instance "discovery.0" is running
    Instance "iot.0" is not running
    Instance "shelly.0" is running
    Instance "zigbee.0" is running
    Instance "modbus.0" is running
    Instance "info.0" is running
    Instance "javascript.0" is running
    Instance "alexa2.0" is not running
    Instance "history.0" is not running
    Instance "cloud.0" is not running
    Instance "telegram.0" is running
    Instance "influxdb.0" is not running
    Instance "simple-api.0" is running

    Objekte: 4346, Zustände: 3734

Redis memory usage:

    # Memory
    used_memory:2919147160
    used_memory_human:2.72G
    used_memory_rss:1032699904
    used_memory_rss_human:984.86M
    used_memory_peak:2939070180
    used_memory_peak_human:2.74G
    used_memory_peak_perc:99.32%
    used_memory_overhead:3957162
    used_memory_startup:604088
    used_memory_dataset:2915189998
    used_memory_dataset_perc:99.89%
    allocator_allocated:2919113384
    allocator_active:1032595456
    allocator_resident:1032595456
    total_system_memory:4024160256
    total_system_memory_human:3.75G
    used_memory_lua:104448
    used_memory_lua_human:102.00K
    used_memory_scripts:5416
    used_memory_scripts_human:5.29K
    number_of_cached_scripts:8
    maxmemory:3221225472
    maxmemory_human:3.00G
    maxmemory_policy:noeviction
    allocator_frag_ratio:0.35
    allocator_frag_bytes:2408449368
    allocator_rss_ratio:1.00
    allocator_rss_bytes:0
    rss_overhead_ratio:1.00
    rss_overhead_bytes:104448
    mem_fragmentation_ratio:0.35
    mem_fragmentation_bytes:-1886413480
    mem_not_counted_for_evict:0
    mem_replication_backlog:0
    mem_clients_slaves:0
    mem_clients_normal:1795318
    mem_aof_buffer:0
    mem_allocator:libc
    active_defrag_running:0
    lazyfree_pending_objects:0

Here is also an excerpt from the log from when the system was acting up again:

    2022-07-09 10:12:10.540 - info: info.0 (933) cpu Temp res = {"main":40.894,"cores":[],"max":40.894,"socket":[],"chipset":null}
    2022-07-09 10:12:30.574 - info: ring.0 (1462) Recieved new Refresh Token. Will use the new one until the token in config gets changed
    2022-07-09 11:12:45.382 - info: ring.0 (1462) Recieved new Refresh Token. Will use the new one until the token in config gets changed
    2022-07-09 12:12:30.813 - info: ring.0 (1462) Recieved new Refresh Token. Will use the new one until the token in config gets changed
    2022-07-09 13:12:47.560 - info: ring.0 (1462) Recieved new Refresh Token. Will use the new one until the token in config gets changed
    2022-07-09 14:12:30.580 - info: ring.0 (1462) Recieved new Refresh Token. Will use the new one until the token in config gets changed
    2022-07-09 14:48:51.452 - warn: modbus.0 (2648) Poll error count: 1 code: "App Timeout"
    2022-07-09 15:03:07.084 - info: shelly.0 (874) [MQTT] Device 192.168.178.109 (shelly1 / shelly1-E8DB84D73D12 / SHSW-1#E8DB84D73D12#1) connected! Polltime set to 61 sec.
    2022-07-09 15:08:48.028 - info: shelly.0 (874) [MQTT] Device 192.168.178.109 (shelly1 / shelly1-E8DB84D73D12 / SHSW-1#E8DB84D73D12#1) connected! Polltime set to 61 sec.
    2022-07-09 15:12:47.260 - info: ring.0 (1462) Recieved new Refresh Token. Will use the new one until the token in config gets changed
    2022-07-09 15:51:47.716 - info: host.raspberrypi Updating repository "stable" under "http://download.iobroker.net/sources-dist.json"
    2022-07-09 16:07:58.425 - warn: modbus.0 (2648) Poll error count: 1 code: "App Timeout"
    2022-07-09 16:07:58.849 - info: The primary host is no longer active. Checking responsibilities.
    2022-07-09 16:08:59.058 - info: The primary host is no longer active. Checking responsibilities.
    2022-07-09 16:09:59.341 - info: The primary host is no longer active. Checking responsibilities.
    2022-07-09 16:12:30.685 - info: ring.0 (1462) Recieved new Refresh Token. Will use the new one until the token in config gets changed
    2022-07-09 16:19:52.549 - info: web.0 (1415) ==> Connected system.user.admin from ::ffff:192.168.178.93
    2022-07-09 16:21:54.768 - info: web.0 (1415) <== Disconnect system.user.admin from ::ffff:192.168.178.93 iqontrol.0
    2022-07-09 16:23:29.762 - info: web.0 (1415) ==> Connected system.user.admin from ::ffff:192.168.178.93
    2022-07-09 16:23:29.774 - info: web.0 (1415) ==> Connected system.user.admin from ::ffff:192.168.178.93
    2022-07-09 16:23:29.788 - info: web.0 (1415) ==> Connected system.user.admin from ::ffff:192.168.178.93
    2022-07-09 16:25:59.915 - info: web.0 (1415) <== Disconnect system.user.admin from ::ffff:192.168.178.93
    2022-07-09 16:25:59.919 - info: web.0 (1415) <== Disconnect system.user.admin from ::ffff:192.168.178.93
    2022-07-09 16:26:00.676 - info: web.0 (1415) <== Disconnect system.user.admin from ::ffff:192.168.178.93 iqontrol.0
    2022-07-09 16:26:03.116 - info: web.0 (1415) ==> Connected system.user.admin from ::ffff:192.168.178.93
    2022-07-09 16:27:20.239 - info: web.0 (1415) <== Disconnect system.user.admin from ::ffff:192.168.178.93 iqontrol.0
    2022-07-09 16:33:56.114 - warn: modbus.0 (2648) Poll error count: 1 code: "App Timeout"
    2022-07-09 16:35:23.553 - warn: modbus.0 (2648) Poll error count: 1 code: "App Timeout"
    2022-07-09 16:36:25.179 - warn: modbus.0 (2648) Poll error count: 1 code: "App Timeout"
    2022-07-09 16:36:25.183 - warn: modbus.0 (2648) Error: undefined
    2022-07-09 16:36:25.222 - error: modbus.0 (2648) Request timed out.
    2022-07-09 16:36:25.223 - error: modbus.0 (2648) Client in error state.
    2022-07-09 16:36:25.494 - warn: influxdb.0 (2633) Error on writePoint("{"value":0,"time":"2022-07-09T14:34:43.126Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:36:25.543 - warn: influxdb.0 (2633) Error on writePoint("{"value":153.35,"time":"2022-07-09T14:34:43.249Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:36:25.545 - warn: influxdb.0 (2633) Error on writePoint("{"value":105.96,"time":"2022-07-09T14:34:43.248Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:36:25.547 - warn: influxdb.0 (2633) Error on writePoint("{"value":512.65,"time":"2022-07-09T14:34:43.592Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:36:25.548 - warn: influxdb.0 (2633) Error on writePoint("{"value":1969244.4,"time":"2022-07-09T14:34:43.592Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:36:25.549 - warn: influxdb.0 (2633) Error on writePoint("{"value":240.18,"time":"2022-07-09T14:34:43.249Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:36:36.028 - info: shelly.0 (874) [MQTT] Device 192.168.178.127 (shellyrgbw2 / shellyrgbw2-4A9D38 / SHRGBW2#4A9D38#1) connected! Polltime set to 61 sec.
    2022-07-09 16:36:36.039 - info: modbus.0 (2648) Disconnected from slave 192.168.178.63
    2022-07-09 16:36:36.200 - info: influxdb.0 (2633) Connecting http://localhost:8086 ...
    2022-07-09 16:36:36.201 - info: influxdb.0 (2633) Influx DB Version used: 1.x
    2022-07-09 16:37:36.082 - info: modbus.0 (2648) Connected to slave 192.168.178.63
    2022-07-09 16:42:30.468 - info: influxdb.0 (2633) Store 6 buffered influxDB history points
    2022-07-09 16:51:27.814 - warn: influxdb.0 (2633) Error on writePoint("{"value":198.1,"time":"2022-07-09T14:48:58.784Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:51:27.980 - info: The primary host is no longer active. Checking responsibilities.
    2022-07-09 16:51:31.710 - warn: influxdb.0 (2633) Error on writePoint("{"value":241.27,"time":"2022-07-09T14:48:58.784Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:51:33.079 - warn: influxdb.0 (2633) Error on writePoint("{"value":526.41,"time":"2022-07-09T14:48:59.074Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:51:34.020 - warn: influxdb.0 (2633) Error on writePoint("{"value":107.6,"time":"2022-07-09T14:48:58.784Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:51:34.021 - warn: influxdb.0 (2633) Error on writePoint("{"value":1969375.8,"time":"2022-07-09T14:49:03.495Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ETIMEDOUT / "ETIMEDOUT""
    2022-07-09 16:51:34.022 - warn: influxdb.0 (2633) Error on writePoint("{"value":106.9,"time":"2022-07-09T14:49:03.458Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:51:34.139 - warn: influxdb.0 (2633) Error on writePoint("{"value":198.86,"time":"2022-07-09T14:49:03.461Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:51:34.140 - warn: influxdb.0 (2633) Error on writePoint("{"value":0.38,"time":"2022-07-09T14:49:03.450Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:51:34.141 - warn: influxdb.0 (2633) Error on writePoint("{"value":212.23,"time":"2022-07-09T14:49:03.462Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:51:34.142 - warn: influxdb.0 (2633) Error on writePoint("{"value":517.93,"time":"2022-07-09T14:49:03.494Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:51:37.945 - info: influxdb.0 (2633) Connecting http://localhost:8086 ...
    2022-07-09 16:51:37.946 - info: influxdb.0 (2633) Influx DB Version used: 1.x
    2022-07-09 16:52:28.151 - info: The primary host is no longer active. Checking responsibilities.
    2022-07-09 16:52:30.472 - info: influxdb.0 (2633) Store 17 buffered influxDB history points
    2022-07-09 16:58:26.769 - warn: modbus.0 (2648) Poll error count: 1 code: "App Timeout"
    2022-07-09 16:58:33.044 - warn: influxdb.0 (2633) Error on writePoint("{"value":192.46,"time":"2022-07-09T14:57:48.367Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:58:32.822 - warn: modbus.0 (2648) Error: undefined
    2022-07-09 16:58:32.891 - error: modbus.0 (2648) Request timed out.
    2022-07-09 16:58:32.892 - error: modbus.0 (2648) Client in error state.
    2022-07-09 16:58:33.057 - warn: influxdb.0 (2633) Error on writePoint("{"value":194.09,"time":"2022-07-09T14:57:48.367Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:58:33.067 - warn: influxdb.0 (2633) Error on writePoint("{"value":229.08,"time":"2022-07-09T14:57:48.367Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 16:58:37.989 - info: modbus.0 (2648) Disconnected from slave 192.168.178.63
    2022-07-09 17:00:00.825 - info: modbus.0 (2648) Connected to slave 192.168.178.63
    2022-07-09 17:00:00.982 - info: The primary host is no longer active. Checking responsibilities.
    2022-07-09 17:00:00.508 - warn: influxdb.0 (2633) Error on writePoint("{"value":195.52,"time":"2022-07-09T14:58:00.717Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 17:00:00.553 - warn: influxdb.0 (2633) Error on writePoint("{"value":238.81,"time":"2022-07-09T14:58:00.717Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 17:00:00.570 - warn: influxdb.0 (2633) Error on writePoint("{"value":615.63,"time":"2022-07-09T14:58:00.730Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 17:00:00.571 - warn: influxdb.0 (2633) Error on writePoint("{"value":194.14,"time":"2022-07-09T14:58:00.716Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 17:00:00.573 - warn: influxdb.0 (2633) Error on writePoint("{"value":0.49,"time":"2022-07-09T14:58:06.757Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ETIMEDOUT / "ETIMEDOUT""
    2022-07-09 17:00:00.779 - info: influxdb.0 (2633) Connecting http://localhost:8086 ...
    2022-07-09 17:00:00.780 - info: influxdb.0 (2633) Influx DB Version used: 1.x
    2022-07-09 17:01:01.317 - info: The primary host is no longer active. Checking responsibilities.
    2022-07-09 17:02:01.668 - info: The primary host is no longer active. Checking responsibilities.
    2022-07-09 17:02:31.777 - info: influxdb.0 (2633) Store 8 buffered influxDB history points
    2022-07-09 17:02:50.409 - warn: modbus.0 (2648) Poll error count: 1 code: "App Timeout"
    2022-07-09 17:03:05.357 - warn: influxdb.0 (2633) Error on writePoint("{"value":191.74,"time":"2022-07-09T15:02:29.242Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 17:03:42.617 - warn: modbus.0 (2648) Poll error count: 1 code: "App Timeout"
    2022-07-09 17:03:05.368 - warn: influxdb.0 (2633) Error on writePoint("{"value":287,"time":"2022-07-09T15:02:29.242Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 17:03:05.369 - warn: influxdb.0 (2633) Error on writePoint("{"value":110.84,"time":"2022-07-09T15:02:29.242Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 17:03:05.369 - warn: influxdb.0 (2633) Error on writePoint("{"value":585.38,"time":"2022-07-09T15:02:29.291Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 17:03:05.370 - warn: influxdb.0 (2633) Error on writeSeries: Error: ESOCKETTIMEDOUT
    2022-07-09 17:03:05.370 - info: influxdb.0 (2633) Host not available, move all points back in the Buffer
    2022-07-09 17:03:42.697 - warn: influxdb.0 (2633) Error on writePoint("{"value":1969509.4,"time":"2022-07-09T15:02:29.297Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 17:03:42.899 - warn: modbus.0 (2648) Error: undefined
    2022-07-09 17:03:42.900 - error: modbus.0 (2648) Request timed out.
    2022-07-09 17:03:42.900 - error: modbus.0 (2648) Client in error state.
    2022-07-09 17:03:43.046 - info: influxdb.0 (2633) Connecting http://localhost:8086 ...
    2022-07-09 17:03:43.047 - info: influxdb.0 (2633) Influx DB Version used: 1.x
    2022-07-09 17:03:43.238 - warn: influxdb.0 (2633) Error on writePoint("{"value":0.48,"time":"2022-07-09T15:02:32.716Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ETIMEDOUT / "ETIMEDOUT""
    2022-07-09 17:03:43.239 - info: influxdb.0 (2633) Add point that had error for shelly.0.SHPLG-S#FDDB42#1.Relay0.Power to buffer again, error-count=1
    2022-07-09 17:03:43.239 - warn: influxdb.0 (2633) Error on writePoint("{"value":107.98,"time":"2022-07-09T15:02:33.211Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ETIMEDOUT / "ETIMEDOUT""
    2022-07-09 17:03:43.240 - info: influxdb.0 (2633) Add point that had error for shelly.0.SHEM-3#C45BBE78A685#1.Emeter0.Power to buffer again, error-count=1
    2022-07-09 17:03:43.241 - warn: influxdb.0 (2633) Error on writePoint("{"value":193.6,"time":"2022-07-09T15:02:33.212Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ETIMEDOUT / "ETIMEDOUT""
    2022-07-09 17:03:43.241 - info: influxdb.0 (2633) Add point that had error for shelly.0.SHEM-3#C45BBE78A685#1.Emeter1.Power to buffer again, error-count=1
    2022-07-09 17:03:43.242 - warn: influxdb.0 (2633) Error on writePoint("{"value":285.87,"time":"2022-07-09T15:02:33.212Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ETIMEDOUT / "ETIMEDOUT""
    2022-07-09 17:03:43.243 - info: influxdb.0 (2633) Add point that had error for shelly.0.SHEM-3#C45BBE78A685#1.Emeter2.Power to buffer again, error-count=1
    2022-07-09 17:03:43.243 - warn: influxdb.0 (2633) Error on writePoint("{"value":586.89,"time":"2022-07-09T15:02:33.255Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ETIMEDOUT / "ETIMEDOUT""
    2022-07-09 17:03:43.244 - info: influxdb.0 (2633) Add point that had error for shelly.0.SHEM-3#C45BBE78A685#1.Total.InstantPower to buffer again, error-count=1
    2022-07-09 17:03:43.902 - info: modbus.0 (2648) Disconnected from slave 192.168.178.63
    2022-07-09 17:04:44.093 - info: modbus.0 (2648) Connected to slave 192.168.178.63
    2022-07-09 17:07:16.394 - warn: influxdb.0 (2633) Error on writePoint("{"value":541.06,"time":"2022-07-09T15:06:53.046Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: {"error":"timeout"} / "{\"error\":\"timeout\"}\n""
    2022-07-09 17:07:16.395 - info: influxdb.0 (2633) Add point that had error for shelly.0.SHEM-3#C45BBE78A685#1.Total.InstantPower to buffer again, error-count=2
    2022-07-09 17:07:16.402 - warn: influxdb.0 (2633) Error on writePoint("{"value":187.14,"time":"2022-07-09T15:06:53.038Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: {"error":"timeout"} / "{\"error\":\"timeout\"}\n""
    2022-07-09 17:07:16.402 - info: influxdb.0 (2633) Add point that had error for shelly.0.SHEM-3#C45BBE78A685#1.Emeter1.Power to buffer again, error-count=2
    2022-07-09 17:12:30.840 - info: ring.0 (1462) Recieved new Refresh Token. Will use the new one until the token in config gets changed
    2022-07-09 17:12:32.217 - info: influxdb.0 (2633) Store 19 buffered influxDB history points
    2022-07-09 17:14:18.634 - warn: influxdb.0 (2633) Error on writePoint("{"value":107,"time":"2022-07-09T15:13:32.044Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ETIMEDOUT / "ETIMEDOUT""
    2022-07-09 17:14:18.636 - warn: influxdb.0 (2633) Error on writePoint("{"value":128,"time":"2022-07-09T15:13:32.044Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ETIMEDOUT / "ETIMEDOUT""
    2022-07-09 17:14:18.637 - warn: influxdb.0 (2633) Error on writePoint("{"value":241.7,"time":"2022-07-09T15:13:32.045Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ETIMEDOUT / "ETIMEDOUT""
    2022-07-09 17:14:18.652 - warn: influxdb.0 (2633) Error on writePoint("{"value":476.91,"time":"2022-07-09T15:13:32.061Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ETIMEDOUT / "ETIMEDOUT""
    2022-07-09 17:14:29.084 - info: influxdb.0 (2633) Connecting http://localhost:8086 ...
    2022-07-09 17:14:29.605 - info: influxdb.0 (2633) Influx DB Version used: 1.x
    2022-07-09 17:15:00.542 - warn: modbus.0 (2648) Poll error count: 1 code: "App Timeout"
    2022-07-09 17:15:02.711 - warn: modbus.0 (2648) Error: undefined
    2022-07-09 17:15:02.714 - error: modbus.0 (2648) Request timed out.
    2022-07-09 17:15:02.715 - error: modbus.0 (2648) Client in error state.
    2022-07-09 17:15:04.257 - info: modbus.0 (2648) Disconnected from slave 192.168.178.63
    2022-07-09 17:15:36.311 - warn: influxdb.0 (2633) Error on writePoint("{"value":124,"time":"2022-07-09T15:15:03.069Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 17:15:36.313 - warn: influxdb.0 (2633) Error on writePoint("{"value":245.57,"time":"2022-07-09T15:15:03.070Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 17:15:36.314 - warn: influxdb.0 (2633) Error on writePoint("{"value":107.18,"time":"2022-07-09T15:15:03.069Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 17:15:36.315 - warn: influxdb.0 (2633) Error on writePoint("{"value":0.38,"time":"2022-07-09T15:15:03.071Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 17:15:36.315 - warn: influxdb.0 (2633) Error on writePoint("{"value":482.88,"time":"2022-07-09T15:15:03.163Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 17:15:36.316 - warn: influxdb.0 (2633) Error on writePoint("{"value":1969616.1,"time":"2022-07-09T15:15:03.169Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: ESOCKETTIMEDOUT / "ESOCKETTIMEDOUT""
    2022-07-09 17:15:46.313 - info: influxdb.0 (2633) Connecting http://localhost:8086 ...
    2022-07-09 17:15:46.315 - info: influxdb.0 (2633) Influx DB Version used: 1.x
    2022-07-09 17:16:04.743 - info: modbus.0 (2648) Connected to slave 192.168.178.63
    2022-07-09 17:16:23.641 - info: shelly.0 (874) [MQTT] Device 192.168.178.109 (shelly1 / shelly1-E8DB84D73D12 / SHSW-1#E8DB84D73D12#1) connected! Polltime set to 61 sec.
    2022-07-09 17:21:52.120 - warn: influxdb.0 (2633) Error on writePoint("{"value":106.72,"time":"2022-07-09T15:21:17.014Z","from":"system.adapter.shelly.0","q":0,"ack":true}): Error: {"error":"timeout"} / "{\"error\":\"timeout\"}\n""
    2022-07-09 17:21:54.553 - info: influxdb.0 (2633) Add point that had error for shelly.0.SHEM-3#C45BBE78A685#1.Emeter0.Power to buffer again, error-count=2

-

@nash1975 said in ioBroker unstable and delayed, Redis database very large:

My suspicion is that it's the RAM

It is.

@nash1975 said in ioBroker unstable and delayed, Redis database very large:

Swap: 2047 2047
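That quoted line is the telling one: all 2047 MB of swap are in use while only 244 MB of RAM are still available, so the Pi is most likely swapping constantly, which would fit the multi-second switching delays. A minimal sketch for checking this at a glance; `mem_pressure` is a hypothetical helper name, and it assumes the `free -m` column layout shown in this thread (total, used, free, shared, buff/cache, available):

```shell
# mem_pressure (hypothetical helper): reads `free -m` output on stdin and
# prints the available RAM plus the share of swap that is in use.
# Assumed column layout (as in this thread):
#   Mem:  total used free shared buff/cache available
#   Swap: total used free
mem_pressure() {
  awk '
    $1 == "Mem:"  { total = $2 + 0; avail = $7 + 0 }
    $1 == "Swap:" { swap_total = $2 + 0; swap_used = $3 + 0 }
    END {
      pct = (swap_total > 0) ? 100 * swap_used / swap_total : 0
      printf "available RAM: %d of %d MB, swap used: %.0f%%\n", avail, total, pct
    }'
}

# Usage:
#   free -m | mem_pressure
```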

-

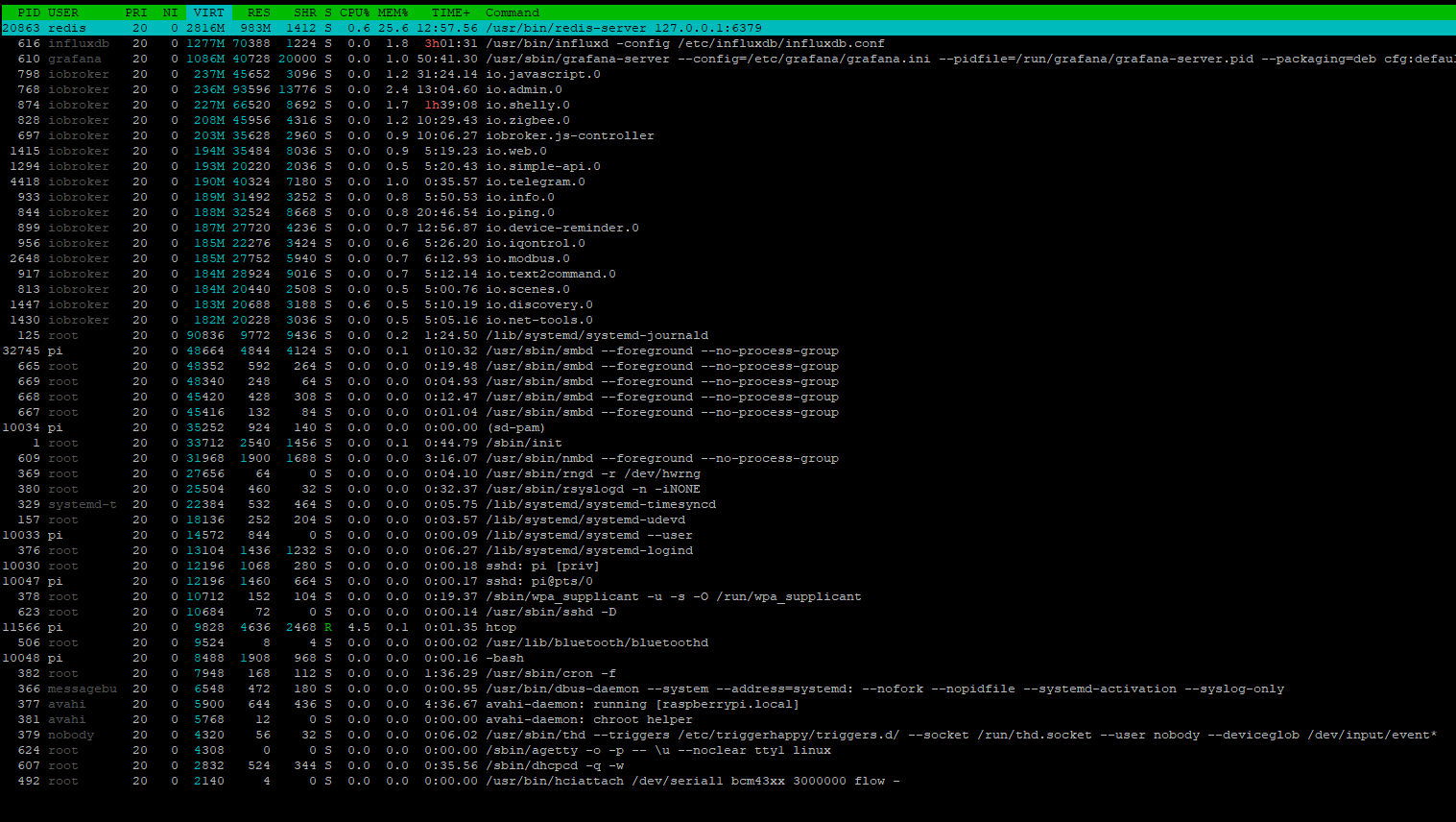

By the way, this is what it looks like when I run htop.

How do you get the Redis DB cleaned up? Can old entries be deleted? Or how else can old, unused entries be removed? Otherwise it will just keep growing over time.

Regards,

Jens -
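Redis itself has no notion of "old" or "unused" ioBroker entries: it only removes keys that are deleted explicitly, that have an expiry (TTL) set, or that are evicted under a `maxmemory` policy. The `INFO memory` output in the first post shows `maxmemory:3221225472` (3 GB) with `maxmemory_policy:noeviction`, which means that once the limit is reached Redis refuses new writes instead of dropping anything. A minimal sketch to check how close an instance is to that limit; `redis_maxmem_report` is a hypothetical helper name and only reads the three fields shown:

```shell
# redis_maxmem_report (hypothetical helper): reads `redis-cli info memory`
# output on stdin and reports used_memory relative to maxmemory.
redis_maxmem_report() {
  # INFO lines end in CRLF; strip the CR so the last field compares cleanly
  tr -d '\r' | awk -F ':' '
    $1 == "used_memory"      { used = $2 + 0 }
    $1 == "maxmemory"        { max = $2 + 0 }
    $1 == "maxmemory_policy" { policy = $2 }
    END {
      if (max > 0) printf "used %.1f%% of maxmemory, policy=%s\n", 100 * used / max, policy
      else         printf "no maxmemory limit set, policy=%s\n", policy
      if (policy == "noeviction")
        print "noeviction: Redis never drops keys on its own; writes fail once the limit is reached"
    }'
}

# Usage:
#   redis-cli info memory | redis_maxmem_report
```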

@nash1975 I'm not very familiar with Redis; for Redis in general there is https://forum.iobroker.net/topic/26327/redis-in-iobroker-überblick or also https://www.iobroker.net/#de/documentation/config/redis.md

or

https://redis.io/docs/manual/eviction/

Did you switch states and objects to Redis? You can check with

iobroker setup custom -

@crunchip

Yes. States and objects are running on Redis.

At the beginning of my ioBroker career I worked through the ioBroker course by Haus-Automatisierung, which recommended this. When my problems started, I had already read that the objects might have been better left on "Files".

Does switching still make sense now? Or will I just end up annoyed by the latency of a huge file database? I had already read the linked Redis article, but it doesn't really help me.

    pi@raspberrypi:~ $ iobroker setup custom
    Current configuration:
    - Objects database:
      - Type: redis
      - Host/Unix Socket: 127.0.0.1
      - Port: 6379
    - States database:
      - Type: redis
      - Host/Unix Socket: 127.0.0.1
      - Port: 6379

Regards,

Jens -

@nash1975 said in ioBroker unstable and delayed, Redis database very large:

Objekte: 4346, Zustände: 3734

@nash1975 said in ioBroker unstable and delayed, Redis database very large:

When my problems started, I had already read that the objects might have been better left on "Files".

With those numbers, in my opinion it wouldn't have been necessary to switch at all.

@nash1975 said in ioBroker unstable and delayed, Redis database very large:

Does switching still make sense now?

I can't tell you that. I also had Redis running, but I switched to jsonl and haven't had any problems so far.

I currently have 46836 objects and 43513 states. -

@nash1975 said in ioBroker unstable and delayed, Redis database very large:

2907072774

OK, so the Redis data points eat up about 2.7 GB; on my system it's 16 MB (with almost 40,000 states as well).

I didn't know the command `redis-cli --bigkeys` yet, thanks for that. It already lists which data points are so "fat": not all of them, but the biggest ones. For me it's a GIF of the weather radar; for you it's probably the ring.0 adapter (that could be investigated more closely). So: get rid of it (as far as I understand, the data points in Redis should be deleted as soon as they are deleted in ioBroker), or flee forward and get a Raspberry Pi with 8 instead of 4 GB.
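`redis-cli --bigkeys` only names the single biggest key per type, so it does not show how much a whole adapter adds up to. A minimal sketch that sums the sizes of ioBroker's file keys per adapter, assuming the `cfg.f.<adapter>$%$<path>$%$data` / `...$%$meta` naming visible in the bigkeys output in the first post; `sum_by_adapter` is a hypothetical helper name, and the sizes are fed in by calling `STRLEN` on each key:

```shell
# sum_by_adapter (hypothetical helper): reads "key<TAB>bytes" lines on stdin,
# groups ioBroker file keys (cfg.f.<adapter>$%$<path>$%$data|meta) by <adapter>
# and prints "<adapter><TAB><total bytes>", largest first. Other keys are ignored.
sum_by_adapter() {
  awk -F '\t' '
    $1 ~ /^cfg\.f\./ {
      name = substr($1, 7)              # strip the "cfg.f." prefix
      cut = index(name, "$%$")          # the adapter part ends at the first separator
      if (cut > 0) name = substr(name, 1, cut - 1)
      total[name] += $2
    }
    END { for (a in total) printf "%s\t%.0f\n", a, total[a] }
  ' | sort -t "$(printf '\t')" -k2,2nr
}

# Usage (read-only, one STRLEN call per key, so it takes a while):
#   redis-cli --scan --pattern 'cfg.f.*' | while IFS= read -r k; do
#     printf '%s\t%s\n' "$k" "$(redis-cli strlen "$k")"
#   done | sum_by_adapter | head
```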

-

@bananajoe I just checked: the Ring adapter makes heavy use of BinaryStates. With Redis, all of that ends up in RAM:

-

@haus-automatisierung depending on how often the data points change, that's not a bad thing in itself

Except with that many Rings on one Raspberry Pi. It seems to store little video clips there; maybe that can be limited in the adapter? -

@bananajoe said in ioBroker unstable and delayed, Redis database very large:

l

First of all, thanks to everyone for the help.

I deleted the Ring instance last night.

The complete ring.0 tree under "Objects" as well. But now, after 24 hours, the Redis database is still just as large.

Isn't there an automatic mechanism that "cleans up" such a database and deletes objects that are no longer used? How can I do that manually?

If there's no such thing, it would only be a matter of time until the 8 GB of a bigger Raspberry Pi were full as well.

There's the Redis FLUSHALL command. Can I use it, so that the database rebuilds itself with the states actually in use and ioBroker stays operational?

Or will nothing work at all afterwards?

    # Memory
    used_memory:2919110636
    used_memory_human:2.72G
    used_memory_rss:1025953792
    used_memory_rss_human:978.43M
    used_memory_peak:2939070180
    used_memory_peak_human:2.74G
    used_memory_peak_perc:99.32%
    used_memory_overhead:3924390
    used_memory_startup:604088
    used_memory_dataset:2915186246
    used_memory_dataset_perc:99.89%
    allocator_allocated:2919125336
    allocator_active:1025872896
    allocator_resident:1025872896
    total_system_memory:4024160256
    total_system_memory_human:3.75G
    used_memory_lua:80896
    used_memory_lua_human:79.00K
    used_memory_scripts:5416
    used_memory_scripts_human:5.29K
    number_of_cached_scripts:8
    maxmemory:3221225472
    maxmemory_human:3.00G
    maxmemory_policy:noeviction
    allocator_frag_ratio:0.35
    allocator_frag_bytes:2401714856
    allocator_rss_ratio:1.00
    allocator_rss_bytes:0
    rss_overhead_ratio:1.00
    rss_overhead_bytes:80896
    mem_fragmentation_ratio:0.35
    mem_fragmentation_bytes:-1893171544
    mem_not_counted_for_evict:0
    mem_replication_backlog:0
    mem_clients_slaves:0
    mem_clients_normal:1762546
    mem_aof_buffer:0
    mem_allocator:libc
    active_defrag_running:0
    lazyfree_pending_objects:0

Best regards,

Jens -

@nash1975 I don't know that exactly, or rather I can't answer it for you.

Probably not, because all the data lives in Redis.
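With the configuration posted earlier (objects and states both on Redis at 127.0.0.1:6379), `FLUSHALL` would not only drop the Ring files but every ioBroker object, file and state in that instance. A minimal sketch to see what that instance actually holds, grouped by key prefix; `cfg.f.` and `cfg.s.` appear in the bigkeys output in the first post, while `cfg.o.` for objects and `io.` for states are assumptions about ioBroker's default key names, and `count_by_prefix` is a hypothetical helper:

```shell
# count_by_prefix (hypothetical helper): reads Redis key names on stdin and
# counts them per namespace: "cfg.<x>" for cfg.* keys, otherwise the first
# dot-separated part (e.g. "io").
count_by_prefix() {
  awk '
    {
      n = split($0, part, "[.]")
      if (part[1] == "cfg" && n > 1) prefix = part[1] "." part[2]
      else prefix = part[1]
      count[prefix]++
    }
    END { for (p in count) printf "%s\t%d\n", p, count[p] }
  ' | LC_ALL=C sort
}

# Usage (read-only; SCAN iterates incrementally instead of blocking like KEYS):
#   redis-cli --scan | count_by_prefix
```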

Something like deleting everything in Redis and restoring a backup might work. Or delete them directly in Redis:

`redis-cli --scan --pattern 'cfg.f.ring.0*'` should list only the Ring data points (there is no ring.0. under Objects in ioBroker anymore, right?)

and with `redis-cli --scan --pattern 'cfg.f.ring.0*' | xargs redis-cli del` you could then delete them (borrowed from here: https://linuxhint.com/delete-keys-redis-database/)

If it doesn't find any, great, they're already gone.
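The two commands above can be wrapped so that a first run only counts the matches and nothing is deleted until `--apply` is given. A minimal sketch; `del_keys_matching` is a hypothetical helper name, and `-r` / `-d '\n'` are GNU xargs options (available on Raspberry Pi OS) that skip the `del` call entirely when nothing matches and pass each key through unchanged. The pattern is quoted so the shell cannot expand the `*` itself:

```shell
# del_keys_matching (hypothetical helper): delete Redis keys matching a glob.
# Without --apply it only counts the matches (dry run).
#   $1  Redis glob pattern, e.g. 'cfg.f.ring.0*' (quote it when calling)
#   $2  optional: --apply to really delete
del_keys_matching() {
  pattern=$1
  matches=$(redis-cli --scan --pattern "$pattern" | wc -l | tr -d ' ')
  if [ "${2:-}" != "--apply" ]; then
    echo "$matches keys match '$pattern' (dry run, nothing deleted)"
    return 0
  fi
  # -r: do not run redis-cli at all if the scan found nothing
  # -d '\n': one key per line, quotes and spaces in key names are kept as-is
  redis-cli --scan --pattern "$pattern" | xargs -r -d '\n' redis-cli del
}

# Usage:
#   del_keys_matching 'cfg.f.ring.0*'           # count only
#   del_keys_matching 'cfg.f.ring.0*' --apply   # delete
```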

How much RAM is Redis using now? It may be that it doesn't shrink the file but reuses the freed space later, just without loading all of it. According to Google, it may well not shrink, but RAM usage was your problem, not how much space it takes up on disk.
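The `INFO memory` output can show this directly. `used_memory` is what Redis has allocated for its data, `used_memory_rss` is what the operating system actually keeps resident in RAM for the process, and the Redis documentation notes that used memory far above RSS means part of Redis' memory has been swapped out, while RSS far above used memory points to fragmentation or to memory Redis freed but the allocator has not handed back to the OS. In the first post the ratio was 0.35 (2.72G used, 984.86M resident); in the output posted after the cleanup later in the thread it is 3.47 (155.90M used, 541.30M resident). A minimal sketch that computes and interprets the ratio; `redis_rss_check` is a hypothetical helper name and the 1.5 threshold is a rough rule of thumb, not a Redis limit:

```shell
# redis_rss_check (hypothetical helper): reads `redis-cli info memory` output
# on stdin and interprets used_memory_rss / used_memory.
redis_rss_check() {
  tr -d '\r' | awk -F ':' '
    $1 == "used_memory"     { used = $2 + 0 }
    $1 == "used_memory_rss" { rss = $2 + 0 }
    END {
      if (used == 0) { print "used_memory not found"; exit 1 }
      ratio = rss / used
      printf "rss/used ratio: %.2f\n", ratio
      if (ratio < 1)        print "used > rss: part of Redis memory is swapped out, expect latency"
      else if (ratio > 1.5) print "rss > used: fragmentation or freed memory not yet returned to the OS"
      else                  print "rss and used are close"
    }'
}

# Usage:
#   redis-cli info memory | redis_rss_check
```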

-

@bananajoe

Hello,

deleting the Ring objects worked well. I now have 1.7 GB available again.

Next I'll reinstall the Ring adapter and see whether I can configure it somewhere so that not so much gets written to Redis.

Thanks to everyone.

The community is really great.

    pi@raspberrypi:~ $ free -m
                   total        used        free      shared  buff/cache   available
    Mem:            3837        1973        1518          10         345        1763
    Swap:           2047        1106         941

    # Memory
    used_memory:163474536
    used_memory_human:155.90M
    used_memory_rss:567595008
    used_memory_rss_human:541.30M
    used_memory_peak:2939070180
    used_memory_peak_human:2.74G
    used_memory_peak_perc:5.56%
    used_memory_overhead:3343806
    used_memory_startup:604088
    used_memory_dataset:160130730
    used_memory_dataset_perc:98.32%
    allocator_allocated:163440760
    allocator_active:567526400
    allocator_resident:567526400
    total_system_memory:4024160256
    total_system_memory_human:3.75G
    used_memory_lua:68608
    used_memory_lua_human:67.00K
    used_memory_scripts:5416
    used_memory_scripts_human:5.29K
    number_of_cached_scripts:8
    maxmemory:3221225472
    maxmemory_human:3.00G
    maxmemory_policy:noeviction
    allocator_frag_ratio:3.47
    allocator_frag_bytes:404085640
    allocator_rss_ratio:1.00
    allocator_rss_bytes:0
    rss_overhead_ratio:1.00
    rss_overhead_bytes:68608
    mem_fragmentation_ratio:3.47
    mem_fragmentation_bytes:404154248
    mem_not_counted_for_evict:0
    mem_replication_backlog:0
    mem_clients_slaves:0
    mem_clients_normal:1961674
    mem_aof_buffer:0
    mem_allocator:libc
    active_defrag_running:0
    lazyfree_pending_objects:0

-

@nash1975 Thanks a lot for this info. Apparently exactly the same thing happened to me. I have 20 GB of RAM on my NAS, which Redis filled up. After deleting the ring data it's running reasonably well again.

How did you solve the problem of it filling up again and again? -

@manuxi

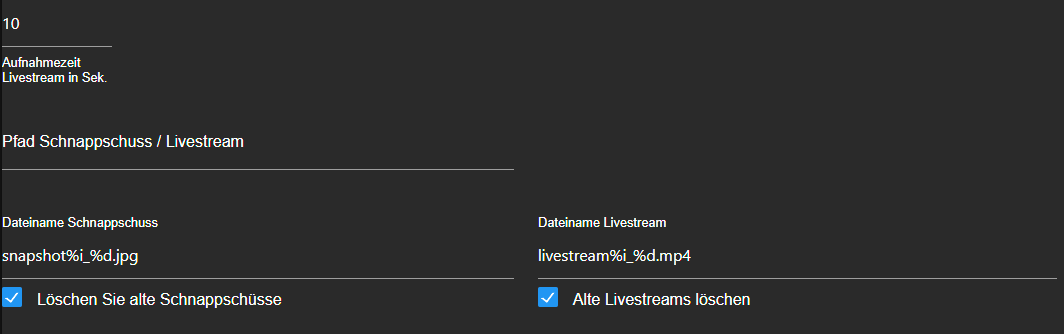

I think there were settings in the Ring adapter for permanently saving the Ring media files.

But I'm not sure, and I no longer use ioBroker; I switched to Home Assistant a few months ago. Regards

-

@nash1975 Hm, this is how it looks for me, and I didn't change anything there. So it doesn't seem to work...

Maybe someone else has an idea... -

@manuxi can't you specify a direct path there?

i.e. /opt/iobroker/iobroker-data and then the file name

otherwise open an issue for the adapter on GitHub

-

@arteck It wasn't clear to me how the path is formed. I'll take a look. Thanks for the hint!