
[solved] Error with HMIP adapter

-

I keep getting these error messages, and then hm-rpc is stopped.

After restarting the HM, it runs again for a while. I have not changed any settings.

hm-rpc.1 2022-03-19 15:58:34.497 error Init not possible, going to stop: Unknown XML-RPC tag 'TITLE'
hm-rpc.1 2022-03-19 15:57:53.535 error Ping error [TinkerboardS:hm-rpc.1:4922f854741899559ac6a004cdeeb06d]: Unknown XML-RPC tag 'TITLE'
hm-rpc.1 2022-03-19 15:57:24.862 error Ping error [TinkerboardS:hm-rpc.1:4922f854741899559ac6a004cdeeb06d]: Unknown XML-RPC tag 'TITLE'

I hope someone has a good idea.

Until now the HM has run flawlessly.

-

@gregors said in Error with HMIP adapter:

Init not possible, going to stop: Unknown XML-RPC tag

What does your system look like?

-

@meister-mopper

What do you mean?

It is a Tinkerboard with piVCCU3 and an RPI-RF-MOD on the GPIOs.

I have never changed the system (Homematic) since setting it up.

Until now it has always run flawlessly.

Some time ago I had a defective power supply, which crashed ioBroker.

But after 2-3 attempts the system booted cleanly again.

-

@gregors This problem has come up here before.

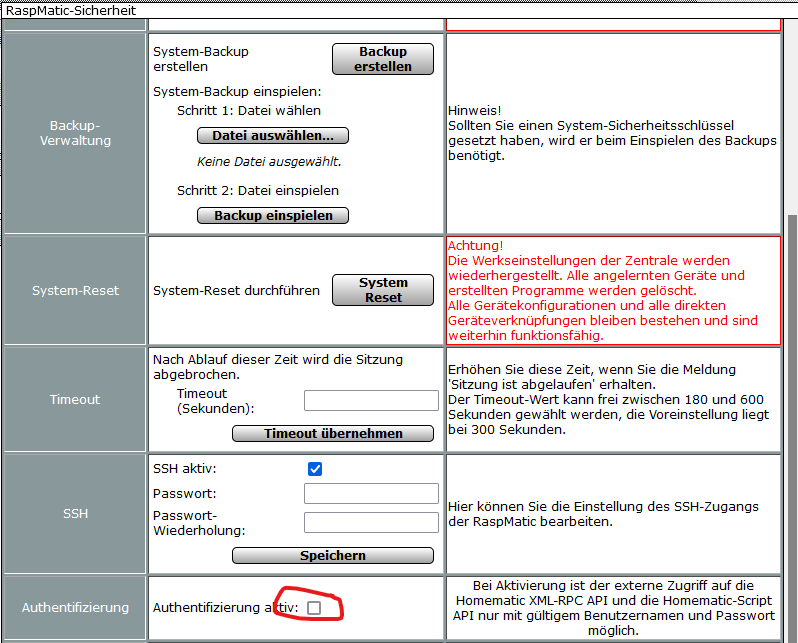

Back then, authentication was disabled in the CCU under Settings/Control Panel/Security (Einstellungen/Systemsteuerung/Sicherheit).

-

It has always been disabled on my system.

-

@gregors Which adapter version?

Can you set the log level to debug? Then the log may contain more detail.

-

@wendy2702

I will do that, but at the moment everything is running again.

I restarted the HM.

I expect the errors will be back tomorrow, though.

-

Here is the current debug log:

hm-rpc.1 2022-03-19 19:33:18.961 debug hm-rpc.1.001F5A49940021.0.CARRIER_SENSE_LEVEL ==> UNIT: "%" (min: 0, max: 100) From "30" => "30" hm-rpc.1 2022-03-19 19:33:18.959 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","001F5A49940021:0","CARRIER_SENSE_LEVEL",30] hm-rpc.1 2022-03-19 19:33:12.756 debug hm-rpc.1.000A9BE990CFC3.0.UNREACH ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:12.752 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","UNREACH",true] hm-rpc.1 2022-03-19 19:33:12.427 debug hm-rpc.1.000A9BE990CFC3.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:12.414 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:12.401 debug hm-rpc.1.000A9BE990CFC3.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:12.401 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:12.391 debug hm-rpc.1.000A9BE990CFC3.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:12.379 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:12.369 debug hm-rpc.1.000A9BE990CFC3.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:12.369 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:12.354 debug hm-rpc.1.000A9BE990CFC3.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" 
hm-rpc.1 2022-03-19 19:33:12.353 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:12.344 debug hm-rpc.1.000A9BE990CFC3.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:12.343 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:12.335 debug hm-rpc.1.000A9BE990CFC3.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:12.334 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:12.325 debug hm-rpc.1.000A9BE990CFC3.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:12.324 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:12.314 debug hm-rpc.1.000A9BE990CFC3.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:12.313 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:12.303 debug hm-rpc.1.000A9BE990CFC3.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:12.302 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:12.253 debug hm-rpc.1.000A9BE990CFC3.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:12.252 debug xmlrpc <- event 
["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:12.211 debug hm-rpc.1.000A9BE990CFC3.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:12.210 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:12.184 debug hm-rpc.1.000A9BE990CFC3.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:12.183 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:12.148 debug hm-rpc.1.000A9BE990CFC3.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:12.147 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:12.089 debug hm-rpc.1.000A9BE990C35D.0.UNREACH ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:12.088 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","UNREACH",true] hm-rpc.1 2022-03-19 19:33:12.057 debug hm-rpc.1.000A9BE990CFC3.0.RSSI_DEVICE ==> UNIT: "undefined" (min: -128, max: 127) From "-63" => "-63" hm-rpc.1 2022-03-19 19:33:12.056 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","RSSI_DEVICE",-63] hm-rpc.1 2022-03-19 19:33:12.054 debug xml multicall <event>: TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0,000A9BE990CFC3:0,RSSI_DEVICE,-63 hm-rpc.1 2022-03-19 19:33:12.053 debug hm-rpc.1.000A9BE990CFC3.0.UNREACH ==> UNIT: "undefined" (min: undefined, max: undefined) From "false" => "false" hm-rpc.1 2022-03-19 19:33:12.050 debug xmlrpc <- event 
["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","UNREACH",false] hm-rpc.1 2022-03-19 19:33:12.049 debug xml multicall <event>: TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0,000A9BE990CFC3:0,UNREACH,false hm-rpc.1 2022-03-19 19:33:12.049 debug hm-rpc.1.000A9BE990CFC3.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:12.048 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990CFC3:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:12.047 debug xml multicall <event>: TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0,000A9BE990CFC3:0,CONFIG_PENDING,true hm-rpc.1 2022-03-19 19:33:11.642 debug hm-rpc.1.000A9BE990C35D.0.UNREACH ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:11.641 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","UNREACH",true] hm-rpc.1 2022-03-19 19:33:10.984 debug hm-rpc.1.000A9BE990C35D.0.UNREACH ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:10.971 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","UNREACH",true] hm-rpc.1 2022-03-19 19:33:10.509 debug hm-rpc.1.000A9BE990C35D.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:10.508 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:10.501 debug hm-rpc.1.000A9BE990C35D.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:10.500 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:10.491 debug 
hm-rpc.1.000A9BE990C35D.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:10.491 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:10.479 debug hm-rpc.1.000A9BE990C35D.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:10.478 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:10.466 debug hm-rpc.1.000A9BE990C35D.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:10.465 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:10.450 debug hm-rpc.1.000A9BE990C35D.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:10.449 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:10.435 debug hm-rpc.1.000A9BE990C35D.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:10.434 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:10.421 debug hm-rpc.1.000A9BE990C35D.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:10.420 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:10.393 debug hm-rpc.1.000A9BE990C35D.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 
2022-03-19 19:33:10.392 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:10.360 debug hm-rpc.1.000A9BE990C35D.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:10.359 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:10.344 debug hm-rpc.1.000A9BE990C35D.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:10.343 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:10.296 debug hm-rpc.1.000A9BE990C35D.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:10.282 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:10.262 debug hm-rpc.1.000A9BE990C35D.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:10.257 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:10.237 debug hm-rpc.1.000A9BE990C35D.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:10.236 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:10.213 debug hm-rpc.1.000A9BE990C35D.0.RSSI_DEVICE ==> UNIT: "undefined" (min: -128, max: 127) From "-71" => "-71" hm-rpc.1 2022-03-19 19:33:10.213 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","RSSI_DEVICE",-71] 
hm-rpc.1 2022-03-19 19:33:10.212 debug xml multicall <event>: TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0,000A9BE990C35D:0,RSSI_DEVICE,-71 hm-rpc.1 2022-03-19 19:33:10.211 debug hm-rpc.1.000A9BE990C35D.0.UNREACH ==> UNIT: "undefined" (min: undefined, max: undefined) From "false" => "false" hm-rpc.1 2022-03-19 19:33:10.210 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","UNREACH",false] hm-rpc.1 2022-03-19 19:33:10.210 debug xml multicall <event>: TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0,000A9BE990C35D:0,UNREACH,false hm-rpc.1 2022-03-19 19:33:10.209 debug hm-rpc.1.000A9BE990C35D.0.CONFIG_PENDING ==> UNIT: "undefined" (min: undefined, max: undefined) From "true" => "true" hm-rpc.1 2022-03-19 19:33:10.202 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","000A9BE990C35D:0","CONFIG_PENDING",true] hm-rpc.1 2022-03-19 19:33:10.198 debug xml multicall <event>: TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0,000A9BE990C35D:0,CONFIG_PENDING,true hm-rpc.1 2022-03-19 19:33:04.824 debug hm-rpc.1.001F5A49940021.0.CARRIER_SENSE_LEVEL ==> UNIT: "%" (min: 0, max: 100) From "40" => "40" hm-rpc.1 2022-03-19 19:33:04.822 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","001F5A49940021:0","CARRIER_SENSE_LEVEL",40] hm-rpc.1 2022-03-19 19:33:04.646 debug hm-rpc.1.001F5A49940021.0.DUTY_CYCLE_LEVEL ==> UNIT: "%" (min: 0, max: 100) From "2.5" => "2.5" hm-rpc.1 2022-03-19 19:33:04.645 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","001F5A49940021:0","DUTY_CYCLE_LEVEL",2.5] hm-rpc.1 2022-03-19 19:33:03.957 debug hm-rpc.1.001F5A49940021.0.CARRIER_SENSE_LEVEL ==> UNIT: "%" (min: 0, max: 100) From "40" => "40" hm-rpc.1 2022-03-19 19:33:03.956 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","001F5A49940021:0","CARRIER_SENSE_LEVEL",40] hm-rpc.1 2022-03-19 19:32:52.901 debug xmlrpc <- event: 
hm-rpc.1.CENTRAL.0.PONG:TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0 discarded, no matching device
hm-rpc.1 2022-03-19 19:32:52.900 debug xmlrpc <- event ["TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0","CENTRAL:0","PONG","TinkerboardS:hm-rpc.1:ba680bd58c67f291c17ab3d927898aa0"]
hm-rpc.1 2022-03-19 19:32:52.851 debug PING ok
hm-rpc.1 2022-03-19 19:32:52.828 debug Send PING...
hm-rpc.1 2022-03-19 19:32:52.827 debug [KEEPALIVE] Check if connection is alive
hm-rpc.1 2022-03-19 19:32:38.069 info Loglevel changed from "error" to "debug"

There are no problems with the XML tag here, either.

-

Here is the error report after hm-rpc crashed again.

host.TinkerboardS 2022-03-21 08:10:47.428 info instance system.adapter.hm-rpc.1 started with pid 26845
host.TinkerboardS 2022-03-21 08:10:17.105 info Restart adapter system.adapter.hm-rpc.1 because enabled
host.TinkerboardS 2022-03-21 08:10:17.103 info instance system.adapter.hm-rpc.1 terminated with code 0 (NO_ERROR)
hm-rpc.1 2022-03-21 08:10:16.255 info Terminated (NO_ERROR): Without reason
hm-rpc.1 2022-03-21 08:10:16.254 debug Plugin sentry destroyed
hm-rpc.1 2022-03-21 08:10:16.251 info terminating
hm-rpc.1 2022-03-21 08:10:15.940 error Init not possible, going to stop: Unknown XML-RPC tag 'TITLE'
hm-rpc.1 2022-03-21 08:10:15.245 info xmlrpc -> 192.168.10.94:2010/ init ["http://192.168.10.95:2010",""]
hm-rpc.1 2022-03-21 08:10:05.906 debug xmlrpc -> 192.168.10.94:2010/ init ["http://192.168.10.95:2010","TinkerboardS:hm-rpc.1:a7e25b72b50e29f420f7ed3738b27d29"]
hm-rpc.1 2022-03-21 08:09:45.242 error Init not possible, going to stop: Unknown XML-RPC tag 'TITLE'
hm-rpc.1 2022-03-21 08:09:40.941 info Loglevel changed from "error" to "debug"

That is all the information I get.

-

@gregors Which adapter version?

What do the CCU log and the syslog show at that point in time?

Do ioBroker and piVCCU both run on the Tinkerboard?

-

Adapter HM-RPC 1.15.11

Both run together on the Tinkerboard. I have no data for the matching point in time.

There are no entries in hmserver.log before 08:20:54. Tomorrow, when it is down again, I will take a look and save the files.

I don't have a syslog.

-

@gregors said in Error with HMIP adapter:

Unknown XML-RPC tag 'TITLE'

Search for that error. I think it has something to do with auth.

Or had your CCU just been restarted at that point?

-

@gregors said in Error with HMIP adapter:

I don't have a syslog

Every Linux system has a syslog by default.
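
To line up the syslog with the time of a crash, a small filter can help. The following is only a sketch: it assumes the classic `Mon DD HH:MM:SS` timestamp at the start of each line and a log path such as `/var/log/syslog`, which may differ on your image. The function name `lines_near` is made up for this example.

```python
from datetime import datetime, timedelta
from typing import Iterable, List


def lines_near(lines: Iterable[str], when: datetime, window_minutes: int = 5) -> List[str]:
    """Return syslog lines whose timestamp is within +/- window_minutes of `when`.

    Assumes the traditional syslog prefix "Mar 22 13:00:01" (15 characters).
    That prefix has no year, so the year of `when` is used.
    """
    window = timedelta(minutes=window_minutes)
    hits = []
    for line in lines:
        try:
            stamp = datetime.strptime(f"{when.year} {line[:15]}", "%Y %b %d %H:%M:%S")
        except ValueError:
            continue  # not a timestamped line, skip it
        if abs(stamp - when) <= window:
            hits.append(line.rstrip("\n"))
    return hits


# Example (path is an assumption, adjust to your system):
# with open("/var/log/syslog", errors="replace") as fh:
#     for hit in lines_near(fh, datetime(2022, 3, 21, 8, 10, 15)):
#         print(hit)
```

The same filter would work on any log that uses this timestamp prefix; other formats need a different `strptime` pattern.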

-

@wendy2702

Here are the log entries for the relevant point in time:

ioBroker

hm-rpc.1 2022-03-22 13:13:39.607 error Init not possible, going to stop: Unknown XML-RPC tag 'TITLE'
hm-rpc.1 2022-03-22 13:13:10.961 error Init not possible, going to stop: Unknown XML-RPC tag 'TITLE'
host.TinkerboardS 2022-03-22 13:10:54.342 info instance system.adapter.hm-rpc.1 started with pid 21751
host.TinkerboardS 2022-03-22 13:10:24.014 info Restart adapter system.adapter.hm-rpc.1 because enabled
host.TinkerboardS 2022-03-22 13:10:24.012 info instance system.adapter.hm-rpc.1 terminated with code 0 (NO_ERROR)
hm-rpc.1 2022-03-22 13:10:23.012 error Init not possible, going to stop: Unknown XML-RPC tag 'TITLE'
hm-rpc.1 2022-03-22 13:09:52.286 error Init not possible, going to stop: Unknown XML-RPC tag 'TITLE'
host.TinkerboardS 2022-03-22 13:05:07.568 info instance system.adapter.hm-rpc.1 started with pid 8959
host.TinkerboardS 2022-03-22 13:04:37.307 info Restart adapter system.adapter.hm-rpc.1 because enabled
host.TinkerboardS 2022-03-22 13:04:37.306 info instance system.adapter.hm-rpc.1 terminated with code 0 (NO_ERROR)
hm-rpc.1 2022-03-22 13:04:34.816 error Ping error [TinkerboardS:hm-rpc.1:51dcc5ed69e2a2a1112f1fb8201bf1a2]: Unknown XML-RPC tag 'TITLE'
hm-rpc.1 2022-03-22 13:04:05.448 error Init not possible, going to stop: Unknown XML-RPC tag 'TITLE'
hm-rpc.1 2022-03-22 13:04:04.097 error Ping error [TinkerboardS:hm-rpc.1:51dcc5ed69e2a2a1112f1fb8201bf1a2]: Unknown XML-RPC tag 'TITLE'
hm-rpc.1 2022-03-22 13:03:35.430 error Ping error [TinkerboardS:hm-rpc.1:51dcc5ed69e2a2a1112f1fb8201bf1a2]: Unknown XML-RPC tag 'TITLE'
hm-rpc.1 2022-03-22 13:03:04.717 error Ping error [TinkerboardS:hm-rpc.1:51dcc5ed69e2a2a1112f1fb8201bf1a2]: Unknown XML-RPC tag 'TITLE'
hm-rpc.1 2022-03-22 13:02:33.989 error Ping error [TinkerboardS:hm-rpc.1:51dcc5ed69e2a2a1112f1fb8201bf1a2]: Unknown XML-RPC tag 'TITLE'

Tinkerboard CCU3

Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] I/O exception (java.net.SocketException) caught when connecting to {}->http://192.168.10.95:2010: Too many open files
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] Retrying connect to {}->http://192.168.10.95:2010
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] I/O exception (java.net.SocketException) caught when connecting to {}->http://192.168.10.95:2010: Too many open files
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] Retrying connect to {}->http://192.168.10.95:2010
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] I/O exception (java.net.SocketException) caught when connecting to {}->http://192.168.10.95:2010: Too many open files
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] Retrying connect to {}->http://192.168.10.95:2010
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] I/O exception (java.net.SocketException) caught when connecting to {}->http://192.168.10.95:2010: Too many open files
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] Retrying connect to {}->http://192.168.10.95:2010
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] I/O exception (java.net.SocketException) caught when connecting to {}->http://192.168.10.95:2010: Too many open files
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] Retrying connect to {}->http://192.168.10.95:2010
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] I/O exception (java.net.SocketException) caught when connecting to {}->http://192.168.10.95:2010: Too many open files
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] Retrying connect to {}->http://192.168.10.95:2010
Mar 22 13:01:32 de.eq3.cbcs.server.core.persistence.AbstractPersistency ERROR [vert.x-worker-thread-4] The config file of the device 3014F711A0000A9BE990C516 could not be read
java.nio.file.FileSystemException: /etc/config/crRFD/data/3014F711A0000A9BE990C516.dev: Too many open files
    at sun.nio.fs.UnixException.translateToIOException(UnixException.java:91)
    at sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:102)
    at sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:107)
    at sun.nio.fs.UnixFileSystemProvider.newByteChannel(UnixFileSystemProvider.java:214)
    at java.nio.file.Files.newByteChannel(Files.java:361)
    at java.nio.file.Files.newByteChannel(Files.java:407)
    at java.nio.file.spi.FileSystemProvider.newInputStream(FileSystemProvider.java:384)
    at java.nio.file.Files.newInputStream(Files.java:152)
    at de.eq3.cbcs.persistence.kryo.KryoPersistenceWorker.loadSpecificDevice(KryoPersistenceWorker.java:1723)
    at de.eq3.cbcs.persistence.kryo.KryoPersistenceWorker.loadSpecificDevice(KryoPersistenceWorker.java:1696)
    at de.eq3.cbcs.server.core.persistence.AbstractPersistency.handleGetDevicePendingConfigurationCommand(AbstractPersistency.java:295)
    at de.eq3.cbcs.persistence.kryo.KryoPersistenceWorker.handleDeviceSpecificCommand(KryoPersistenceWorker.java:432)
    at de.eq3.cbcs.persistence.kryo.KryoPersistenceWorker.handle(KryoPersistenceWorker.java:309)
    at de.eq3.cbcs.persistence.kryo.KryoPersistenceWorker.handle(KryoPersistenceWorker.java:89)
    at io.vertx.core.eventbus.impl.HandlerRegistration.deliver(HandlerRegistration.java:212)
    at io.vertx.core.eventbus.impl.HandlerRegistration.handle(HandlerRegistration.java:191)
    at io.vertx.core.eventbus.impl.EventBusImpl.lambda$deliverToHandler$3(EventBusImpl.java:505)
    at io.vertx.core.impl.ContextImpl.lambda$wrapTask$2(ContextImpl.java:337)
    at io.vertx.core.impl.TaskQueue.lambda$new$0(TaskQueue.java:60)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)
Mar 22 13:01:32 de.eq3.cbcs.server.core.persistence.AbstractPersistency ERROR [vert.x-worker-thread-4] The config file of the device 3014F711A0000A9BE990C516 could not be read
java.nio.file.FileSystemException: /etc/config/crRFD/data/3014F711A0000A9BE990C516.dev: Too many open files
    at sun.nio.fs.UnixException.translateToIOException(UnixException.java:91)
    at sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:102)
    at sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:107)
    at sun.nio.fs.UnixFileSystemProvider.newByteChannel(UnixFileSystemProvider.java:214)
    at java.nio.file.Files.newByteChannel(Files.java:361)
    at java.nio.file.Files.newByteChannel(Files.java:407)
    at java.nio.file.spi.FileSystemProvider.newInputStream(FileSystemProvider.java:384)
    at java.nio.file.Files.newInputStream(Files.java:152)
    at de.eq3.cbcs.persistence.kryo.KryoPersistenceWorker.loadSpecificDevice(KryoPersistenceWorker.java:1723)
    at de.eq3.cbcs.persistence.kryo.KryoPersistenceWorker.loadSpecificDevice(KryoPersistenceWorker.java:1696)
    at de.eq3.cbcs.server.core.persistence.AbstractPersistency.handleGetDevicePendingConfigurationCommand(AbstractPersistency.java:295)
    at de.eq3.cbcs.persistence.kryo.KryoPersistenceWorker.handleDeviceSpecificCommand(KryoPersistenceWorker.java:432)
    at de.eq3.cbcs.persistence.kryo.KryoPersistenceWorker.handle(KryoPersistenceWorker.java:309)
    at de.eq3.cbcs.persistence.kryo.KryoPersistenceWorker.handle(KryoPersistenceWorker.java:89)
    at io.vertx.core.eventbus.impl.HandlerRegistration.deliver(HandlerRegistration.java:212)
    at io.vertx.core.eventbus.impl.HandlerRegistration.handle(HandlerRegistration.java:191)
    at io.vertx.core.eventbus.impl.EventBusImpl.lambda$deliverToHandler$3(EventBusImpl.java:505)
    at io.vertx.core.impl.ContextImpl.lambda$wrapTask$2(ContextImpl.java:337)
    at io.vertx.core.impl.TaskQueue.lambda$new$0(TaskQueue.java:60)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] I/O exception (java.net.SocketException) caught when connecting to {}->http://192.168.10.95:2010: Too many open files
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] Retrying connect to {}->http://192.168.10.95:2010
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] I/O exception (java.net.SocketException) caught when connecting to {}->http://192.168.10.95:2010: Too many open files
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] Retrying connect to {}->http://192.168.10.95:2010
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] I/O exception (java.net.SocketException) caught when connecting to {}->http://192.168.10.95:2010: Too many open files
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] Retrying connect to {}->http://192.168.10.95:2010
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] I/O exception (java.net.SocketException) caught when connecting to {}->http://192.168.10.95:2010: Too many open files
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] Retrying connect to {}->http://192.168.10.95:2010
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] I/O exception (java.net.SocketException) caught when connecting to {}->http://192.168.10.95:2010: Too many open files
Mar 22 13:01:32 org.apache.http.impl.client.DefaultHttpClient INFO [TinkerboardS:hm-rpc.1:c7416048ecb12d8a5c8edb34030a96c9_WorkerPool-1] Retrying connect to {}->http://192.168.10.95:2010
Mar 22 13:01:32 de.eq3.cbcs.server.core.persistence.AbstractPersistency ERROR [vert.x-worker-thread-4] The config file of the device 3014F711A0000A9BE990C516 could not be read
java.nio.file.FileSystemException: /etc/config/crRFD/data/3014F711A0000A9BE990C516.dev: Too many open files
    at sun.nio.fs.UnixException.translateToIOException(UnixException.java:91)
    at sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:102)
    at sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:107)
    at sun.nio.fs.UnixFileSystemProvider.newByteChannel(UnixFileSystemProvider.java:214)
    at java.nio.file.Files.newByteChannel(Files.java:361)
    at java.nio.file.Files.newByteChannel(Files.java:407)
    at java.nio.file.spi.FileSystemProvider.newInputStream(FileSystemProvider.java:384)
    at java.nio.file.Files.newInputStream(Files.java:152)
    at de.eq3.cbcs.persistence.kryo.KryoPersistenceWorker.loadSpecificDevice(KryoPersistenceWorker.java:1723)
    at de.eq3.cbcs.persistence.kryo.KryoPersistenceWorker.loadSpecificDevice(KryoPersistenceWorker.java:1696)
    at de.eq3.cbcs.server.core.persistence.AbstractPersistency.handleGetDevicePendingConfigurationCommand(AbstractPersistency.java:295)
    at de.eq3.cbcs.persistence.kryo.KryoPersistenceWorker.handleDeviceSpecificCommand(KryoPersistenceWorker.java:432)
    at de.eq3.cbcs.persistence.kryo.KryoPersistenceWorker.handle(KryoPersistenceWorker.java:309)
    at de.eq3.cbcs.persistence.kryo.KryoPersistenceWorker.handle(KryoPersistenceWorker.java:89)
    at io.vertx.core.eventbus.impl.HandlerRegistration.deliver(HandlerRegistration.java:212)
    at io.vertx.core.eventbus.impl.HandlerRegistration.handle(HandlerRegistration.java:191)
    at io.vertx.core.eventbus.impl.EventBusImpl.lambda$deliverToHandler$3(EventBusImpl.java:505)
    at io.vertx.core.impl.ContextImpl.lambda$wrapTask$2(ContextImpl.java:337)
    at io.vertx.core.impl.TaskQueue.lambda$new$0(TaskQueue.java:60)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)

Syslog

Mar 22 13:00:01 localhost CRON[29949]: (root) CMD (/usr/lib/armbian/armbian-truncate-logs)
Mar 22 13:05:01 localhost CRON[8841]: (root) CMD (command -v debian-sa1 > /dev/null && debian-sa1 1 1)
Mar 22 13:15:01 localhost CRON[30745]: (root) CMD (/usr/lib/armbian/armbian-truncate-logs)
Mar 22 13:15:01 localhost CRON[30751]: (root) CMD (command -v debian-sa1 > /dev/null && debian-sa1 1 1)

What puzzles me is that it runs for many hours and then stops working.

-

@gregors said in Error with HMIP adapter:

Too many open files

Well,

when you restart, all files are presumably closed again.

But right now I have no idea which file(s) are open there.

Maybe @foxriver76 has an idea.
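
One way to narrow down which process is holding the files is to count the entries under `/proc/<pid>/fd`. This is a rough sketch, not something from the adapter or the CCU: it assumes a Linux host, usually needs root to see other users' processes, and the function names `count_open_fds` and `top_consumers` are made up for this example.

```python
import os
from typing import Dict, List, Tuple


def count_open_fds(proc_root: str = "/proc") -> Dict[int, int]:
    """Map each visible PID to its number of open file descriptors."""
    counts: Dict[int, int] = {}
    if not os.path.isdir(proc_root):
        return counts  # not a Linux-style /proc, nothing to report
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # only numeric directories are processes
        try:
            counts[int(entry)] = len(os.listdir(os.path.join(proc_root, entry, "fd")))
        except OSError:
            continue  # process exited in the meantime or access was denied
    return counts


def top_consumers(counts: Dict[int, int], n: int = 5) -> List[Tuple[int, int]]:
    """Return the n (pid, fd_count) pairs with the most open descriptors."""
    return sorted(counts.items(), key=lambda item: item[1], reverse=True)[:n]


# Example: print the five processes with the most open descriptors.
# for pid, fds in top_consumers(count_open_fds()):
#     print(pid, fds)
```

Running this a few times over several hours would show whether one process keeps climbing towards its limit before the adapter fails.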

-

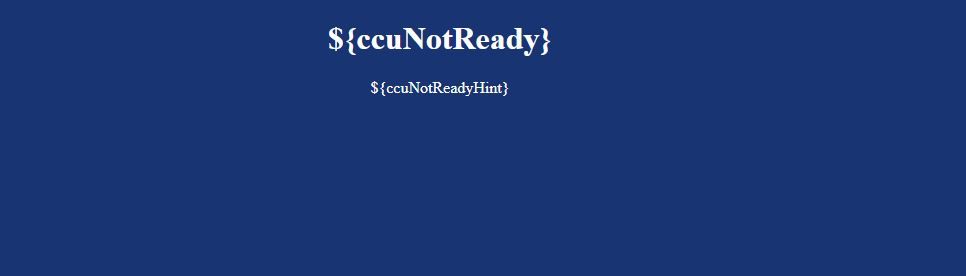

When this error message appears, it means the adapter is getting an HTML page back instead of an XML-RPC response. Just open IP + port and see what it says.
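
The same check can be scripted. The sketch below is an assumption-laden illustration, not the adapter's code: `classify_rpc_response`, `html_title` and `probe` are names made up here, and whether the CCU answers the standard XML-RPC introspection call `system.listMethods` on that port is assumed, not confirmed. The point is only to tell an XML-RPC answer apart from an HTML page such as one with a `<title>` tag.

```python
import re
import urllib.request
from typing import Optional


def classify_rpc_response(body: str) -> str:
    """Return 'xml-rpc', 'html' or 'unknown' for a response body."""
    head = body.lstrip()[:512].lower()
    if "<methodresponse" in head:
        return "xml-rpc"
    if head.startswith("<!doctype html") or "<html" in head:
        return "html"
    return "unknown"


def html_title(body: str) -> Optional[str]:
    """Extract the <title> text of an HTML page, if there is one."""
    match = re.search(r"<title>(.*?)</title>", body, re.IGNORECASE | re.DOTALL)
    return match.group(1).strip() if match else None


def probe(url: str, timeout: float = 10.0) -> str:
    """POST a minimal XML-RPC call to `url` and return the raw response body."""
    payload = (
        b'<?xml version="1.0"?>'
        b"<methodCall><methodName>system.listMethods</methodName><params></params></methodCall>"
    )
    request = urllib.request.Request(url, data=payload, headers={"Content-Type": "text/xml"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("iso-8859-1", errors="replace")


# Example (address taken from the adapter log in this thread, adjust to yours):
# body = probe("http://192.168.10.94:2010/")
# print(classify_rpc_response(body), html_title(body))
```

If the result is `html`, the title of the page usually hints at which service answered instead of the RPC interface.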

-

How exactly do I do that, in the browser?

Which port do I have to specify?

-

@gregors IP of the CCU:port (normally the one you also entered in the adapter)

-

@foxriver76

I opened the page.

This is the result:

The HM WebUI responds immediately, whereas this request took a good 5 minutes.

-

Here is the page source as well:

<!DOCTYPE html>
<html lang="de">
<head>
  <meta http-equiv="Content-Type" content="text/html; charset=iso-8859-1">
  <title>HomeMatic</title>
  <style rel="stylesheet" type="text/css">
    .Invisible { display: none; }
  </style>
  <script type="text/javascript" src="/webui/js/extern/jquery.js?_version_=2.0pre1"></script>
  <script type="text/javascript" src="/webui/js/extern/jqueryURLPlugin.js?_version_=2.0pre1"></script>
  <script type="text/javascript" src="/webui/js/lang/loadTextResource.js"></script>
  <script type="text/javascript" src="/webui/js/lang/translate.js"></script>
  <script type="text/javascript">
    CHECK_INTERVAL = 3000; // Intervall, in dem geprüft wird, ob der ReGa Webserver aktiv ist

    /**
     * Erzeugt eine Instanz des XMLHttpRequest-Objekts
     */
    createXMLHttpRequest = function() {
      var xmlHttp = null;
      if ( window.XMLHttpRequest ) {
        xmlHttp = new XMLHttpRequest();
      } else if ( window.ActiveXObject ) {
        try {
          xmlHttp = new ActiveXObject("Msxml2.XMLHTTP");
        } catch (ex) {
          try {
            xmlHttp = new ActiveXObject("Microsoft.XMLHTTP");
          } catch (ex) {
            // leer
          }
        }
      }
      return xmlHttp;
    };

    /**
     * Prüft zyklsich, ob der ReGa Webserver verfügbar ist.
     */
    check = function() {
      var request = createXMLHttpRequest();
      if (request) {
        request.open("GET", "/ise/checkrega.cgi", false); // synchrone Anfrage
        request.send(null);
        if ("OK" == request.responseText) {
          window.setTimeout("window.location.href='/index.htm'", 1000);
        } else {
          window.setTimeout("check();", CHECK_INTERVAL);
        }
      }
    };

    /**
     * Wird beim Laden der Seite aufgerufen.
     **/
    startup = function() {
      var content = document.getElementById("content");
      content.className = "";
      check();
    };
  </script>
</head>
<body style="background-color: #183473;color: #FFFFFF;" onload="startup();">
  <div id="content" class="Invisible">
    <div id="content_" align="center" >
      <h1>${ccuNotReady}</h1>
      <p>${ccuNotReadyHint}</p>
    </div>
    <div align="center" style="padding-top:250px;">
      <img id="imgLogo" src="/ise/img/hm-logo.png" alt=""/>
    </div>
  </div>
  <script type="text/javascript">
    translatePage();
  </script>
  <noscript>
    <div align="center">
      <!-- <p>Um die HomeMatic WebUI nutzen zu können, muss JavaScript in Ihrem Browser aktiviert sein.</p> -->
      <p>Please activate JavaScript in your browser</p>
    </div>
  </noscript>
</body>
</html>