
@crunchip Shelly devices are currently not displayed or supported because of the special character # in their state IDs; see GitHub issue #49.
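To check in advance which states are affected, a small script along these lines might help. It is only a sketch: the thread confirms `#` as the problematic character and nothing more, and the names `UNSUPPORTED_CHARS` and `find_unsupported_ids` are made up for illustration, not taken from the ioGo adapter.

```python
# Characters that make a state ID unusable for the app.
# Assumption: only "#" is confirmed by this thread; extend the set if needed.
UNSUPPORTED_CHARS = {"#"}


def find_unsupported_ids(state_ids):
    """Return the IDs that contain at least one unsupported character."""
    return [sid for sid in state_ids if any(ch in sid for ch in UNSUPPORTED_CHARS)]


ids = [
    "shelly.0.SHSW-1#554877#1.Relay0.Switch",
    "hm-rega.0.1978",
]
print(find_unsupported_ids(ids))
```

Only the Shelly ID is reported here, because it is the only one containing `#`.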

@MGK OK, I didn't know that.

Thanks for the info.

-

@nisio After several hours, the number of states has now risen to 814, even though I haven't added any. The values of the states are still "null".

@MGK 814 states is quite a lot. What does the ioBroker log show while the states are being loaded? Once the message "database initialized with xyz states" appears, are all the values in the app still null afterwards?
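If the log has been saved as text, something like the following Python sketch could pull out the relevant lines. It assumes the usual ioBroker layout of `<date> <time> - <level>: iogo.0 <message>`; the function name `summarize_sync` is hypothetical, and the sample lines are taken from the debug log posted further down in this thread.

```python
import re

# Matches summary messages such as "database initialized with 22 enums".
INIT_RE = re.compile(r"database initialized with (\d+) (states|objects|enums)")
# Matches error entries of the iogo.0 instance. The message runs up to the
# next timestamp or the end of the text, so flattened logs work as well.
ERROR_RE = re.compile(
    r"- error: iogo\.0 (.*?)"
    r"(?=\s+\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3} - |\s*$)",
    re.DOTALL,
)


def summarize_sync(log_text):
    """Collect the last reported count per kind and all error messages."""
    counts = {}
    for number, kind in INIT_RE.findall(log_text):
        counts[kind] = int(number)  # later messages overwrite earlier ones
    errors = [message.strip() for message in ERROR_RE.findall(log_text)]
    return counts, errors


sample = (
    "2019-02-13 22:30:28.478 - info: iogo.0 database initialized with 22 enums\n"
    "2019-02-13 22:30:29.118 - error: iogo.0 forbidden path: "
    "shelly.0.SHSW-1#554877#1.Relay0.Switch\n"
    "2019-02-13 22:30:29.152 - info: iogo.0 database initialized with 0 states\n"
)
counts, errors = summarize_sync(sample)
print(counts)
print(errors)
```

A count of 0 for `states` together with a "forbidden path" error would suggest that the state sync was aborted rather than completed.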

-

When I try to rearrange rooms or functions in the app, the icons in front of them do not move along with them.

-

@nisio The problems are that the numbers of rooms and functions don't match, that there are duplicate entries for rooms and functions, and that the icons don't move along when entries are reordered.

-

@nisio Here is a debug log from the ioGo adapter during the sync:

2019-02-13 22:30:26.203 - debug: iogo.0 objectDB connected
2019-02-13 22:30:26.246 - debug: iogo.0 statesDB connected
2019-02-13 22:30:26.253 - info: iogo.0 States connected to redis: 0.0.0.0:6379
2019-02-13 22:30:26.412 - info: iogo.0 starting. Version 0.3.2 in /opt/iobroker/node_modules/iobroker.iogo, node: v8.15.0
2019-02-13 22:30:26.466 - info: iogo.0 initialize app devices
2019-02-13 22:30:26.812 - debug: iogo.0 redis pmessage io.* io.hm-rega.0.1978 {"val":-45.4,"ack":true,"ts":1550093426810,"q":0,"from":"system.adapter.hm-rega.0","lc":1550093426810}
2019-02-13 22:30:26.816 - debug: iogo.0 redis pmessage io.* io.hm-rega.0.1979 {"val":315.1,"ack":true,"ts":1550093426810,"q":0,"from":"system.adapter.hm-rega.0","lc":1550093426810}
2019-02-13 22:30:26.816 - debug: iogo.0 redis pmessage io.* io.hm-rega.0.10139 {"val":-0.760965,"ack":true,"ts":1550093426810,"q":0,"from":"system.adapter.hm-rega.0","lc":1550093426810}
2019-02-13 22:30:26.817 - debug: iogo.0 redis pmessage io.* io.hm-rega.0.22572 {"val":1,"ack":true,"ts":1550093426811,"q":0,"from":"system.adapter.hm-rega.0","lc":1550090726684}
2019-02-13 22:30:26.817 - debug: iogo.0 redis pmessage io.* io.hm-rega.0.22746 {"val":0,"ack":true,"ts":1550093426811,"q":0,"from":"system.adapter.hm-rega.0","lc":1550089526584}
2019-02-13 22:30:27.401 - info: iogo.0 logged in as: ***************************
2019-02-13 22:30:27.405 - info: iogo.0 PRO features enabled
2019-02-13 22:30:27.424 - info: iogo.0 removed states from remote database
2019-02-13 22:30:27.430 - info: iogo.0 removed objects from remote database
2019-02-13 22:30:27.432 - info: iogo.0 removed enums from remote database
2019-02-13 22:30:27.465 - debug: iogo.0 redis pmessage io.* io.iogo.0.info.connection {"val":true,"ack":true,"ts":1550093427459,"q":0,"from":"system.adapter.iogo.0","lc":1550093427459}
2019-02-13 22:30:27.697 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.alive {"val":true,"ack":true,"ts":1550093427696,"q":0,"from":"system.host.ioBroker","lc":1550023555058}
2019-02-13 22:30:27.699 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.load {"val":0.56,"ack":true,"ts":1550093427698,"q":0,"from":"system.host.ioBroker","lc":1550093427698}
2019-02-13 22:30:27.701 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.mem {"val":18,"ack":true,"ts":1550093427700,"q":0,"from":"system.host.ioBroker","lc":1550093427700}
2019-02-13 22:30:27.702 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.memRss {"val":72.21,"ack":true,"ts":1550093427701,"q":0,"from":"system.host.ioBroker","lc":1550093427701}
2019-02-13 22:30:27.704 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.memHeapTotal {"val":41.26,"ack":true,"ts":1550093427703,"q":0,"from":"system.host.ioBroker","lc":1550093427703}
2019-02-13 22:30:27.706 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.memHeapUsed {"val":33.33,"ack":true,"ts":1550093427705,"q":0,"from":"system.host.ioBroker","lc":1550093427705}
2019-02-13 22:30:27.707 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.uptime {"val":69875,"ack":true,"ts":1550093427706,"q":0,"from":"system.host.ioBroker","lc":1550093427706}
2019-02-13 22:30:27.709 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.freemem {"val":169,"ack":true,"ts":1550093427708,"q":0,"from":"system.host.ioBroker","lc":1550093427708}
2019-02-13 22:30:27.711 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.inputCount {"val":13,"ack":true,"ts":1550093427710,"q":0,"from":"system.host.ioBroker","lc":1550093412569}
2019-02-13 22:30:27.713 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.outputCount {"val":12,"ack":true,"ts":1550093427712,"q":0,"from":"system.host.ioBroker","lc":1550093427712}
2019-02-13 22:30:27.952 - debug: iogo.0 redis pmessage io.*.logging io.system.adapter.admin.0.logging {"val":true,"ack":true,"ts":1550093427923,"q":0,"from":"system.adapter.admin.0","lc":1550093427923}
2019-02-13 22:30:27.952 - debug: iogo.0 system.adapter.admin.0: logging true
2019-02-13 22:30:27.954 - debug: iogo.0 redis pmessage io.* io.system.adapter.admin.0.logging {"val":true,"ack":true,"ts":1550093427923,"q":0,"from":"system.adapter.admin.0","lc":1550093427923}
2019-02-13 22:30:27.954 - debug: iogo.0 system.adapter.admin.0: logging true
2019-02-13 22:30:28.478 - info: iogo.0 database initialized with 22 enums
2019-02-13 22:30:29.118 - error: iogo.0 forbidden path: shelly.0.SHSW-1#554877#1.Relay0.Switch
2019-02-13 22:30:29.118 - error: iogo.0 forbidden path: shelly.0.SHSW-1#554877#1.Relay0.Switch
2019-02-13 22:30:29.152 - info: iogo.0 database initialized with 0 states
2019-02-13 22:30:29.242 - info: iogo.0 database initialized with 22 enums
2019-02-13 22:30:29.746 - info: iogo.0 database initialized with 67 objects
2019-02-13 22:30:30.593 - debug: iogo.0 redis pmessage io.* io.system.adapter.web.0.alive {"val":true,"ack":true,"ts":1550093430590,"q":0,"from":"system.adapter.web.0","lc":1550023577289}
2019-02-13 22:30:30.594 - debug: iogo.0 redis pmessage io.* io.system.adapter.web.0.connected {"val":true,"ack":true,"ts":1550093430590,"q":0,"from":"system.adapter.web.0","lc":1550023577290}
2019-02-13 22:30:30.595 - debug: iogo.0 redis pmessage io.* io.system.adapter.web.0.memRss {"val":51.25,"ack":true,"ts":1550093430591,"q":0,"from":"system.adapter.web.0","lc":1550093430591}
2019-02-13 22:30:30.595 - debug: iogo.0 redis pmessage io.* io.system.adapter.web.0.memHeapTotal {"val":14.53,"ack":true,"ts":1550093430591,"q":0,"from":"system.adapter.web.0","lc":1550091044112}
2019-02-13 22:30:30.595 - debug: iogo.0 redis pmessage io.* io.system.adapter.web.0.memHeapUsed {"val":10.89,"ack":true,"ts":1550093430591,"q":0,"from":"system.adapter.web.0","lc":1550093430591}
2019-02-13 22:30:30.596 - debug: iogo.0 redis pmessage io.* io.system.adapter.web.0.uptime {"val":69856,"ack":true,"ts":1550093430591,"q":0,"from":"system.adapter.web.0","lc":1550093430591}
2019-02-13 22:30:30.596 - debug: iogo.0 redis pmessage io.* io.system.adapter.web.0.inputCount {"val":1,"ack":true,"ts":1550093430591,"q":0,"from":"system.adapter.web.0","lc":1550093430591}
2019-02-13 22:30:30.596 - debug: iogo.0 redis pmessage io.* io.system.adapter.web.0.outputCount {"val":8,"ack":true,"ts":1550093430592,"q":0,"from":"system.adapter.web.0","lc":1550090143506}
2019-02-13 22:30:31.644 - debug: iogo.0 redis pmessage io.* io.system.adapter.socketio.0.alive {"val":true,"ack":true,"ts":1550093431640,"q":0,"from":"system.adapter.socketio.0","lc":1550023562966}
2019-02-13 22:30:31.646 - debug: iogo.0 redis pmessage io.* io.system.adapter.socketio.0.connected {"val":true,"ack":true,"ts":1550093431641,"q":0,"from":"system.adapter.socketio.0","lc":1550023562969}
2019-02-13 22:30:31.649 - debug: iogo.0 redis pmessage io.* io.system.adapter.socketio.0.memRss {"val":47.51,"ack":true,"ts":1550093431643,"q":0,"from":"system.adapter.socketio.0","lc":1550093401634}
2019-02-13 22:30:31.651 - debug: iogo.0 redis pmessage io.* io.system.adapter.socketio.0.memHeapTotal {"val":12.53,"ack":true,"ts":1550093431645,"q":0,"from":"system.adapter.socketio.0","lc":1550037161790}
2019-02-13 22:30:31.654 - debug: iogo.0 redis pmessage io.* io.system.adapter.socketio.0.memHeapUsed {"val":9.65,"ack":true,"ts":1550093431647,"q":0,"from":"system.adapter.socketio.0","lc":1550093431647}
2019-02-13 22:30:31.655 - debug: iogo.0 redis pmessage io.* io.system.adapter.socketio.0.uptime {"val":69873,"ack":true,"ts":1550093431648,"q":0,"from":"system.adapter.socketio.0","lc":1550093431648}
2019-02-13 22:30:31.656 - debug: iogo.0 redis pmessage io.* io.system.adapter.socketio.0.inputCount {"val":1,"ack":true,"ts":1550093431650,"q":0,"from":"system.adapter.socketio.0","lc":1550093431650}
2019-02-13 22:30:31.656 - debug: iogo.0 redis pmessage io.* io.system.adapter.socketio.0.outputCount {"val":8,"ack":true,"ts":1550093431651,"q":0,"from":"system.adapter.socketio.0","lc":1550023592893}
2019-02-13 22:30:31.855 - debug: iogo.0 redis pmessage io.* io.shelly.0.SHSW-1#554877#1.rssi {"val":-69,"ack":true,"ts":1550093431853,"q":0,"from":"system.adapter.shelly.0","lc":1550093431853}
2019-02-13 22:30:32.261 - debug: iogo.0 redis pmessage io.* io.system.adapter.ebus.0.alive {"val":true,"ack":true,"ts":1550093432257,"q":0,"from":"system.adapter.ebus.0","lc":1550093402257}
2019-02-13 22:30:32.263 - debug: iogo.0 redis pmessage io.* io.system.adapter.ebus.0.connected {"val":true,"ack":true,"ts":1550093432258,"q":0,"from":"system.adapter.ebus.0","lc":1550093402258}
2019-02-13 22:30:32.271 - debug: iogo.0 redis pmessage io.* io.system.adapter.ebus.0.memRss {"val":34.77,"ack":true,"ts":1550093432260,"q":0,"from":"system.adapter.ebus.0","lc":1550093432260}
2019-02-13 22:30:32.274 - debug: iogo.0 redis pmessage io.* io.system.adapter.ebus.0.memHeapTotal {"val":13.53,"ack":true,"ts":1550093432261,"q":0,"from":"system.adapter.ebus.0","lc":1550093432261}
2019-02-13 22:30:32.276 - debug: iogo.0 redis pmessage io.* io.system.adapter.ebus.0.memHeapUsed {"val":9.75,"ack":true,"ts":1550093432262,"q":0,"from":"system.adapter.ebus.0","lc":1550093432262}
2019-02-13 22:30:32.277 - debug: iogo.0 redis pmessage io.* io.system.adapter.ebus.0.uptime {"val":32,"ack":true,"ts":1550093432264,"q":0,"from":"system.adapter.ebus.0","lc":1550093432264}
2019-02-13 22:30:32.277 - debug: iogo.0 redis pmessage io.* io.system.adapter.ebus.0.inputCount {"val":1,"ack":true,"ts":1550093432265,"q":0,"from":"system.adapter.ebus.0","lc":1550093432265}
2019-02-13 22:30:32.279 - debug: iogo.0 redis pmessage io.* io.system.adapter.ebus.0.outputCount {"val":8,"ack":true,"ts":1550093432266,"q":0,"from":"system.adapter.ebus.0","lc":1550093432266}
2019-02-13 22:30:32.888 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.0.alive {"val":true,"ack":true,"ts":1550093432884,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550023570838}
2019-02-13 22:30:32.889 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.0.connected {"val":true,"ack":true,"ts":1550093432885,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550023570839}
2019-02-13 22:30:32.889 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.0.memRss {"val":44.09,"ack":true,"ts":1550093432885,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550093417883}
2019-02-13 22:30:32.890 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.0.memHeapTotal {"val":21.03,"ack":true,"ts":1550093432885,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550060715601}
2019-02-13 22:30:32.890 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.0.memHeapUsed {"val":10.62,"ack":true,"ts":1550093432885,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550093432885}
2019-02-13 22:30:32.890 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.0.uptime {"val":69865,"ack":true,"ts":1550093432885,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550093432885}
2019-02-13 22:30:32.891 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.0.inputCount {"val":1,"ack":true,"ts":1550093432886,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550093417885}
2019-02-13 22:30:32.891 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.0.outputCount {"val":8,"ack":true,"ts":1550093432886,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550093432886}
2019-02-13 22:30:36.204 - debug: iogo.0 redis pmessage io.* io.system.adapter.influxdb.0.alive {"val":true,"ack":true,"ts":1550093436202,"q":0,"from":"system.adapter.influxdb.0","lc":1550023581735}
2019-02-13 22:30:36.206 - debug: iogo.0 redis pmessage io.* io.system.adapter.influxdb.0.connected {"val":true,"ack":true,"ts":1550093436203,"q":0,"from":"system.adapter.influxdb.0","lc":1550023581736}
2019-02-13 22:30:36.207 - debug: iogo.0 redis pmessage io.* io.system.adapter.influxdb.0.memRss {"val":57.2,"ack":true,"ts":1550093436203,"q":0,"from":"system.adapter.influxdb.0","lc":1550093436203}
2019-02-13 22:30:36.208 - debug: iogo.0 redis pmessage io.* io.system.adapter.influxdb.0.memHeapTotal {"val":23.07,"ack":true,"ts":1550093436204,"q":0,"from":"system.adapter.influxdb.0","lc":1550091980835}
2019-02-13 22:30:36.209 - debug: iogo.0 redis pmessage io.* io.system.adapter.influxdb.0.memHeapUsed {"val":15.38,"ack":true,"ts":1550093436204,"q":0,"from":"system.adapter.influxdb.0","lc":1550093436204}
2019-02-13 22:30:36.210 - debug: iogo.0 redis pmessage io.* io.system.adapter.influxdb.0.uptime {"val":69857,"ack":true,"ts":1550093436204,"q":0,"from":"system.adapter.influxdb.0","lc":1550093436204}
2019-02-13 22:30:36.210 - debug: iogo.0 redis pmessage io.* io.system.adapter.influxdb.0.inputCount {"val":109,"ack":true,"ts":1550093436205,"q":0,"from":"system.adapter.influxdb.0","lc":1550093436205}
2019-02-13 22:30:36.212 - debug: iogo.0 redis pmessage io.* io.system.adapter.influxdb.0.outputCount {"val":8,"ack":true,"ts":1550093436206,"q":0,"from":"system.adapter.influxdb.0","lc":1550023686721}
2019-02-13 22:30:36.742 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.1.alive {"val":true,"ack":true,"ts":1550093436740,"q":0,"from":"system.adapter.hm-rpc.1","lc":1550023573675}
2019-02-13 22:30:36.744 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.1.connected {"val":true,"ack":true,"ts":1550093436740,"q":0,"from":"system.adapter.hm-rpc.1","lc":1550023573676}
2019-02-13 22:30:36.745 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.1.memRss {"val":55.23,"ack":true,"ts":1550093436741,"q":0,"from":"system.adapter.hm-rpc.1","lc":1550093421730}
2019-02-13 22:30:36.747 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.1.memHeapTotal {"val":18.53,"ack":true,"ts":1550093436742,"q":0,"from":"system.adapter.hm-rpc.1","lc":1550086998586}
2019-02-13 22:30:36.747 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.1.memHeapUsed {"val":10.85,"ack":true,"ts":1550093436742,"q":0,"from":"system.adapter.hm-rpc.1","lc":1550093436742}
2019-02-13 22:30:36.749 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.1.uptime {"val":69866,"ack":true,"ts":1550093436743,"q":0,"from":"system.adapter.hm-rpc.1","lc":1550093436743}
2019-02-13 22:30:36.749 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.1.inputCount {"val":1,"ack":true,"ts":1550093436744,"q":0,"from":"system.adapter.hm-rpc.1","lc":1550093406731}
2019-02-13 22:30:36.750 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.1.outputCount {"val":8,"ack":true,"ts":1550093436745,"q":0,"from":"system.adapter.hm-rpc.1","lc":1550093436745}
2019-02-13 22:30:37.225 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rega.0.alive {"val":true,"ack":true,"ts":1550093437221,"q":0,"from":"system.adapter.hm-rega.0","lc":1550053581043}
2019-02-13 22:30:37.226 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rega.0.connected {"val":true,"ack":true,"ts":1550093437222,"q":0,"from":"system.adapter.hm-rega.0","lc":1550053581045}
2019-02-13 22:30:37.227 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rega.0.memRss {"val":53.71,"ack":true,"ts":1550093437222,"q":0,"from":"system.adapter.hm-rega.0","lc":1550093437222}
2019-02-13 22:30:37.228 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rega.0.memHeapTotal {"val":17.03,"ack":true,"ts":1550093437223,"q":0,"from":"system.adapter.hm-rega.0","lc":1550092866945}
2019-02-13 22:30:37.229 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rega.0.memHeapUsed {"val":12.22,"ack":true,"ts":1550093437223,"q":0,"from":"system.adapter.hm-rega.0","lc":1550093437223}
2019-02-13 22:30:37.230 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rega.0.uptime {"val":39858,"ack":true,"ts":1550093437223,"q":0,"from":"system.adapter.hm-rega.0","lc":1550093437223}
2019-02-13 22:30:37.231 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rega.0.inputCount {"val":6,"ack":true,"ts":1550093437224,"q":0,"from":"system.adapter.hm-rega.0","lc":1550093437224}
2019-02-13 22:30:37.231 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rega.0.outputCount {"val":13,"ack":true,"ts":1550093437224,"q":0,"from":"system.adapter.hm-rega.0","lc":1550093437224}
2019-02-13 22:30:38.804 - debug: iogo.0 redis pmessage io.* io.system.adapter.shelly.0.alive {"val":true,"ack":true,"ts":1550093438797,"q":0,"from":"system.adapter.shelly.0","lc":1550086386801}
2019-02-13 22:30:38.806 - debug: iogo.0 redis pmessage io.* io.system.adapter.shelly.0.connected {"val":true,"ack":true,"ts":1550093438798,"q":0,"from":"system.adapter.shelly.0","lc":1550086386803}
2019-02-13 22:30:38.806 - debug: iogo.0 redis pmessage io.* io.system.adapter.shelly.0.memRss {"val":40.28,"ack":true,"ts":1550093438798,"q":0,"from":"system.adapter.shelly.0","lc":1550093438798}
2019-02-13 22:30:38.807 - debug: iogo.0 redis pmessage io.* io.system.adapter.shelly.0.memHeapTotal {"val":12.53,"ack":true,"ts":1550093438799,"q":0,"from":"system.adapter.shelly.0","lc":1550093138741}
2019-02-13 22:30:38.808 - debug: iogo.0 redis pmessage io.* io.system.adapter.shelly.0.memHeapUsed {"val":10.57,"ack":true,"ts":1550093438799,"q":0,"from":"system.adapter.shelly.0","lc":1550093438799}
2019-02-13 22:30:38.809 - debug: iogo.0 redis pmessage io.* io.system.adapter.shelly.0.uptime {"val":7054,"ack":true,"ts":1550093438800,"q":0,"from":"system.adapter.shelly.0","lc":1550093438800}
2019-02-13 22:30:38.809 - debug: iogo.0 redis pmessage io.* io.system.adapter.shelly.0.inputCount {"val":2,"ack":true,"ts":1550093438800,"q":0,"from":"system.adapter.shelly.0","lc":1550093408805}
2019-02-13 22:30:38.810 - debug: iogo.0 redis pmessage io.* io.system.adapter.shelly.0.outputCount {"val":9,"ack":true,"ts":1550093438801,"q":0,"from":"system.adapter.shelly.0","lc":1550093438801}
2019-02-13 22:30:39.805 - debug: iogo.0 redis pmessage io.* io.system.adapter.cloud.0.alive {"val":true,"ack":true,"ts":1550093439803,"q":0,"from":"system.adapter.cloud.0","lc":1550023585494}
2019-02-13 22:30:39.807 - debug: iogo.0 redis pmessage io.* io.system.adapter.cloud.0.connected {"val":true,"ack":true,"ts":1550093439804,"q":0,"from":"system.adapter.cloud.0","lc":1550023585495}
2019-02-13 22:30:39.808 - debug: iogo.0 redis pmessage io.* io.system.adapter.cloud.0.memRss {"val":40.06,"ack":true,"ts":1550093439805,"q":0,"from":"system.adapter.cloud.0","lc":1550093439805}
2019-02-13 22:30:39.810 - debug: iogo.0 redis pmessage io.* io.system.adapter.cloud.0.memHeapTotal {"val":15.03,"ack":true,"ts":1550093439806,"q":0,"from":"system.adapter.cloud.0","lc":1550093394799}
2019-02-13 22:30:39.812 - debug: iogo.0 redis pmessage io.* io.system.adapter.cloud.0.memHeapUsed {"val":11.62,"ack":true,"ts":1550093439807,"q":0,"from":"system.adapter.cloud.0","lc":1550093439807}
2019-02-13 22:30:39.813 - debug: iogo.0 redis pmessage io.* io.system.adapter.cloud.0.uptime {"val":69857,"ack":true,"ts":1550093439808,"q":0,"from":"system.adapter.cloud.0","lc":1550093439808}
2019-02-13 22:30:39.814 - debug: iogo.0 redis pmessage io.* io.system.adapter.cloud.0.inputCount {"val":1,"ack":true,"ts":1550093439809,"q":0,"from":"system.adapter.cloud.0","lc":1550093439809}
2019-02-13 22:30:39.815 - debug: iogo.0 redis pmessage io.* io.system.adapter.cloud.0.outputCount {"val":8,"ack":true,"ts":1550093439810,"q":0,"from":"system.adapter.cloud.0","lc":1550093439810}
2019-02-13 22:30:40.110 - debug: iogo.0 redis pmessage io.* io.system.adapter.admin.0.alive {"val":true,"ack":true,"ts":1550093440109,"q":0,"from":"system.adapter.admin.0","lc":1550023558992}
2019-02-13 22:30:40.113 - debug: iogo.0 redis pmessage io.* io.system.adapter.admin.0.connected {"val":true,"ack":true,"ts":1550093440111,"q":0,"from":"system.adapter.admin.0","lc":1550023558995}
2019-02-13 22:30:40.116 - debug: iogo.0 redis pmessage io.* io.system.adapter.admin.0.memRss {"val":46.64,"ack":true,"ts":1550093440113,"q":0,"from":"system.adapter.admin.0","lc":1550093440113}
2019-02-13 22:30:40.117 - debug: iogo.0 redis pmessage io.* io.system.adapter.admin.0.memHeapTotal {"val":22.03,"ack":true,"ts":1550093440115,"q":0,"from":"system.adapter.admin.0","lc":1550093425108}
2019-02-13 22:30:40.119 - debug: iogo.0 redis pmessage io.* io.system.adapter.admin.0.memHeapUsed {"val":18.73,"ack":true,"ts":1550093440117,"q":0,"from":"system.adapter.admin.0","lc":1550093440117}
2019-02-13 22:30:40.124 - debug: iogo.0 redis pmessage io.* io.system.adapter.admin.0.uptime {"val":69885,"ack":true,"ts":1550093440119,"q":0,"from":"system.adapter.admin.0","lc":1550093440119}
2019-02-13 22:30:40.125 - debug: iogo.0 redis pmessage io.* io.system.adapter.admin.0.inputCount {"val":85,"ack":true,"ts":1550093440121,"q":0,"from":"system.adapter.admin.0","lc":1550093440121}
2019-02-13 22:30:40.127 - debug: iogo.0 redis pmessage io.* io.system.adapter.admin.0.outputCount {"val":9,"ack":true,"ts":1550093440123,"q":0,"from":"system.adapter.admin.0","lc":1550093440123}
2019-02-13 22:30:41.432 - debug: iogo.0 redis pmessage io.* io.system.adapter.iogo.0.alive {"val":true,"ack":true,"ts":1550093441421,"q":0,"from":"system.adapter.iogo.0","lc":1550093426590}
2019-02-13 22:30:41.433 - debug: iogo.0 redis pmessage io.* io.system.adapter.iogo.0.connected {"val":true,"ack":true,"ts":1550093441422,"q":0,"from":"system.adapter.iogo.0","lc":1550093426591}
2019-02-13 22:30:41.434 - debug: iogo.0 redis pmessage io.* io.system.adapter.iogo.0.memRss {"val":54.26,"ack":true,"ts":1550093441424,"q":0,"from":"system.adapter.iogo.0","lc":1550093441424}
2019-02-13 22:30:41.435 - debug: iogo.0 redis pmessage io.* io.system.adapter.iogo.0.memHeapTotal {"val":35.44,"ack":true,"ts":1550093441425,"q":0,"from":"system.adapter.iogo.0","lc":1550093441425}
2019-02-13 22:30:41.435 - debug: iogo.0 redis pmessage io.* io.system.adapter.iogo.0.memHeapUsed {"val":19.05,"ack":true,"ts":1550093441426,"q":0,"from":"system.adapter.iogo.0","lc":1550093441426}
2019-02-13 22:30:41.436 - debug: iogo.0 redis pmessage io.* io.system.adapter.iogo.0.uptime {"val":18,"ack":true,"ts":1550093441427,"q":0,"from":"system.adapter.iogo.0","lc":1550093441427}
2019-02-13 22:30:41.437 - debug: iogo.0 redis pmessage io.* io.system.adapter.iogo.0.inputCount {"val":99,"ack":true,"ts":1550093441428,"q":0,"from":"system.adapter.iogo.0","lc":1550093441428}
2019-02-13 22:30:41.437 - debug: iogo.0 redis pmessage io.* io.system.adapter.iogo.0.outputCount {"val":10,"ack":true,"ts":1550093441429,"q":0,"from":"system.adapter.iogo.0","lc":1550093441429}
2019-02-13 22:30:42.046 - debug: iogo.0 redis pmessage io.* io.shelly.0.SHSW-1#554877#1.rssi {"val":-70,"ack":true,"ts":1550093442044,"q":0,"from":"system.adapter.shelly.0","lc":1550093442044}
2019-02-13 22:30:42.594 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.alive {"val":true,"ack":true,"ts":1550093442593,"q":0,"from":"system.host.ioBroker","lc":1550023555058}
2019-02-13 22:30:42.596 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.load {"val":0.43,"ack":true,"ts":1550093442594,"q":0,"from":"system.host.ioBroker","lc":1550093442594}
2019-02-13 22:30:42.598 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.mem {"val":17,"ack":true,"ts":1550093442596,"q":0,"from":"system.host.ioBroker","lc":1550093442596}
2019-02-13 22:30:42.600 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.memRss {"val":76.46,"ack":true,"ts":1550093442598,"q":0,"from":"system.host.ioBroker","lc":1550093442598}
2019-02-13 22:30:42.602 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.memHeapTotal {"val":44.6,"ack":true,"ts":1550093442600,"q":0,"from":"system.host.ioBroker","lc":1550093442600}
2019-02-13 22:30:42.603 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.memHeapUsed {"val":37.32,"ack":true,"ts":1550093442602,"q":0,"from":"system.host.ioBroker","lc":1550093442602}
2019-02-13 22:30:42.605 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.uptime {"val":69890,"ack":true,"ts":1550093442604,"q":0,"from":"system.host.ioBroker","lc":1550093442604}
2019-02-13 22:30:42.607 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.freemem {"val":154,"ack":true,"ts":1550093442605,"q":0,"from":"system.host.ioBroker","lc":1550093442605}
2019-02-13 22:30:42.609 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.inputCount {"val":13,"ack":true,"ts":1550093442607,"q":0,"from":"system.host.ioBroker","lc":1550093412569}
2019-02-13 22:30:42.611 - debug: iogo.0 redis pmessage io.* io.system.host.ioBroker.outputCount {"val":10,"ack":true,"ts":1550093442609,"q":0,"from":"system.host.ioBroker","lc":1550093442609}
2019-02-13 22:30:45.597 - debug: iogo.0 redis pmessage io.* io.system.adapter.web.0.alive {"val":true,"ack":true,"ts":1550093445597,"q":0,"from":"system.adapter.web.0","lc":1550023577289}
2019-02-13 22:30:45.599 - debug: iogo.0 redis pmessage io.* io.system.adapter.web.0.connected {"val":true,"ack":true,"ts":1550093445597,"q":0,"from":"system.adapter.web.0","lc":1550023577290}
2019-02-13 22:30:45.600 - debug: iogo.0 redis pmessage io.* io.system.adapter.web.0.memRss {"val":51.25,"ack":true,"ts":1550093445597,"q":0,"from":"system.adapter.web.0","lc":1550093430591}
2019-02-13 22:30:45.601 - debug: iogo.0 redis pmessage io.* io.system.adapter.web.0.memHeapTotal {"val":14.53,"ack":true,"ts":1550093445598,"q":0,"from":"system.adapter.web.0","lc":1550091044112}
2019-02-13 22:30:45.602 - debug: iogo.0 redis pmessage io.* io.system.adapter.web.0.memHeapUsed {"val":10.93,"ack":true,"ts":1550093445598,"q":0,"from":"system.adapter.web.0","lc":1550093445598}
2019-02-13 22:30:45.603 - debug: iogo.0 redis pmessage io.* io.system.adapter.web.0.uptime {"val":69871,"ack":true,"ts":1550093445598,"q":0,"from":"system.adapter.web.0","lc":1550093445598}
2019-02-13 22:30:45.603 - debug: iogo.0 redis pmessage io.* io.system.adapter.web.0.inputCount {"val":0,"ack":true,"ts":1550093445599,"q":0,"from":"system.adapter.web.0","lc":1550093445599}
2019-02-13 22:30:45.603 - debug: iogo.0 redis pmessage io.* io.system.adapter.web.0.outputCount {"val":8,"ack":true,"ts":1550093445599,"q":0,"from":"system.adapter.web.0","lc":1550090143506}
2019-02-13 22:30:46.502 - info: cloud.0 User accessed from cloud
2019-02-13 22:30:46.506 - debug: iogo.0 redis pmessage io.* io.cloud.0.info.userOnCloud {"val":true,"ack":true,"ts":1550093446504,"q":0,"from":"system.adapter.cloud.0","lc":1550093446504}
2019-02-13 22:30:46.647 - debug: iogo.0 redis pmessage io.* io.system.adapter.socketio.0.alive {"val":true,"ack":true,"ts":1550093446645,"q":0,"from":"system.adapter.socketio.0","lc":1550023562966}
2019-02-13 22:30:46.652 - debug: iogo.0 redis pmessage io.* io.system.adapter.socketio.0.connected {"val":true,"ack":true,"ts":1550093446646,"q":0,"from":"system.adapter.socketio.0","lc":1550023562969}
2019-02-13 22:30:46.654 - debug: iogo.0 redis pmessage io.* io.system.adapter.socketio.0.memRss {"val":47.51,"ack":true,"ts":1550093446647,"q":0,"from":"system.adapter.socketio.0","lc":1550093401634}
2019-02-13 22:30:46.655 - debug: iogo.0 redis pmessage io.* io.system.adapter.socketio.0.memHeapTotal {"val":12.53,"ack":true,"ts":1550093446647,"q":0,"from":"system.adapter.socketio.0","lc":1550037161790}
2019-02-13 22:30:46.656 - debug: iogo.0 redis pmessage io.* io.system.adapter.socketio.0.memHeapUsed {"val":9.7,"ack":true,"ts":1550093446648,"q":0,"from":"system.adapter.socketio.0","lc":1550093446648}
2019-02-13 22:30:46.656 - debug: iogo.0 redis pmessage io.* io.system.adapter.socketio.0.uptime {"val":69888,"ack":true,"ts":1550093446648,"q":0,"from":"system.adapter.socketio.0","lc":1550093446648}
2019-02-13 22:30:46.657 - debug: iogo.0 redis pmessage io.* io.system.adapter.socketio.0.inputCount {"val":0,"ack":true,"ts":1550093446649,"q":0,"from":"system.adapter.socketio.0","lc":1550093446649}
2019-02-13 22:30:46.658 - debug: iogo.0 redis pmessage io.* io.system.adapter.socketio.0.outputCount {"val":8,"ack":true,"ts":1550093446649,"q":0,"from":"system.adapter.socketio.0","lc":1550023592893}
2019-02-13 22:30:47.279 - debug: iogo.0 redis pmessage io.* io.system.adapter.ebus.0.alive {"val":true,"ack":true,"ts":1550093447275,"q":0,"from":"system.adapter.ebus.0","lc":1550093402257}
2019-02-13 22:30:47.281 - debug: iogo.0 redis pmessage io.* io.system.adapter.ebus.0.connected {"val":true,"ack":true,"ts":1550093447276,"q":0,"from":"system.adapter.ebus.0","lc":1550093402258}
2019-02-13 22:30:47.283 - debug: iogo.0 redis pmessage io.* io.system.adapter.ebus.0.memRss {"val":34.77,"ack":true,"ts":1550093447278,"q":0,"from":"system.adapter.ebus.0","lc":1550093432260}
2019-02-13 22:30:47.284 - debug: iogo.0 redis pmessage io.* io.system.adapter.ebus.0.memHeapTotal {"val":13.53,"ack":true,"ts":1550093447279,"q":0,"from":"system.adapter.ebus.0","lc":1550093432261}
2019-02-13 22:30:47.285 - debug: iogo.0 redis pmessage io.* io.system.adapter.ebus.0.memHeapUsed {"val":9.8,"ack":true,"ts":1550093447280,"q":0,"from":"system.adapter.ebus.0","lc":1550093447280}
2019-02-13 22:30:47.288 - debug: iogo.0 redis pmessage io.* io.system.adapter.ebus.0.uptime {"val":47,"ack":true,"ts":1550093447281,"q":0,"from":"system.adapter.ebus.0","lc":1550093447281}
2019-02-13 22:30:47.289 - debug: iogo.0 redis pmessage io.* io.system.adapter.ebus.0.inputCount {"val":0,"ack":true,"ts":1550093447283,"q":0,"from":"system.adapter.ebus.0","lc":1550093447283}
2019-02-13 22:30:47.289 - debug: iogo.0 redis pmessage io.* io.system.adapter.ebus.0.outputCount {"val":8,"ack":true,"ts":1550093447285,"q":0,"from":"system.adapter.ebus.0","lc":1550093432266}
2019-02-13 22:30:47.892 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.0.alive {"val":true,"ack":true,"ts":1550093447890,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550023570838}
2019-02-13 22:30:47.895 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.0.connected {"val":true,"ack":true,"ts":1550093447891,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550023570839}
2019-02-13 22:30:47.896 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.0.memRss {"val":44.09,"ack":true,"ts":1550093447891,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550093417883}
2019-02-13 22:30:47.897 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.0.memHeapTotal {"val":21.03,"ack":true,"ts":1550093447892,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550060715601}
2019-02-13 22:30:47.898 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.0.memHeapUsed {"val":10.66,"ack":true,"ts":1550093447893,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550093447893}
2019-02-13 22:30:47.899 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.0.uptime {"val":69880,"ack":true,"ts":1550093447893,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550093447893}
2019-02-13 22:30:47.901 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.0.inputCount {"val":0,"ack":true,"ts":1550093447894,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550093447894}
2019-02-13 22:30:47.902 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.0.outputCount {"val":8,"ack":true,"ts":1550093447894,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550093432886}
2019-02-13 22:30:51.212 - debug: iogo.0 redis pmessage io.* io.system.adapter.influxdb.0.alive {"val":true,"ack":true,"ts":1550093451207,"q":0,"from":"system.adapter.influxdb.0","lc":1550023581735}
2019-02-13 22:30:51.217 - debug: iogo.0 redis pmessage io.* io.system.adapter.influxdb.0.connected {"val":true,"ack":true,"ts":1550093451208,"q":0,"from":"system.adapter.influxdb.0","lc":1550023581736}
2019-02-13 22:30:51.218 - debug: iogo.0 redis pmessage io.* io.system.adapter.influxdb.0.memRss {"val":57.46,"ack":true,"ts":1550093451208,"q":0,"from":"system.adapter.influxdb.0","lc":1550093451208}
2019-02-13 22:30:51.219 - debug: iogo.0 redis pmessage io.* io.system.adapter.influxdb.0.memHeapTotal {"val":23.07,"ack":true,"ts":1550093451208,"q":0,"from":"system.adapter.influxdb.0","lc":1550091980835}
2019-02-13 22:30:51.220 - debug: iogo.0 redis pmessage io.* io.system.adapter.influxdb.0.memHeapUsed {"val":15.56,"ack":true,"ts":1550093451209,"q":0,"from":"system.adapter.influxdb.0","lc":1550093451209}
2019-02-13 22:30:51.221 - debug: iogo.0 redis pmessage io.* io.system.adapter.influxdb.0.uptime {"val":69872,"ack":true,"ts":1550093451209,"q":0,"from":"system.adapter.influxdb.0","lc":1550093451209}
2019-02-13 22:30:51.221 - debug: iogo.0 redis pmessage io.* io.system.adapter.influxdb.0.inputCount {"val":100,"ack":true,"ts":1550093451209,"q":0,"from":"system.adapter.influxdb.0","lc":1550093451209}
2019-02-13 22:30:51.222 - debug: iogo.0 redis pmessage io.* io.system.adapter.influxdb.0.outputCount {"val":8,"ack":true,"ts":1550093451210,"q":0,"from":"system.adapter.influxdb.0","lc":1550023686721}
2019-02-13 22:30:51.770 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.1.alive {"val":true,"ack":true,"ts":1550093451764,"q":0,"from":"system.adapter.hm-rpc.1","lc":1550023573675}
2019-02-13 22:30:51.771 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.1.connected {"val":true,"ack":true,"ts":1550093451764,"q":0,"from":"system.adapter.hm-rpc.1","lc":1550023573676}
2019-02-13 22:30:51.772 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.1.memRss {"val":55.23,"ack":true,"ts":1550093451765,"q":0,"from":"system.adapter.hm-rpc.1","lc":1550093421730}
2019-02-13 22:30:51.773 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.1.memHeapTotal {"val":18.53,"ack":true,"ts":1550093451766,"q":0,"from":"system.adapter.hm-rpc.1","lc":1550086998586}
2019-02-13 22:30:51.774 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.1.memHeapUsed {"val":10.91,"ack":true,"ts":1550093451766,"q":0,"from":"system.adapter.hm-rpc.1","lc":1550093451766}
2019-02-13 22:30:51.774 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.1.uptime {"val":69881,"ack":true,"ts":1550093451767,"q":0,"from":"system.adapter.hm-rpc.1","lc":1550093451767}
2019-02-13 22:30:51.775 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.1.inputCount {"val":0,"ack":true,"ts":1550093451768,"q":0,"from":"system.adapter.hm-rpc.1","lc":1550093451768}
2019-02-13 22:30:51.776 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rpc.1.outputCount {"val":8,"ack":true,"ts":1550093451768,"q":0,"from":"system.adapter.hm-rpc.1","lc":1550093436745}
2019-02-13 22:30:52.227 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rega.0.alive {"val":true,"ack":true,"ts":1550093452225,"q":0,"from":"system.adapter.hm-rega.0","lc":1550053581043}
2019-02-13 22:30:52.229 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rega.0.connected {"val":true,"ack":true,"ts":1550093452225,"q":0,"from":"system.adapter.hm-rega.0","lc":1550053581045}
2019-02-13 22:30:52.231 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rega.0.memRss {"val":53.71,"ack":true,"ts":1550093452226,"q":0,"from":"system.adapter.hm-rega.0","lc":1550093437222}
2019-02-13 22:30:52.232 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rega.0.memHeapTotal {"val":17.03,"ack":true,"ts":1550093452227,"q":0,"from":"system.adapter.hm-rega.0","lc":1550092866945}
2019-02-13 22:30:52.232 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rega.0.memHeapUsed {"val":12.27,"ack":true,"ts":1550093452227,"q":0,"from":"system.adapter.hm-rega.0","lc":1550093452227}
2019-02-13 22:30:52.234 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rega.0.uptime {"val":39873,"ack":true,"ts":1550093452228,"q":0,"from":"system.adapter.hm-rega.0","lc":1550093452228}
2019-02-13 22:30:52.235 - debug: iogo.0 redis pmessage io.* 
io.system.adapter.hm-rega.0.inputCount {"val":0,"ack":true,"ts":1550093452228,"q":0,"from":"system.adapter.hm-rega.0","lc":1550093452228} 2019-02-13 22:30:52.235 - debug: iogo.0 redis pmessage io.* io.system.adapter.hm-rega.0.outputCount {"val":8,"ack":true,"ts":1550093452229,"q":0,"from":"system.adapter.hm-rega.0","lc":1550093452229} 2019-02-13 22:30:52.261 - debug: iogo.0 redis pmessage io.* io.shelly.0.SHSW-1#554877#1.rssi {"val":-69,"ack":true,"ts":1550093452259,"q":0,"from":"system.adapter.shelly.0","lc":1550093452259} 2019-02-13 22:30:52.440 - debug: iogo.0 redis pmessage io.* io.hm-rpc.0.OEQ0349245.1.TEMPERATURE {"val":0.9,"ack":true,"ts":1550093452438,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550093331718} 2019-02-13 22:30:52.444 - debug: iogo.0 send state: hm-rpc.0.OEQ0349245.1.TEMPERATURE state.val:0.9 stateValues[id]:undefined state.from:-1 2019-02-13 22:30:52.445 - debug: iogo.0 redis pmessage io.* io.hm-rpc.0.OEQ0349245.1.HUMIDITY {"val":79,"ack":true,"ts":1550093452439,"q":0,"from":"system.adapter.hm-rpc.0","lc":1550091979910} 2019-02-13 22:30:52.446 - debug: iogo.0 send state: hm-rpc.0.OEQ0349245.1.HUMIDITY state.val:79 stateValues[id]:undefined state.from:-1 -

@crunchip said in [Projekt] ioGo # Native Android App:

@nisio the number of rooms and functions doesn't match

Please post the RAW of one of the missing enum objects.

duplicate entries for rooms and functions

I thought that only happened for @MGK. What does it look like on your side? Please post the RAW of one of the surplus objects here as well.

icons don't move along when reordering,

Please post screenshots.

-

@nisio

I'm slowly getting the hang of it, even though devices can't be grouped yet. Dimming and so on works too, great! However, I also have a few devices in your app that definitely have no room and no function assigned in ioBroker (or I'm not seeing it), e.g. a Sonoff S20, a Magic Home LED strip, or an Android TV box.

Are settings still being pulled from somewhere that I could change?

PS: I think that since the last update a state is also written back. PERFECT!

Thanks and regards

-

@nisio said in [Projekt] ioGo # Native Android App:

@MGK 814 states is quite a lot. What does the ioBroker log show while the states are being loaded? Once the message "database initialized with xyz states" appears, are all values in the app still null afterwards?

I posted the log above.

-

@nisio I'm not at home right now, and I have to admit to my shame that I don't currently know how to get screenshots from my phone into this thread 😫

-

@MGK said in [Projekt] ioGo # Native Android App:

@nisio said in [Projekt] ioGo # Native Android App:

@MGK 814 states is quite a lot. What does the ioBroker log show while the states are being loaded? Once the message "database initialized with xyz states" appears, are all values in the app still null afterwards?

I posted the log above.

Hehe, "above" is "below" for me.

I suspect the cause is that the order in which enums, objects, and states are synchronized isn't guaranteed.

I've now forced the enum synchronization to always run as the first sync.
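To illustrate the ordering idea, here is a minimal sketch. It is not the adapter's actual code: the function names `sync_enums`, `sync_objects`, and `sync_states` are hypothetical, and the delays only simulate uploads of different durations. It contrasts starting all three syncs at once with waiting for the enum sync to finish before the other two start.

```python
import asyncio

# Hypothetical sync steps; the names and delays are illustrative only
# and do not reflect the ioGo adapter's real implementation.
async def sync_enums(log):
    await asyncio.sleep(0.02)  # simulated upload, deliberately the slowest
    log.append("enums")

async def sync_objects(log):
    await asyncio.sleep(0.01)
    log.append("objects")

async def sync_states(log):
    await asyncio.sleep(0)
    log.append("states")

async def sync_unordered():
    """Start all three syncs together; the completion order is not guaranteed."""
    log = []
    await asyncio.gather(sync_enums(log), sync_objects(log), sync_states(log))
    return log

async def sync_enums_first():
    """Wait for the enum sync to finish before starting the other two."""
    log = []
    await sync_enums(log)
    await asyncio.gather(sync_objects(log), sync_states(log))
    return log

if __name__ == "__main__":
    print("unordered:  ", asyncio.run(sync_unordered()))
    print("enums first:", asyncio.run(sync_enums_first()))
```

In the second variant the enums are always complete before any object or state arrives, regardless of how long the individual uploads take.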

v0.3.3 is on its way to GitHub right now. Can you install from GitHub?

-

@nisio oh, and maybe one more improvement: it should be possible to change the order manually within the app, perhaps by dragging.

@Gerni said in [Projekt] ioGo # Native Android App:

@nisio oh, and maybe one more improvement: it should be possible to change the order manually within the app, perhaps by dragging.

That already works for rooms and functions: long-press, then drag.

It doesn't work for states yet.

-

@nisio I suspect that by default all rooms and functions that exist are taken over in their original form, and that the duplicate entries are the ones I changed manually. The originals nevertheless stay in as well.

-

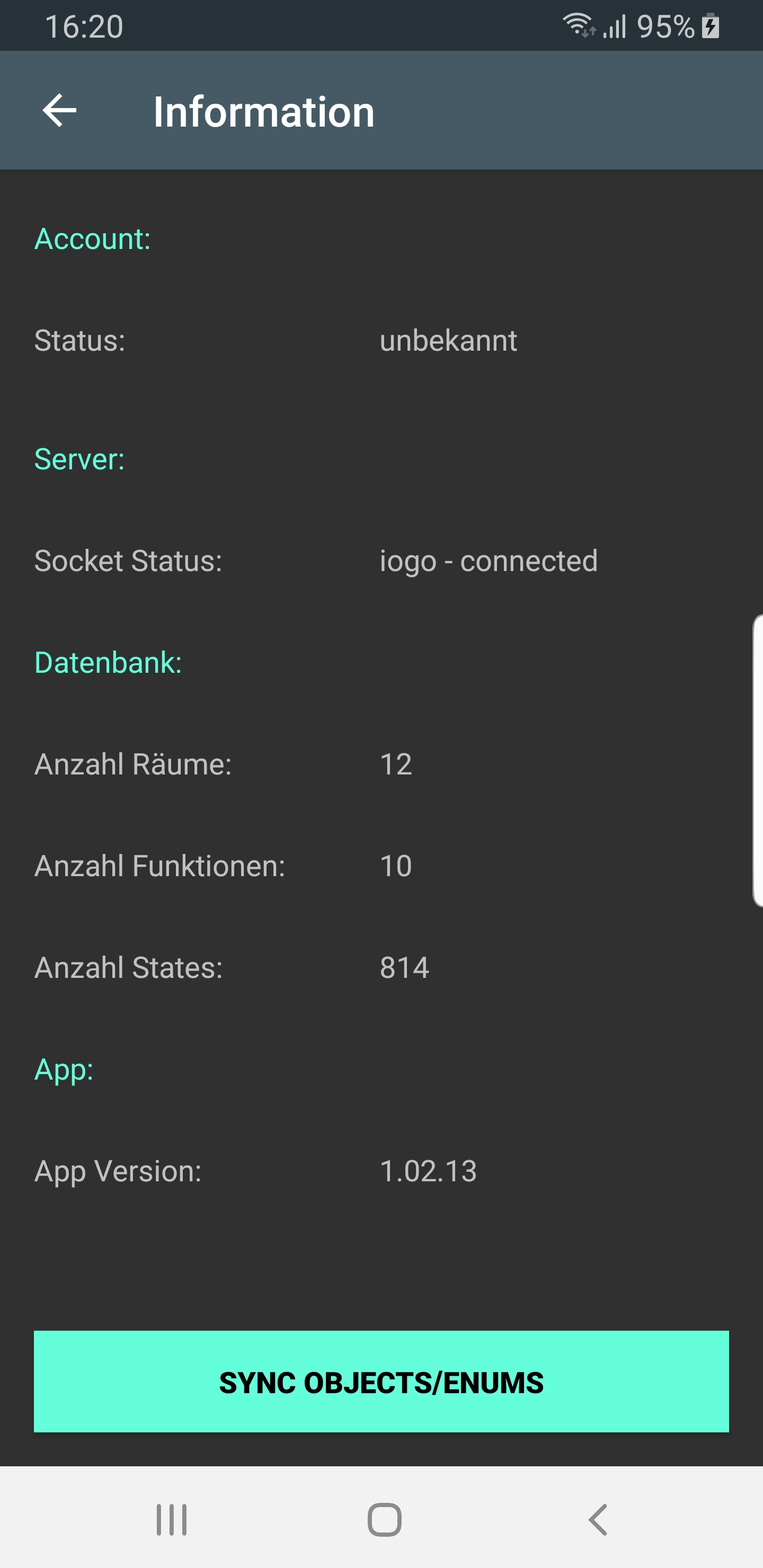

@crunchip that should be fixed by now in the current version (App v1.02.13 / Adapter v0.3.2). If possible, please test whether it still occurs after a fresh install.

-

@nisio so that's the reason 🤔 my app version is 1.02.12

I uninstalled and reinstalled it yesterday.

-

@crunchip I meant v1.02.13; it's available as a beta in the Play Store.

-

@nisio OK, I'll try it right away.

-

@nisio current status:

The number of rooms and functions is now correct.

What is still broken is that the icons shift when reordering.

This only happens, though, when the list includes a room or function that has no icon assigned. After a synchronization, the order is back to the original.

What's the deal with visible/not visible and favorites?

I can't select anything there.