
iobroker suddenly unreachable or hard to reach

-

Here is the iob diag output in the requested form:

======== Start marking the full check here ========= Skript v.2023-10-10 *** BASE SYSTEM *** Static hostname: rpi4-iob Icon name: computer Operating System: Debian GNU/Linux 11 (bullseye) Kernel: Linux 6.1.21-v8+ Architecture: arm64 Model : Raspberry Pi 4 Model B Rev 1.5 Docker : false Virtualization : none Kernel : aarch64 Userland : arm64 Systemuptime and Load: 17:39:08 up 23:41, 1 user, load average: 3.13, 3.31, 4.20 CPU threads: 4 *** RASPBERRY THROTTLING *** Current issues: No throttling issues detected. Previously detected issues: ~ Under-voltage has occurred ~ Arm frequency capping has occurred ~ Throttling has occurred ~ Soft temperature limit has occurred *** Time and Time Zones *** Local time: Wed 2024-03-13 17:39:08 CET Universal time: Wed 2024-03-13 16:39:08 UTC RTC time: n/a Time zone: Europe/Berlin (CET, +0100) System clock synchronized: yes NTP service: active RTC in local TZ: no *** User and Groups *** pi /home/pi pi adm dialout cdrom sudo audio video plugdev games users input render netdev gpio i2c spi iobroker *** X-Server-Setup *** X-Server: false Desktop: Terminal: tty Boot Target: multi-user.target *** MEMORY *** total used free shared buff/cache available Mem: 3.8G 2.7G 285M 0.0K 762M 1.4G Swap: 99M 10M 89M Total: 3.9G 2.8G 374M 3793 M total memory 2745 M used memory 2130 M active memory 1198 M inactive memory 285 M free memory 52 M buffer memory 710 M swap cache 99 M total swap 10 M used swap 89 M free swap Raspberry only: oom events: 0 lifetime oom required: 0 Mbytes total time in oom handler: 0 ms max time spent in oom handler: 0 ms *** FAILED SERVICES *** UNIT LOAD ACTIVE SUB DESCRIPTION 0 loaded units listed. 
*** FILESYSTEM *** Filesystem Type Size Used Avail Use% Mounted on /dev/root ext4 459G 24G 417G 6% / devtmpfs devtmpfs 1.7G 0 1.7G 0% /dev tmpfs tmpfs 1.9G 0 1.9G 0% /dev/shm tmpfs tmpfs 759M 1.1M 758M 1% /run tmpfs tmpfs 5.0M 4.0K 5.0M 1% /run/lock /dev/sda1 vfat 255M 31M 225M 13% /boot tmpfs tmpfs 380M 0 380M 0% /run/user/1000 Messages concerning ext4 filesystem in dmesg: [Tue Mar 12 17:58:07 2024] Kernel command line: coherent_pool=1M 8250.nr_uarts=0 snd_bcm2835.enable_headphones=0 snd_bcm2835.enable_headphones=1 snd_bcm2835.enable_hdmi=1 snd_bcm2835.enable_hdmi=0 smsc95xx.macaddr=D8:3A:DD:0A:52:C5 vc_mem.mem_base=0x3eb00000 vc_mem.mem_size=0x3ff00000 console=ttyS0,115200 console=tty1 root=PARTUUID=f052dfb4-02 rootfstype=ext4 fsck.repair=yes rootwait [Tue Mar 12 17:58:09 2024] EXT4-fs (sda2): INFO: recovery required on readonly filesystem [Tue Mar 12 17:58:09 2024] EXT4-fs (sda2): write access will be enabled during recovery [Tue Mar 12 17:58:09 2024] EXT4-fs (sda2): orphan cleanup on readonly fs [Tue Mar 12 17:58:09 2024] EXT4-fs (sda2): 3 orphan inodes deleted [Tue Mar 12 17:58:09 2024] EXT4-fs (sda2): recovery complete [Tue Mar 12 17:58:09 2024] EXT4-fs (sda2): mounted filesystem with ordered data mode. Quota mode: none. [Tue Mar 12 17:58:09 2024] VFS: Mounted root (ext4 filesystem) readonly on device 8:2. [Tue Mar 12 17:58:11 2024] EXT4-fs (sda2): re-mounted. Quota mode: none. Show mounted filesystems \(real ones only\): TARGET SOURCE FSTYPE OPTIONS / /dev/sda2 ext4 rw,noatime `-/boot /dev/sda1 vfat rw,relatime,fmask=0022,dmask=0022,codepage=437,iocharset=ascii,shortname=mixed,errors=remount-ro Files in neuralgic directories: /var: 12G /var/ 11G /var/log 4.0G /var/log/journal/93e81a7a4f294d7ca561dd2805eb2080 4.0G /var/log/journal 998M /var/lib Archived and active journals take up 3.9G in the file system. 
/opt/iobroker/backups: 3.2G /opt/iobroker/backups/ /opt/iobroker/iobroker-data: 291M /opt/iobroker/iobroker-data/ 159M /opt/iobroker/iobroker-data/files 76M /opt/iobroker/iobroker-data/backup-objects 57M /opt/iobroker/iobroker-data/files/javascript.admin 38M /opt/iobroker/iobroker-data/files/javascript.admin/static The five largest files in iobroker-data are: 40M /opt/iobroker/iobroker-data/states.jsonl 22M /opt/iobroker/iobroker-data/files/web.admin/static/js/main.c05ba1d3.js.map 22M /opt/iobroker/iobroker-data/files/modbus.admin/static/js/main.578d79d9.js.map 16M /opt/iobroker/iobroker-data/objects.jsonl 8.8M /opt/iobroker/iobroker-data/files/modbus.admin/static/js/main.578d79d9.js USB-Devices by-id: USB-Sticks - Avoid direct links to /dev/* in your adapter setups, please always prefer the links 'by-id': find: '/dev/serial/by-id/': No such file or directory *** NodeJS-Installation *** /home/iobroker/.diag.sh: line 277: nodejs: command not found /usr/bin/node v18.16.0 /usr/bin/npm 9.5.1 /usr/bin/npx 9.5.1 /usr/bin/corepack 0.17.0 /home/iobroker/.diag.sh: line 288: nodejs: command not found *** nodejs is NOT correctly installed *** nodejs: Installed: 18.16.0-deb-1nodesource1 Candidate: 18.17.1-deb-1nodesource1 Version table: 18.17.1-deb-1nodesource1 500 500 https://deb.nodesource.com/node_18.x bullseye/main arm64 Packages *** 18.16.0-deb-1nodesource1 100 100 /var/lib/dpkg/status 12.22.12~dfsg-1~deb11u4 500 500 http://deb.debian.org/debian bullseye/main arm64 Packages 500 http://security.debian.org/debian-security bullseye-security/main arm64 Packages Temp directories causing npm8 problem: 0 No problems detected Errors in npm tree: *** ioBroker-Installation *** ioBroker Status /opt/iobroker/node_modules/standard-as-callback/built/index.js:6 throw e; ^ Error: Connection is closed. 
at close (/opt/iobroker/node_modules/ioredis/built/redis/event_handler.js:184:25) at Socket.<anonymous> (/opt/iobroker/node_modules/ioredis/built/redis/event_handler.js:151:20) at Object.onceWrapper (node:events:628:26) at Socket.emit (node:events:513:28) at TCP.<anonymous> (node:net:322:12) Emitted 'error' event on ScanStream instance at: at /opt/iobroker/node_modules/ioredis/built/ScanStream.js:38:22 at tryCatcher (/opt/iobroker/node_modules/standard-as-callback/built/utils.js:12:23) at /opt/iobroker/node_modules/standard-as-callback/built/index.js:33:51 at process.processTicksAndRejections (node:internal/process/task_queues:95:5) Node.js v18.16.0 Core adapters versions js-controller: 5.0.12 admin: 6.13.16 javascript: 7.8.0 Adapters from github: 0 Adapter State Cannot read system.config: undefined (OK when migrating or restoring) /opt/iobroker/node_modules/standard-as-callback/built/index.js:6 throw e; ^ Error: Connection is closed. at Redis.sendCommand (/opt/iobroker/node_modules/ioredis/built/redis/index.js:636:24) at Redis.scan (/opt/iobroker/node_modules/ioredis/built/commander.js:122:25) at ScanStream._read (/opt/iobroker/node_modules/ioredis/built/ScanStream.js:36:41) at Readable.read (node:internal/streams/readable:496:12) at resume_ (node:internal/streams/readable:999:12) at process.processTicksAndRejections (node:internal/process/task_queues:82:21) Emitted 'error' event on ScanStream instance at: at /opt/iobroker/node_modules/ioredis/built/ScanStream.js:38:22 at tryCatcher (/opt/iobroker/node_modules/standard-as-callback/built/utils.js:12:23) at /opt/iobroker/node_modules/standard-as-callback/built/index.js:33:51 at process.processTicksAndRejections (node:internal/process/task_queues:95:5) Node.js v18.16.0 Enabled adapters with bindings /opt/iobroker/node_modules/standard-as-callback/built/index.js:6 throw e; ^ Error: Connection is closed. 
at Redis.sendCommand (/opt/iobroker/node_modules/ioredis/built/redis/index.js:636:24) at Redis.scan (/opt/iobroker/node_modules/ioredis/built/commander.js:122:25) at ScanStream._read (/opt/iobroker/node_modules/ioredis/built/ScanStream.js:36:41) at Readable.read (node:internal/streams/readable:496:12) at resume_ (node:internal/streams/readable:999:12) at process.processTicksAndRejections (node:internal/process/task_queues:82:21) Emitted 'error' event on ScanStream instance at: at /opt/iobroker/node_modules/ioredis/built/ScanStream.js:38:22 at tryCatcher (/opt/iobroker/node_modules/standard-as-callback/built/utils.js:12:23) at /opt/iobroker/node_modules/standard-as-callback/built/index.js:33:51 at process.processTicksAndRejections (node:internal/process/task_queues:95:5) Node.js v18.16.0 ioBroker-Repositories Cannot read system.config: undefined (OK when migrating or restoring) /opt/iobroker/node_modules/standard-as-callback/built/index.js:6 throw e; ^ Error: Connection is closed. at Redis.sendCommand (/opt/iobroker/node_modules/ioredis/built/redis/index.js:636:24) at Redis.scan (/opt/iobroker/node_modules/ioredis/built/commander.js:122:25) at ScanStream._read (/opt/iobroker/node_modules/ioredis/built/ScanStream.js:36:41) at Readable.read (node:internal/streams/readable:496:12) at resume_ (node:internal/streams/readable:999:12) at process.processTicksAndRejections (node:internal/process/task_queues:82:21) Emitted 'error' event on ScanStream instance at: at /opt/iobroker/node_modules/ioredis/built/ScanStream.js:38:22 at tryCatcher (/opt/iobroker/node_modules/standard-as-callback/built/utils.js:12:23) at /opt/iobroker/node_modules/standard-as-callback/built/index.js:33:51 at process.processTicksAndRejections (node:internal/process/task_queues:95:5) Node.js v18.16.0 Installed ioBroker-Instances Cannot load "custom": Connection is closed. Cannot initialize database scripts: Cannot load "custom" into objects database: Connection is closed. 
Server Cannot start inMem-objects on port 9001: Failed to lock DB file "/opt/iobroker/iobroker-data/objects.jsonl"! Objects and States Please stand by - This may take a while Objects: 0 States: 1 *** OS-Repositories and Updates *** W: GPG error: https://repos.influxdata.com/debian stretch InRelease: The following signatures couldn't be verified because the public key is not available: NO_PUBKEY D8FF8E1F7DF8B07E E: The repository 'https://repos.influxdata.com/debian stretch InRelease' is not signed. Pending Updates: 100 *** Listening Ports *** Active Internet connections (only servers) Proto Recv-Q Send-Q Local Address Foreign Address State User Inode PID/Program name tcp 0 0 127.0.0.1:8088 0.0.0.0:* LISTEN 110 14436 512/influxd tcp 0 0 192.168.0.113:42001 0.0.0.0:* LISTEN 1001 79460428 75886/io.hm-rpc.0 tcp 0 0 192.168.0.113:42010 0.0.0.0:* LISTEN 1001 79457255 75901/io.hm-rpc.1 tcp 0 0 0.0.0.0:1882 0.0.0.0:* LISTEN 1001 79462608 75997/io.shelly.0 tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 0 12067 544/sshd: /usr/sbin tcp 0 0 127.0.0.1:9000 0.0.0.0:* LISTEN 1001 79459907 75774/iobroker.js-c tcp 0 0 127.0.0.1:9001 0.0.0.0:* LISTEN 1001 79459900 75774/iobroker.js-c tcp6 0 0 :::8082 :::* LISTEN 1001 79462674 76111/io.web.0 tcp6 0 0 :::8081 :::* LISTEN 1001 79451097 75792/io.admin.0 tcp6 0 0 :::8086 :::* LISTEN 110 14472 512/influxd tcp6 0 0 :::22 :::* LISTEN 0 12069 544/sshd: /usr/sbin udp 0 0 0.0.0.0:5353 0.0.0.0:* 108 13632 394/avahi-daemon: r udp 0 0 0.0.0.0:46520 0.0.0.0:* 108 13634 394/avahi-daemon: r udp 0 0 0.0.0.0:68 0.0.0.0:* 0 12077 812/dhcpcd udp6 0 0 :::5353 :::* 108 13633 394/avahi-daemon: r udp6 0 0 :::42768 :::* 108 13635 394/avahi-daemon: r udp6 0 0 :::546 :::* 0 12193 812/dhcpcd *** Log File - Last 25 Lines *** 2024-03-13 17:41:46.384 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.13alllivevalues[openWB/graph/13alllivevalues] 2024-03-13 17:41:46.385 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.4alllivevalues[openWB/graph/4alllivevalues] 
2024-03-13 17:41:46.388 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.6alllivevalues[openWB/graph/6alllivevalues] 2024-03-13 17:41:46.389 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Uptime[openWB/system/Uptime] 2024-03-13 17:41:46.390 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Date[openWB/system/Date] 2024-03-13 17:41:46.391 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Timestamp[openWB/system/Timestamp] 2024-03-13 17:41:46.395 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.2alllivevalues[openWB/graph/2alllivevalues] 2024-03-13 17:41:46.395 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.15alllivevalues[openWB/graph/15alllivevalues] 2024-03-13 17:41:46.397 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.3alllivevalues[openWB/graph/3alllivevalues] 2024-03-13 17:41:46.400 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.11alllivevalues[openWB/graph/11alllivevalues] 2024-03-13 17:41:46.401 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Uptime[openWB/system/Uptime] 2024-03-13 17:41:46.404 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Date[openWB/system/Date] 2024-03-13 17:41:46.405 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Timestamp[openWB/system/Timestamp] 2024-03-13 17:41:46.406 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.6alllivevalues[openWB/graph/6alllivevalues] 2024-03-13 17:41:46.407 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Uptime[openWB/system/Uptime] 2024-03-13 17:41:46.410 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.12alllivevalues[openWB/graph/12alllivevalues] 2024-03-13 17:41:46.411 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.13alllivevalues[openWB/graph/13alllivevalues] 2024-03-13 17:41:46.412 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Uptime[openWB/system/Uptime] 2024-03-13 17:41:46.416 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Date[openWB/system/Date] 2024-03-13 17:41:46.417 - 
info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Timestamp[openWB/system/Timestamp] 2024-03-13 17:41:46.420 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.6alllivevalues[openWB/graph/6alllivevalues] 2024-03-13 17:41:46.421 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.8alllivevalues[openWB/graph/8alllivevalues] 2024-03-13 17:41:46.422 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.13alllivevalues[openWB/graph/13alllivevalues] 2024-03-13 17:41:46.426 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.9alllivevalues[openWB/graph/9alllivevalues] 2024-03-13 17:41:46.427 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Date[openWB/system/Date] ============ Mark until here for C&P =============

How do I repair the file system?

Is it normal for a weak power supply to start causing trouble only after about a year?


@vol907 said in iobroker suddenly unreachable or hard to reach:

Is it normal for a weak power supply to start causing trouble only after about a year?

- Parts like these age too.

- If the machine's power demand grows, at some point the power supply can no longer keep up.

More scripts, additional components such as Influx, Grafana or camera streaming, etc.
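For anyone who wants to check the power-supply flags directly rather than through the diag script: the "RASPBERRY THROTTLING" section corresponds to the bit mask that `vcgencmd get_throttled` reports on a Raspberry Pi. Below is a minimal Python sketch that decodes such a value. The function name `decode_throttled` and the sample value `0xF0000` are assumptions for illustration; the diag above only shows the decoded text, not the raw number.

```python
# Bit positions as documented for `vcgencmd get_throttled` on Raspberry Pi OS.
# Bits 0-3 describe the current state, bits 16-19 what happened since boot.
FLAGS = {
    0: "Under-voltage detected",
    1: "Arm frequency capped",
    2: "Currently throttled",
    3: "Soft temperature limit active",
    16: "Under-voltage has occurred",
    17: "Arm frequency capping has occurred",
    18: "Throttling has occurred",
    19: "Soft temperature limit has occurred",
}


def decode_throttled(raw: str) -> list[str]:
    """Decode a line such as 'throttled=0xF0000' into readable flags."""
    # Accept both 'throttled=0x...' and a bare hex value.
    value = int(raw.strip().split("=")[-1], 16)
    return [text for bit, text in FLAGS.items() if value & (1 << bit)]


if __name__ == "__main__":
    # Assumed sample value: all four "has occurred" bits set, nothing active.
    for line in decode_throttled("throttled=0xF0000"):
        print(line)
```

If the lower bits (0 to 3) show up while the system is under load, the problem is happening right now and not only in the past, which usually points more clearly at the power supply or the cable.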

Just as a note:

Is there a particular reason why expert mode is switched on?

-

at Redis.sendCommand (/opt/iobroker/node_modules/ioredis/built/redis/index.js:636:24) at Redis.scan (/opt/iobroker/node_modules/ioredis/built/commander.js:122:25) at ScanStream._read (/opt/iobroker/node_modules/ioredis/built/ScanStream.js:36:41) at Readable.read (node:internal/streams/readable:496:12) at resume_ (node:internal/streams/readable:999:12) at process.processTicksAndRejections (node:internal/process/task_queues:82:21) Emitted 'error' event on ScanStream instance at: at /opt/iobroker/node_modules/ioredis/built/ScanStream.js:38:22 at tryCatcher (/opt/iobroker/node_modules/standard-as-callback/built/utils.js:12:23) at /opt/iobroker/node_modules/standard-as-callback/built/index.js:33:51 at process.processTicksAndRejections (node:internal/process/task_queues:95:5) Node.js v18.16.0 ioBroker-Repositories Cannot read system.config: undefined (OK when migrating or restoring) /opt/iobroker/node_modules/standard-as-callback/built/index.js:6 throw e; ^ Error: Connection is closed. at Redis.sendCommand (/opt/iobroker/node_modules/ioredis/built/redis/index.js:636:24) at Redis.scan (/opt/iobroker/node_modules/ioredis/built/commander.js:122:25) at ScanStream._read (/opt/iobroker/node_modules/ioredis/built/ScanStream.js:36:41) at Readable.read (node:internal/streams/readable:496:12) at resume_ (node:internal/streams/readable:999:12) at process.processTicksAndRejections (node:internal/process/task_queues:82:21) Emitted 'error' event on ScanStream instance at: at /opt/iobroker/node_modules/ioredis/built/ScanStream.js:38:22 at tryCatcher (/opt/iobroker/node_modules/standard-as-callback/built/utils.js:12:23) at /opt/iobroker/node_modules/standard-as-callback/built/index.js:33:51 at process.processTicksAndRejections (node:internal/process/task_queues:95:5) Node.js v18.16.0 Installed ioBroker-Instances Cannot load "custom": Connection is closed. Cannot initialize database scripts: Cannot load "custom" into objects database: Connection is closed. 
Server Cannot start inMem-objects on port 9001: Failed to lock DB file "/opt/iobroker/iobroker-data/objects.jsonl"! Objects and States Please stand by - This may take a while Objects: 0 States: 1 *** OS-Repositories and Updates *** W: GPG error: https://repos.influxdata.com/debian stretch InRelease: The following signatures couldn't be verified because the public key is not available: NO_PUBKEY D8FF8E1F7DF8B07E E: The repository 'https://repos.influxdata.com/debian stretch InRelease' is not signed. Pending Updates: 100 *** Listening Ports *** Active Internet connections (only servers) Proto Recv-Q Send-Q Local Address Foreign Address State User Inode PID/Program name tcp 0 0 127.0.0.1:8088 0.0.0.0:* LISTEN 110 14436 512/influxd tcp 0 0 192.168.0.113:42001 0.0.0.0:* LISTEN 1001 79460428 75886/io.hm-rpc.0 tcp 0 0 192.168.0.113:42010 0.0.0.0:* LISTEN 1001 79457255 75901/io.hm-rpc.1 tcp 0 0 0.0.0.0:1882 0.0.0.0:* LISTEN 1001 79462608 75997/io.shelly.0 tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 0 12067 544/sshd: /usr/sbin tcp 0 0 127.0.0.1:9000 0.0.0.0:* LISTEN 1001 79459907 75774/iobroker.js-c tcp 0 0 127.0.0.1:9001 0.0.0.0:* LISTEN 1001 79459900 75774/iobroker.js-c tcp6 0 0 :::8082 :::* LISTEN 1001 79462674 76111/io.web.0 tcp6 0 0 :::8081 :::* LISTEN 1001 79451097 75792/io.admin.0 tcp6 0 0 :::8086 :::* LISTEN 110 14472 512/influxd tcp6 0 0 :::22 :::* LISTEN 0 12069 544/sshd: /usr/sbin udp 0 0 0.0.0.0:5353 0.0.0.0:* 108 13632 394/avahi-daemon: r udp 0 0 0.0.0.0:46520 0.0.0.0:* 108 13634 394/avahi-daemon: r udp 0 0 0.0.0.0:68 0.0.0.0:* 0 12077 812/dhcpcd udp6 0 0 :::5353 :::* 108 13633 394/avahi-daemon: r udp6 0 0 :::42768 :::* 108 13635 394/avahi-daemon: r udp6 0 0 :::546 :::* 0 12193 812/dhcpcd *** Log File - Last 25 Lines *** 2024-03-13 17:41:46.384 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.13alllivevalues[openWB/graph/13alllivevalues] 2024-03-13 17:41:46.385 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.4alllivevalues[openWB/graph/4alllivevalues] 
2024-03-13 17:41:46.388 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.6alllivevalues[openWB/graph/6alllivevalues] 2024-03-13 17:41:46.389 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Uptime[openWB/system/Uptime] 2024-03-13 17:41:46.390 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Date[openWB/system/Date] 2024-03-13 17:41:46.391 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Timestamp[openWB/system/Timestamp] 2024-03-13 17:41:46.395 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.2alllivevalues[openWB/graph/2alllivevalues] 2024-03-13 17:41:46.395 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.15alllivevalues[openWB/graph/15alllivevalues] 2024-03-13 17:41:46.397 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.3alllivevalues[openWB/graph/3alllivevalues] 2024-03-13 17:41:46.400 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.11alllivevalues[openWB/graph/11alllivevalues] 2024-03-13 17:41:46.401 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Uptime[openWB/system/Uptime] 2024-03-13 17:41:46.404 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Date[openWB/system/Date] 2024-03-13 17:41:46.405 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Timestamp[openWB/system/Timestamp] 2024-03-13 17:41:46.406 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.6alllivevalues[openWB/graph/6alllivevalues] 2024-03-13 17:41:46.407 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Uptime[openWB/system/Uptime] 2024-03-13 17:41:46.410 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.12alllivevalues[openWB/graph/12alllivevalues] 2024-03-13 17:41:46.411 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.13alllivevalues[openWB/graph/13alllivevalues] 2024-03-13 17:41:46.412 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Uptime[openWB/system/Uptime] 2024-03-13 17:41:46.416 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Date[openWB/system/Date] 2024-03-13 17:41:46.417 - 
info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Timestamp[openWB/system/Timestamp] 2024-03-13 17:41:46.420 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.6alllivevalues[openWB/graph/6alllivevalues] 2024-03-13 17:41:46.421 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.8alllivevalues[openWB/graph/8alllivevalues] 2024-03-13 17:41:46.422 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.13alllivevalues[openWB/graph/13alllivevalues] 2024-03-13 17:41:46.426 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.9alllivevalues[openWB/graph/9alllivevalues] 2024-03-13 17:41:46.427 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Date[openWB/system/Date] ============ Mark until here for C&P =============

How do I repair the file system?

Is it normal for a weak power supply to make itself felt only after about a year?

@vol907 said in iobroker plötzlich nicht mehr oder schwer erreichbar:

load average: 3.13, 3.31, 4.20

Far too high!

@vol907 said in iobroker plötzlich nicht mehr oder schwer erreichbar:

Under-voltage has occurred

Power supply too weak. Probably because of

@vol907 said in iobroker plötzlich nicht mehr oder schwer erreichbar:

an external SSD drive.

without its own power supply.

@vol907 said in iobroker plötzlich nicht mehr oder schwer erreichbar:

Soft temperature limit has occurred

It is getting too warm.

@vol907 said in iobroker plötzlich nicht mehr oder schwer erreichbar:

orphan cleanup on readonly fs

File system damaged; some file contents can no longer be assigned to a file.

@vol907 said in iobroker plötzlich nicht mehr oder schwer erreichbar:

nodejs is NOT correctly installed

Speaks for itself.

@vol907 said in iobroker plötzlich nicht mehr oder schwer erreichbar:

GPG error: https://repos.influxdata.com/debian stretch InRelease: The following sign

Wrong Influx repo.

Is Influx running on the same Pi?

Anything else?

-
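To put the quoted load figures in relation to the "CPU threads: 4" line of the diag, the load averages can be divided by the thread count. The following is a minimal sketch; the function name `load_per_thread` is made up for illustration, and whether a given ratio counts as "too high" for a particular setup remains a judgment call.

```python
import re


def load_per_thread(uptime_line: str, cpu_threads: int) -> list[float]:
    """Return the 1-, 5- and 15-minute load averages divided by the thread count."""
    match = re.search(
        r"load average:\s*([\d.]+),\s*([\d.]+),\s*([\d.]+)", uptime_line
    )
    if match is None:
        raise ValueError("no load average found in line")
    return [float(value) / cpu_threads for value in match.groups()]


# Line copied from the diag output in this thread.
line = "17:39:08 up 23:41, 1 user, load average: 3.13, 3.31, 4.20"
for label, ratio in zip(("1 min", "5 min", "15 min"), load_per_thread(line, 4)):
    print(f"{label}: {ratio:.2f} runnable tasks per thread")
```

A ratio close to or above 1.0 means that, on average, every thread had work queued during that interval.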

@vol907 said in iobroker plötzlich nicht mehr oder schwer erreichbar:

Is it normal for a weak power supply to make itself felt only after about a year?

- Parts like that age too.

- If the machine's power demand grows, at some point the power supply can no longer keep up.

More scripts, additional components such as Influx, Grafana, camera streaming and so on.

Just as a note:

Is there a particular reason why expert mode is switched on?

This is not ideal either:

Pending Updates: 100

and mqtt is logging itself to death as well

2024-03-13 17:41:46.384 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.13alllivevalues[openWB/graph/13alllivevalues]

2024-03-13 17:41:46.385 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.4alllivevalues[openWB/graph/4alllivevalues]

2024-03-13 17:41:46.388 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.6alllivevalues[openWB/graph/6alllivevalues]

2024-03-13 17:41:46.389 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Uptime[openWB/system/Uptime]

2024-03-13 17:41:46.390 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Date[openWB/system/Date]

2024-03-13 17:41:46.391 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Timestamp[openWB/system/Timestamp]

2024-03-13 17:41:46.395 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.2alllivevalues[openWB/graph/2alllivevalues]

2024-03-13 17:41:46.395 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.15alllivevalues[openWB/graph/15alllivevalues]

2024-03-13 17:41:46.397 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.3alllivevalues[openWB/graph/3alllivevalues]

2024-03-13 17:41:46.400 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.11alllivevalues[openWB/graph/11alllivevalues]

2024-03-13 17:41:46.401 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Uptime[openWB/system/Uptime]

2024-03-13 17:41:46.404 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Date[openWB/system/Date]

2024-03-13 17:41:46.405 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Timestamp[openWB/system/Timestamp]

2024-03-13 17:41:46.406 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.6alllivevalues[openWB/graph/6alllivevalues]

2024-03-13 17:41:46.407 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Uptime[openWB/system/Uptime]

2024-03-13 17:41:46.410 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.12alllivevalues[openWB/graph/12alllivevalues]

2024-03-13 17:41:46.411 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.13alllivevalues[openWB/graph/13alllivevalues]

2024-03-13 17:41:46.412 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Uptime[openWB/system/Uptime]

2024-03-13 17:41:46.416 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Date[openWB/system/Date]

2024-03-13 17:41:46.417 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Timestamp[openWB/system/Timestamp]

2024-03-13 17:41:46.420 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.6alllivevalues[openWB/graph/6alllivevalues]

2024-03-13 17:41:46.421 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.8alllivevalues[openWB/graph/8alllivevalues]

2024-03-13 17:41:46.422 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.13alllivevalues[openWB/graph/13alllivevalues]

2024-03-13 17:41:46.426 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.9alllivevalues[openWB/graph/9alllivevalues]

2024-03-13 17:41:46.427 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.system.Date[openWB/system/Date]

And this:

/usr/bin/node v18.16.0

/usr/bin/npm 9.5.1

/usr/bin/npx 9.5.1

/usr/bin/corepack 0.17.0

/home/iobroker/.diag.sh: line 288: nodejs: command not found

*** nodejs is NOT correctly installed ***

nodejs:

Installed: 18.16.0-deb-1nodesource1

Candidate: 18.17.1-deb-1nodesource1

Version table:

-
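The mqtt log flood noted in the post above can be quantified by counting log lines per adapter instance and log level. This is a rough sketch that assumes plain-text lines in the format shown in this thread (timestamp, level, instance, PID); the names `LOG_LINE` and `count_by_instance` are hypothetical, and real log files may need extra handling, for example for colour codes.

```python
import re
from collections import Counter

# Matches lines like:
# 2024-03-13 17:41:46.384 - info: mqtt.0 (75982) send2Server ...
LOG_LINE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})\.\d{3}\s+-\s+"
    r"(?P<level>\w+):\s+(?P<instance>\S+)\s+\(\d+\)"
)


def count_by_instance(lines):
    """Count log lines per (instance, level); lines that do not match are skipped."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line.strip())
        if match:
            counts[(match.group("instance"), match.group("level"))] += 1
    return counts


# Three lines copied from the log excerpt in this thread.
sample = [
    "2024-03-13 17:41:46.384 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.13alllivevalues[openWB/graph/13alllivevalues]",
    "2024-03-13 17:41:46.385 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.4alllivevalues[openWB/graph/4alllivevalues]",
    "2024-03-13 17:41:46.388 - info: mqtt.0 (75982) send2Server mqtt.0.openWB.graph.6alllivevalues[openWB/graph/6alllivevalues]",
]
for (instance, level), count in count_by_instance(sample).most_common():
    print(f"{instance} [{level}]: {count} lines")
```

In the excerpt from the diag, all 25 lines fall within roughly 43 milliseconds and come from mqtt.0 at info level, which is why that instance stands out.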


@vol907 said in iobroker plötzlich nicht mehr oder schwer erreichbar:

How do I repair the file system?

Not at all!

The system tried and failed.

-

@homoran said in iobroker plötzlich nicht mehr oder schwer erreichbar:

Not at all!

The system tried and failed.

???

[Tue Mar 12 17:58:09 2024] EXT4-fs (sda2): INFO: recovery required on readonly filesystem
[Tue Mar 12 17:58:09 2024] EXT4-fs (sda2): write access will be enabled during recovery
[Tue Mar 12 17:58:09 2024] EXT4-fs (sda2): orphan cleanup on readonly fs
[Tue Mar 12 17:58:09 2024] EXT4-fs (sda2): 3 orphan inodes deleted
[Tue Mar 12 17:58:09 2024] EXT4-fs (sda2): recovery complete

Three orphans apparently turned up during the repair, but in principle Linux did get the file system running again...
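The kernel messages quoted above can be condensed into a short summary. This is only a sketch that matches the message texts as they appear in this thread; the function name `summarize_ext4_recovery` is hypothetical. It reports what the kernel logged and says nothing about whether any file contents were lost.

```python
import re


def summarize_ext4_recovery(dmesg_lines):
    """Summarize EXT4 journal-recovery messages found in dmesg output."""
    summary = {
        "recovery_required": False,
        "recovery_complete": False,
        "orphans_deleted": 0,
    }
    for line in dmesg_lines:
        if "EXT4-fs" not in line:
            continue  # ignore unrelated kernel messages
        if "recovery required" in line:
            summary["recovery_required"] = True
        if "recovery complete" in line:
            summary["recovery_complete"] = True
        match = re.search(r"(\d+) orphan inodes? deleted", line)
        if match:
            summary["orphans_deleted"] += int(match.group(1))
    return summary


# Lines copied from the dmesg excerpt in this thread.
dmesg = [
    "[Tue Mar 12 17:58:09 2024] EXT4-fs (sda2): INFO: recovery required on readonly filesystem",
    "[Tue Mar 12 17:58:09 2024] EXT4-fs (sda2): write access will be enabled during recovery",
    "[Tue Mar 12 17:58:09 2024] EXT4-fs (sda2): orphan cleanup on readonly fs",
    "[Tue Mar 12 17:58:09 2024] EXT4-fs (sda2): 3 orphan inodes deleted",
    "[Tue Mar 12 17:58:09 2024] EXT4-fs (sda2): recovery complete",
]
print(summarize_ext4_recovery(dmesg))
```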

-

My guess is that one error led to the next here.

@vol907

Update the box as far as possible, pull a backup, and then do a clean reinstall. With a beefier power supply.

There is probably not much left to repair.

-

And do the "reinstall" with Bookworm, not Bullseye ...

One more question: Is there anything in the iob diag that points to a weak power supply? Or is it just an assumption that the corrupt file system was caused by a weak power supply?

-

*** RASPBERRY THROTTLING ***
Current issues: No throttling issues detected.
Previously detected issues:
~ Under-voltage has occurred
~ Arm frequency capping has occurred
~ Throttling has occurred
~ Soft temperature limit has occurred

-
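The four "has occurred" messages in the throttling section correspond to bits of the status word that `vcgencmd get_throttled` reports on a Raspberry Pi. The sketch below decodes such a value; it assumes the diag script derives its text from that command, and the value `0xf0000` is an illustrative input that matches the four reported flags, not a value taken from this thread. The names `THROTTLE_FLAGS`, `parse_vcgencmd_output` and `decode_throttled` are made up for the example.

```python
# Bit positions of the throttled status word, as documented for
# `vcgencmd get_throttled` on Raspberry Pi OS.
THROTTLE_FLAGS = {
    0: "Under-voltage detected",
    1: "Arm frequency capped",
    2: "Currently throttled",
    3: "Soft temperature limit active",
    16: "Under-voltage has occurred",
    17: "Arm frequency capping has occurred",
    18: "Throttling has occurred",
    19: "Soft temperature limit has occurred",
}


def parse_vcgencmd_output(output: str) -> int:
    """Turn a string like 'throttled=0xf0000' into an integer."""
    return int(output.strip().split("=", 1)[1], 16)


def decode_throttled(value: int) -> list[str]:
    """Return the human-readable messages for every bit set in value."""
    return [text for bit, text in THROTTLE_FLAGS.items() if value & (1 << bit)]


# Illustrative input (assumption): only the four "has occurred" bits are set.
for message in decode_throttled(parse_vcgencmd_output("throttled=0xf0000")):
    print("~", message)
```

Bits 0 to 3 describe the current state, bits 16 to 19 record events since the last boot, so "Under-voltage has occurred" is a direct hint at the power supply rather than a guess.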

Influx has been on there from the start, although the number of states being recorded keeps growing. I run Grafana on a different Pi, and there is no camera streaming at all.

Shortly before the problems started, I created a few Blockly scripts that access openWB via mqtt. Could that have increased the load that much? Two openWB instances run on two further Pis.

Some of the data ioBroker collects comes from devices located abroad. I have a FritzBox there as well, and I have connected the two via VPN.

Oh, and the RPi4 sits in a UPS from Geekworm.

I mention all this only to collect hints about what I should do better, or rather not do at all, when reinstalling.

I do have backups (Backup adapter, or was it called Backitup?).

And then there was the question about expert mode. Yes, I switched it on deliberately, but that was a while ago and I cannot remember exactly what prompted it. I think it had to do with an adapter that I could not install otherwise.

Many thanks for the extensive and ultra-fast feedback!

-

@codierknecht learned something again ;-)

Given the other messages, better cooling might also be worthwhile ... if no heat sink is mounted at all, it does not have to be a heat sink with a fan right away ...

-

@homoran said in iobroker plötzlich nicht mehr oder schwer erreichbar:

not at all!

The system tried and failed.

???


@martinp said in iobroker plötzlich nicht mehr oder schwer erreichbar:

Linux did get the file system running again...

whatever that is supposed to mean.

In any case,

@martinp said in iobroker plötzlich nicht mehr oder schwer erreichbar:

3 orphan inodes deleted

3 orphaned inodes were deleted, not restored.

So parts of one or more files are missing somewhere.

-

The Geekworm unit (I think it is called C728 or similar) actually has a fan built in. But it got on my nerves so badly that I switched it off after a few months. In the beginning I watched the CPU temperature with Rpi-Monitor, and everything seemed to be fine.

Would you recommend "outsourcing" Influx to another Pi?

-

@martinp said in iobroker plötzlich nicht mehr oder schwer erreichbar:

is there anything in the iob diag output that points to a weak power supply

I had quoted that!

@vol907 said in iobroker plötzlich nicht mehr oder schwer erreichbar:

Under-voltage has occurred
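For anyone who wants to check this outside of iob diag: on a Raspberry Pi the same flags can be read with `vcgencmd get_throttled`, which prints a hex bitmask. The following is only a minimal sketch of how such a value can be decoded. The value `0xF0000` is an assumption, chosen because it corresponds to the four "has occurred" entries in the diag above; the actual raw value of this machine is not shown in the thread. The function name `decode_throttled` is made up for illustration.

```python
# Bit positions of the `vcgencmd get_throttled` bitmask.
# Bits 0-3 describe the current state, bits 16-19 what has happened since boot.
THROTTLED_FLAGS = {
    0: "Under-voltage detected",
    1: "Arm frequency capped",
    2: "Currently throttled",
    3: "Soft temperature limit active",
    16: "Under-voltage has occurred",
    17: "Arm frequency capping has occurred",
    18: "Throttling has occurred",
    19: "Soft temperature limit has occurred",
}


def decode_throttled(output: str) -> list[str]:
    """Decode a line such as 'throttled=0x50000' into readable flags."""
    value = int(output.strip().split("=", 1)[1], 16)
    return [text for bit, text in THROTTLED_FLAGS.items() if value & (1 << bit)]


if __name__ == "__main__":
    # Assumed example value, not taken from the poster's machine.
    for flag in decode_throttled("throttled=0xF0000"):
        print(flag)
```

On the Pi itself, the input line would come from running `vcgencmd get_throttled` in a shell. A set bit 16 after a reboot is the usual hint that the power supply or cable dipped below the required voltage at some point.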

-

noch etwas.

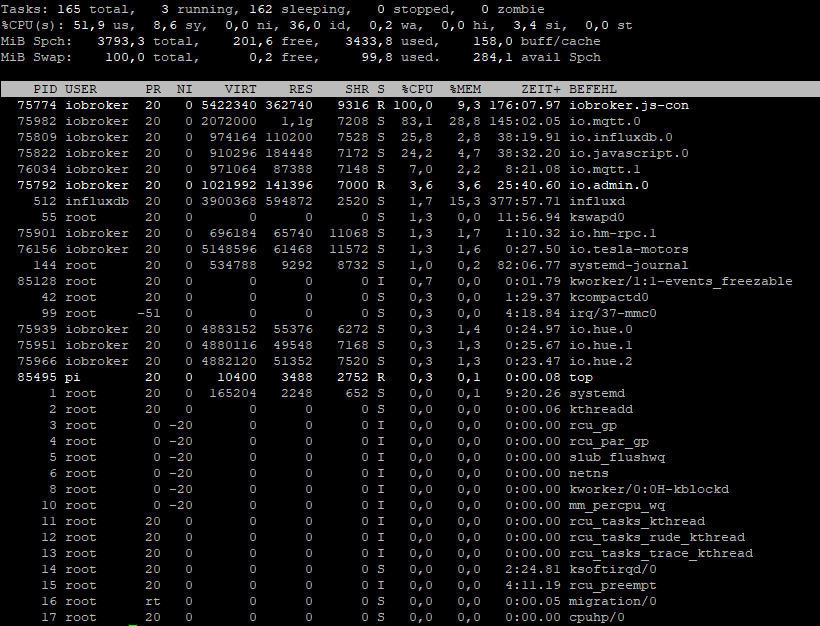

Du könntest mal mittopnach der CPU Last sehen, wo diese besonders hoch ist.Die Load average enthält noch weitere Parameter, u.a. die I/O Vorgänge.

Wenn du Probleme mit dem Zugriff auf die SSD hast, kann das auch die Load hochtreiben.
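To illustrate the point about load versus CPU: on Linux the load average counts tasks that are runnable as well as tasks stuck in uninterruptible sleep, which usually means waiting for disk I/O. A rough sketch of how one might put the two side by side is shown below. It assumes the standard `/proc/loadavg` and `/proc/stat` layouts; the helper names are invented for this example, and the fallback numbers are the load values from the diag above.

```python
def parse_loadavg(text: str) -> tuple[float, float, float]:
    """Return the 1, 5 and 15 minute load averages from /proc/loadavg content."""
    one, five, fifteen = text.split()[:3]
    return float(one), float(five), float(fifteen)


def load_per_thread(load: float, threads: int) -> float:
    """Load divided by CPU threads; values near or above 1.0 mean saturation."""
    return load / threads


def iowait_share(cpu_line: str) -> float:
    """Share of CPU time spent in iowait since boot, from the first /proc/stat line."""
    # Fields after the "cpu" label: user nice system idle iowait irq softirq steal
    values = [int(v) for v in cpu_line.split()[1:9]]
    return values[4] / sum(values)


if __name__ == "__main__":
    try:
        with open("/proc/loadavg") as fh:
            raw_load = fh.read()
        with open("/proc/stat") as fh:
            cpu_line = fh.readline()
    except OSError:
        # Fallback: load values from the diag; the cpu line is a made-up sample.
        raw_load = "3.13 3.31 4.20 1/1 1"
        cpu_line = "cpu 100 0 100 700 100 0 0 0"

    threads = 4  # "CPU threads: 4" in the diag
    for label, load in zip(("1 min", "5 min", "15 min"), parse_loadavg(raw_load)):
        print(f"{label}: load {load:.2f}, per thread {load_per_thread(load, threads):.2f}")
    print(f"iowait share since boot: {iowait_share(cpu_line):.1%}")
```

If the load per thread is high while top shows little CPU usage, a noticeable iowait share would point towards the storage rather than the processes.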

Is the SSD by any chance connected via USB 3?

-

@vol907 said in iobroker plötzlich nicht mehr oder schwer erreichbar:

Would you recommend "outsourcing" Influx to another Pi?

Well ... with < 300 MB of free memory, the box looks pretty maxed out to me.

Moving it elsewhere would be one option. Or a Pi with 8 GB.

Since I do not use Influx myself, though, I cannot say how resource-hungry it is.

-

noch etwas.

Du könntest mal mittopnach der CPU Last sehen, wo diese besonders hoch ist.Die Load average enthält noch weitere Parameter, u.a. die I/O Vorgänge.

Wenn du Probleme mit dem Zugriff auf die SSD hast, kann das auch die Load hochtreiben.

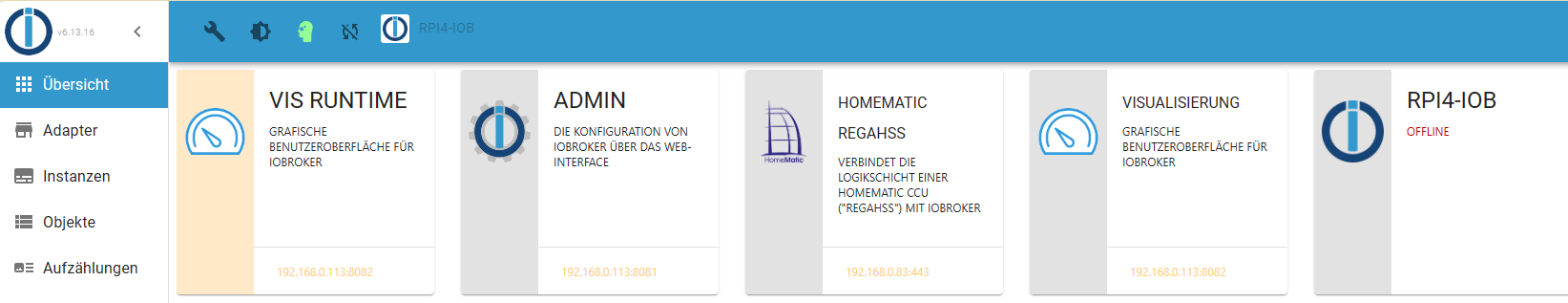

Hängt die SSD etwa am USB 3?@homoran Zwei Prozesse an der Spitze mit je um die 100% CPU (wie geht das denn?), und zwar iobroker.js-con und io.mqtt.0. Danach influxdb und javascript mit um die 25% CPU jeder.

mqtt.0 ist die Verbindung zu einer von beiden openWB's, und zwar die, wo ich mit dem Blockly unterwegs bin. Oh je! rpi-monitor zeigt eine CPU-Temperatur von 79 Grad an. Nochmal oh je! Ich schalte meine Blocklys einzeln aus, um die CPU-Last und -Temperatur zu checken ... nach dem Abendessen.

Das Blöde ist, dass ich an dieser Stelle noch einiges vor hatte. Ich wollte per Blockly PV-Überschuss für die Infrarot-Heizung nutzen.

-

@vol907 said in iobroker plötzlich nicht mehr oder schwer erreichbar:

Two processes at the top

please always show everything!

@vol907 said in iobroker plötzlich nicht mehr oder schwer erreichbar:

around 100% CPU each (how is that even possible?)

that is 100% of one core, so 2 of 4 cores are already fully loaded.
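As a small worked example of that arithmetic: top in its default mode reports %CPU per process relative to a single core, so the values can add up to more than 100 on a multi-core machine. The sketch below uses the rough figures mentioned in the thread ("around 100%" twice, "around 25%" twice) and the 4 CPU threads from the diag. The function names are hypothetical and the numbers are approximations, not measurements.

```python
def cores_in_use(per_process_percent: list[float]) -> float:
    """Sum top's per-process %CPU values and express them as busy cores."""
    return sum(per_process_percent) / 100.0


def overall_cpu_percent(per_process_percent: list[float], threads: int) -> float:
    """Scale the summed per-process values to the whole machine (0 to 100%)."""
    return sum(per_process_percent) / threads


if __name__ == "__main__":
    # Approximate figures from the thread: iobroker.js-con, io.mqtt.0, influxdb, javascript
    observed = [100.0, 100.0, 25.0, 25.0]
    threads = 4  # "CPU threads: 4" in the diag
    print(f"busy cores: {cores_in_use(observed)}")
    print(f"overall CPU: {overall_cpu_percent(observed, threads)}%")
```

With these assumed values, the four processes alone keep roughly two and a half of the four cores busy.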

No wonder it is getting warm.

-

@vol907 said in iobroker plötzlich nicht mehr oder schwer erreichbar:

CPU temperature of 79 degrees.

fits the diag

@vol907 said in iobroker plötzlich nicht mehr oder schwer erreichbar:

Arm frequency capping has occurred

Throttling has occurred

Soft temperature limit has occurred