
Connection drops with Proxmox

-

Hello,

lately I keep losing the connection to ioBroker. ioBroker runs on an Intel NUC 11 with Proxmox. As for the network: I have a FritzBox Cable, with a UniFi Cloud Gateway Ultra connected behind it.

It now happens more and more often that the connection to ioBroker is lost. I'm attaching a few screenshots, since I don't know exactly which data is needed to solve the problem.

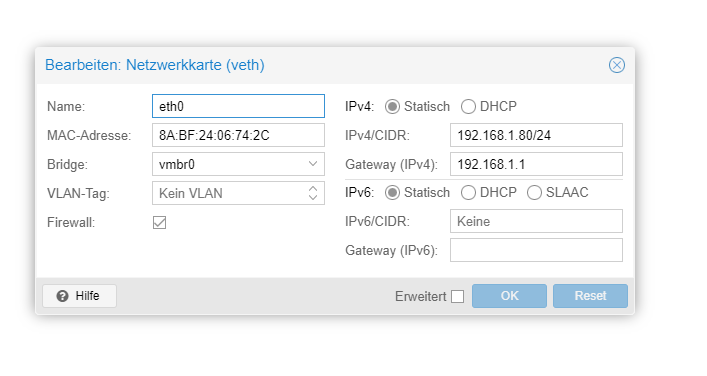

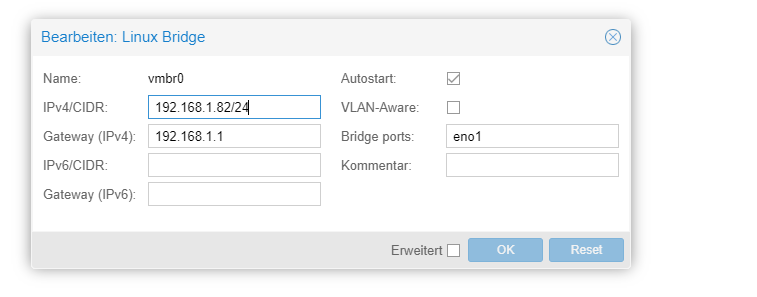

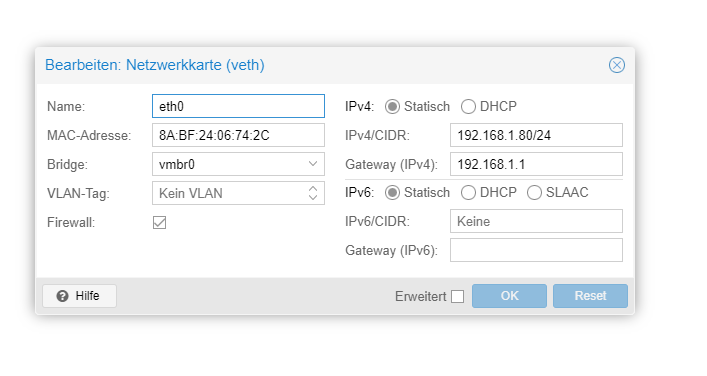

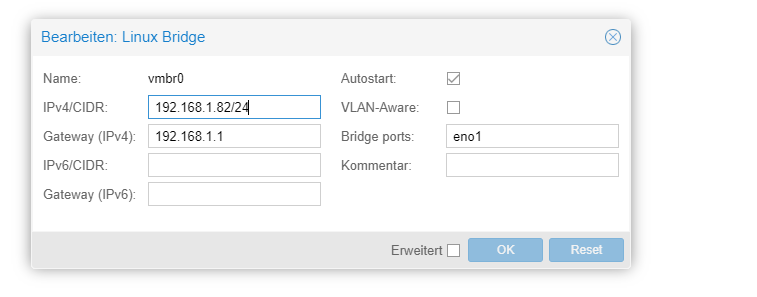

Network settings of the VM in Proxmox

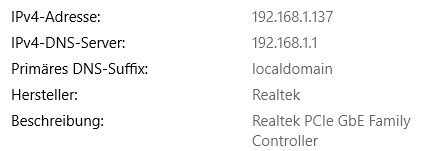

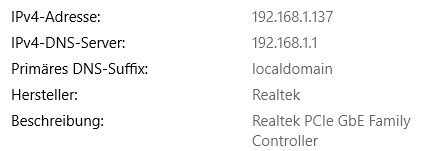

Network settings of the PC I use to access ioBroker

Proxmox network settings

-

@lustig29 said in Connection drops with Proxmox:

It now happens more and more often that the connection to ioBroker is lost.

Without logs this probably won't go anywhere, and it most likely has nothing to do with the network settings.

Please read here.
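When a log is long, a per-program count gives a quick overview before reading it line by line. The following is a minimal sketch, assuming the rsyslog text format used on Debian-based systems such as Proxmox VE (`<timestamp> <host> <program>[<pid>]: <message>`); `syslog_summary` is a hypothetical helper name, not a Proxmox or ioBroker tool, and it will not parse ioBroker's own log format.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count syslog entries per program, most frequent first.
# Assumes lines of the form "<timestamp> <host> <program>[<pid>]: <message>"
# as written by rsyslog on Debian-based systems such as Proxmox VE.
syslog_summary() {
  local file="${1:-/var/log/syslog}"
  awk '{
    tag = $3
    sub(/\[.*$/, "", tag)   # "CRON[68699]:" -> "CRON"
    sub(/:$/, "", tag)      # "kernel:"      -> "kernel"
    count[tag]++
  } END {
    for (t in count) print count[t], t
  }' "$file" | sort -rn
}
```

Usage on the node: `syslog_summary /var/log/syslog | head`. Programs that appear far more often than usual are a good place to start reading.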

-


@meister-mopper It just crashed again. No access to ioBroker or Proxmox.

-

@lustig29 said in Connection drops with Proxmox:

@meister-mopper It just crashed again. No access to ioBroker or Proxmox.

Then the Proxmox logs are what matters.
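Reading the whole Proxmox syslog can be tedious. A minimal sketch, assuming the plain-text syslog at `/var/log/syslog`: a shell function that keeps only lines matching a few keywords that often point to problems. The function name `filter_syslog` and the keyword list are assumptions for illustration, not a Proxmox tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print only syslog lines matching keywords that often
# matter when a Proxmox host or guest becomes unreachable. The keyword list is
# an assumption; extend or trim it for your own setup.
filter_syslog() {
  local file="${1:-/var/log/syslog}"
  # -i: ignore case, -E: extended regular expressions for the alternatives
  grep -i -E 'error|fail|unable|out of memory|panic|link is (up|down)|entered (disabled|blocking|forwarding) state' "$file"
}
```

Usage on the node: `filter_syslog /var/log/syslog | tail -n 50`. Bridge port state changes ("entered disabled state" and similar) are normal whenever a VM or container starts or stops, so they mainly help to line up timestamps with an outage. If the host itself hung and had to be restarted, `journalctl -b -1` shows the journal of the previous boot, provided persistent journaling is enabled.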

-


@meister-mopper Those are the ones from the panel at the bottom center, right? I'll post them as soon as I have access again.

As I said, I can't get in at all at the moment. Restarting it now.

-


@lustig29 said in Connection drops with Proxmox:

@meister-mopper Those are the ones from the panel at the bottom center, right? I'll post them as soon as I have access again.

As I said, I can't get in at all at the moment. Restarting it now.

Then log in to your Proxmox node via an SSH console (PuTTY, PowerShell or whatever you like) and show what is happening there.

For example:

```
thomas@HA-PVE-01:~$ tail -f /var/log/syslog
2024-07-11T19:20:50.744524+02:00 HA-PVE-01 systemd[3232055]: Reached target shutdown.target - Shutdown.
2024-07-11T19:20:50.744684+02:00 HA-PVE-01 systemd[3232055]: Finished systemd-exit.service - Exit the Session.
2024-07-11T19:20:50.744800+02:00 HA-PVE-01 systemd[3232055]: Reached target exit.target - Exit the Session.
2024-07-11T19:20:50.749727+02:00 HA-PVE-01 systemd[1]: user@0.service: Deactivated successfully.
2024-07-11T19:20:50.750050+02:00 HA-PVE-01 systemd[1]: Stopped user@0.service - User Manager for UID 0.
2024-07-11T19:20:50.758016+02:00 HA-PVE-01 systemd[1]: Stopping user-runtime-dir@0.service - User Runtime Directory /run/user/0...
2024-07-11T19:20:50.764763+02:00 HA-PVE-01 systemd[1]: run-user-0.mount: Deactivated successfully.
2024-07-11T19:20:50.765926+02:00 HA-PVE-01 systemd[1]: user-runtime-dir@0.service: Deactivated successfully.
2024-07-11T19:20:50.766121+02:00 HA-PVE-01 systemd[1]: Stopped user-runtime-dir@0.service - User Runtime Directory /run/user/0.
2024-07-11T19:20:50.767483+02:00 HA-PVE-01 systemd[1]: Removed slice user-0.slice - User Slice of UID 0.
```

Or, to see everything:

```
cat /var/log/syslog
```

-

Hallo,

leider habe ich in letzter Zeit immer Verbindungsabbrüche mit dem Iobroker. Der Iobroker läuft auf einem Intel Nuc 11 mit Proxmox. Zum Netzwerk, ich habe eine Fritzbox Cable Box und dahinter hängt ein Unifi Cloud Gateway Ultra.

Leider passiert jetzt immer öfters die Verbindung zum Iobroker verloren geht.Ich hänge mal ein paar Screenshots an. Ich weiß ja nicht welche Daten genau zur Lösung des Problems gebraucht werden.

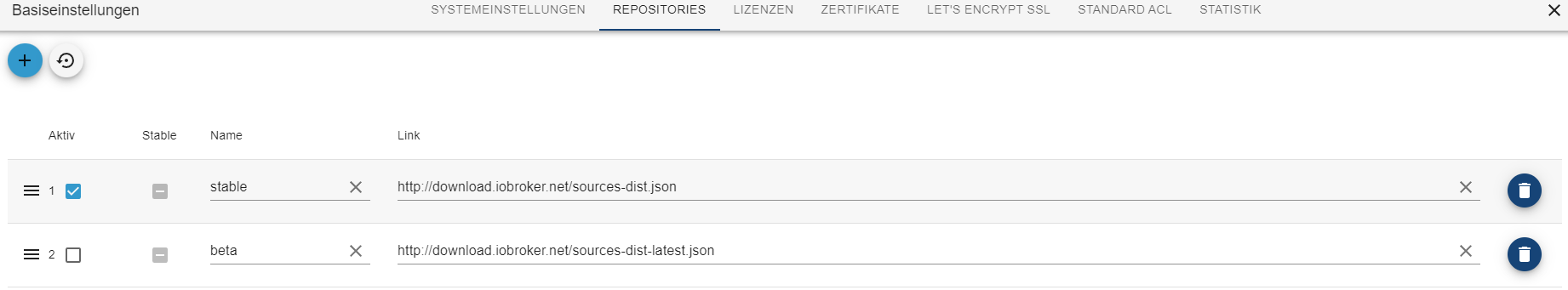

Netzwerk von der VM in Proxmox

Netzerk vom Pc von dem aus ich auf den Iobroker zugreife

Netzwerk Proxmox

@lustig29

Are the Cloud Gateway and the FritzBox getting in each other's way somehow, somewhere? Is the IP assignment "clean"? After all, this is a router cascade, possibly with double NAT. An IP conflict could also explain why the system becomes unreachable.

-


@samson71 Solved. I had plugged a network cable into the wrong port, so IP addresses were being handed out by both the FritzBox and the UniFi gateway.
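One way to spot two DHCP servers in a router cascade is to collect the IPv4 addresses of the involved devices (Proxmox host, ioBroker VM, PC) and check whether they all fall into the same network; a FritzBox, for instance, typically defaults to 192.168.178.0/24. A minimal sketch under stated assumptions: `check_subnets` is a hypothetical helper, it only handles IPv4, and it assumes the LAN is a single /24.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "name IPv4-address" pairs from stdin, print the
# distinct /24 networks they belong to, and return 1 if there is more than one.
# Assumes every device should sit in one /24 LAN; adjust if yours is larger.
check_subnets() {
  local nets
  # Keep the first three octets of column 2 and print them as a /24 network
  nets="$(awk 'NF >= 2 { split($2, o, "."); print o[1] "." o[2] "." o[3] ".0/24" }' | sort -u)"
  printf '%s\n' "$nets"
  # Success only if at most one distinct network was seen
  [ "$(printf '%s\n' "$nets" | grep -c .)" -le 1 ]
}
```

For example, `printf '%s\n' 'pve 192.168.178.30' 'iobroker 192.168.1.20' | check_subnets` prints both /24 networks and returns 1. On the Proxmox host, `ip -4 -br addr` lists the addresses of the bridges such as `vmbr0`; for the ioBroker VM and the PC, the addresses can be taken from their network settings.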

-

@lustig29

I suspected something along those lines. Two routers in one network are quite a challenge. But good that it's solved now.

-

@meister-mopper said in Connection drops with Proxmox (Solved):

cat /var/log/syslog

```
2024-07-14T00:00:14.535906+02:00 pve systemd[1]: rsyslog.service: Sent signal SIGHUP to main process 574 (rsyslogd) on client request.
2024-07-14T00:00:14.536843+02:00 pve rsyslogd: [origin software="rsyslogd" swVersion="8.2302.0" x-pid="574" x-info="https://www.rsyslog.com"] rsyslogd was HUPed
2024-07-14T00:00:14.539451+02:00 pve systemd[1]: logrotate.service: Deactivated successfully.
2024-07-14T00:00:14.539648+02:00 pve systemd[1]: Finished logrotate.service - Rotate log files.
2024-07-14T00:00:14.659621+02:00 pve spiceproxy[962]: restarting server
2024-07-14T00:00:14.659731+02:00 pve spiceproxy[962]: starting 1 worker(s)
2024-07-14T00:00:14.661340+02:00 pve spiceproxy[962]: worker 66012 started
2024-07-14T00:00:14.910397+02:00 pve pveproxy[956]: restarting server
2024-07-14T00:00:14.910521+02:00 pve pveproxy[956]: starting 3 worker(s)
2024-07-14T00:00:14.910573+02:00 pve pveproxy[956]: worker 66013 started
2024-07-14T00:00:14.913512+02:00 pve pveproxy[956]: worker 66014 started
2024-07-14T00:00:14.916526+02:00 pve pveproxy[956]: worker 66015 started
2024-07-14T00:00:19.662458+02:00 pve spiceproxy[963]: worker exit
2024-07-14T00:00:19.671114+02:00 pve spiceproxy[962]: worker 963 finished
2024-07-14T00:00:19.917394+02:00 pve pveproxy[957]: worker exit
2024-07-14T00:00:19.917670+02:00 pve pveproxy[958]: worker exit
2024-07-14T00:00:19.917928+02:00 pve pveproxy[12340]: worker exit
2024-07-14T00:00:19.950456+02:00 pve pveproxy[956]: worker 12340 finished
2024-07-14T00:00:19.950626+02:00 pve pveproxy[956]: worker 958 finished
2024-07-14T00:00:19.953569+02:00 pve pveproxy[956]: worker 957 finished
2024-07-14T00:17:01.761976+02:00 pve CRON[68699]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
2024-07-14T00:24:01.770880+02:00 pve CRON[69810]: (root) CMD (if [ $(date +%w) -eq 0 ] && [ -x /usr/lib/zfs-linux/scrub ]; then /usr/lib/zfs-linux/scrub; fi)
2024-07-14T01:17:01.809682+02:00 pve CRON[78291]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
2024-07-14T01:34:49.683392+02:00 pve kernel: [28027.115921] perf: interrupt took too long (4324 > 4252), lowering kernel.perf_event_max_sample_rate to 46250
2024-07-14T02:17:01.849689+02:00 pve CRON[87881]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
2024-07-14T02:43:54.968115+02:00 pve systemd[1]: Starting pve-daily-update.service - Daily PVE download activities...
2024-07-14T02:43:56.495660+02:00 pve pveupdate[92161]: <root@pam> starting task UPID:pve:00016824:003117F4:66931F4C:aptupdate::root@pam:
2024-07-14T02:43:57.889413+02:00 pve pveupdate[92196]: update new package list: /var/lib/pve-manager/pkgupdates
2024-07-14T02:44:00.774455+02:00 pve pveupdate[92161]: <root@pam> end task UPID:pve:00016824:003117F4:66931F4C:aptupdate::root@pam: OK
2024-07-14T02:44:00.815568+02:00 pve systemd[1]: pve-daily-update.service: Deactivated successfully.
2024-07-14T02:44:00.815692+02:00 pve systemd[1]: Finished pve-daily-update.service - Daily PVE download activities.
2024-07-14T02:44:00.815961+02:00 pve systemd[1]: pve-daily-update.service: Consumed 5.040s CPU time.
2024-07-14T02:47:48.760419+02:00 pve systemd[1]: Starting apt-daily.service - Daily apt download activities...
2024-07-14T02:47:49.156377+02:00 pve systemd[1]: apt-daily.service: Deactivated successfully.
2024-07-14T02:47:49.156587+02:00 pve systemd[1]: Finished apt-daily.service - Daily apt download activities.
2024-07-14T03:10:01.881594+02:00 pve CRON[96790]: (root) CMD (test -e /run/systemd/system || SERVICE_MODE=1 /sbin/e2scrub_all -A -r)
2024-07-14T03:10:54.960943+02:00 pve systemd[1]: Starting e2scrub_all.service - Online ext4 Metadata Check for All Filesystems...
2024-07-14T03:10:54.962940+02:00 pve systemd[1]: e2scrub_all.service: Deactivated successfully.
2024-07-14T03:10:54.963394+02:00 pve systemd[1]: Finished e2scrub_all.service - Online ext4 Metadata Check for All Filesystems.
2024-07-14T03:17:01.889180+02:00 pve CRON[97913]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
2024-07-14T03:30:01.903232+02:00 pve CRON[99983]: (root) CMD (test -e /run/systemd/system || SERVICE_MODE=1 /usr/lib/x86_64-linux-gnu/e2fsprogs/e2scrub_all_cron)
2024-07-14T04:17:01.935516+02:00 pve CRON[107503]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
2024-07-14T05:17:01.984064+02:00 pve CRON[117097]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
2024-07-14T06:07:12.440421+02:00 pve systemd[1]: Starting apt-daily-upgrade.service - Daily apt upgrade and clean activities...
2024-07-14T06:07:12.833064+02:00 pve systemd[1]: apt-daily-upgrade.service: Deactivated successfully.
2024-07-14T06:07:12.833281+02:00 pve systemd[1]: Finished apt-daily-upgrade.service - Daily apt upgrade and clean activities.
2024-07-14T06:17:01.027303+02:00 pve CRON[126741]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
2024-07-14T06:25:01.039726+02:00 pve CRON[128054]: (root) CMD (test -x /usr/sbin/anacron || { cd / && run-parts --report /etc/cron.daily; })
2024-07-14T06:47:01.065556+02:00 pve CRON[131582]: (root) CMD (test -x /usr/sbin/anacron || { cd / && run-parts --report /etc/cron.weekly; })
2024-07-14T07:17:01.092529+02:00 pve CRON[136381]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
2024-07-14T07:56:12.436376+02:00 pve systemd[1]: Starting man-db.service - Daily man-db regeneration...
2024-07-14T07:56:12.724449+02:00 pve systemd[1]: man-db.service: Deactivated successfully.
2024-07-14T07:56:12.724686+02:00 pve systemd[1]: Finished man-db.service - Daily man-db regeneration.
2024-07-14T08:17:01.133335+02:00 pve CRON[145967]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
2024-07-14T09:17:01.173028+02:00 pve CRON[155591]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
2024-07-14T10:17:01.219237+02:00 pve CRON[165172]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
2024-07-14T11:17:01.256411+02:00 pve CRON[174753]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
2024-07-14T12:17:01.298733+02:00 pve CRON[184337]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
2024-07-14T13:17:01.342604+02:00 pve CRON[193924]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
2024-07-14T14:17:01.382525+02:00 pve CRON[203509]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
2024-07-14T14:54:07.957706+02:00 pve pvestatd[918]: auth key pair too old, rotating..
2024-07-14T15:14:54.964638+02:00 pve systemd[1]: Starting apt-daily.service - Daily apt download activities...
2024-07-14T15:14:55.356350+02:00 pve systemd[1]: apt-daily.service: Deactivated successfully.
2024-07-14T15:14:55.356584+02:00 pve systemd[1]: Finished apt-daily.service - Daily apt download activities.
2024-07-14T15:17:01.422743+02:00 pve CRON[213135]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
2024-07-14T15:55:58.277154+02:00 pve pvedaemon[947]: <root@pam> successful auth for user 'root@pam'
2024-07-14T15:58:54.564988+02:00 pve pvedaemon[220000]: requesting reboot of CT 104: UPID:pve:00035B60:0079E003:6693D99E:vzreboot:104:root@pam:
2024-07-14T15:58:54.565371+02:00 pve pvedaemon[947]: <root@pam> starting task UPID:pve:00035B60:0079E003:6693D99E:vzreboot:104:root@pam:
2024-07-14T15:59:01.143428+02:00 pve kernel: [79878.579451] fwbr104i0: port 2(veth104i0) entered disabled state
2024-07-14T15:59:01.143442+02:00 pve kernel: [79878.580472] veth104i0 (unregistering): left allmulticast mode
2024-07-14T15:59:01.143443+02:00 pve kernel: [79878.580476] veth104i0 (unregistering): left promiscuous mode
2024-07-14T15:59:01.143443+02:00 pve kernel: [79878.580478] fwbr104i0: port 2(veth104i0) entered disabled state
2024-07-14T15:59:01.507428+02:00 pve kernel: [79878.944519] kauditd_printk_skb: 3 callbacks suppressed
2024-07-14T15:59:01.507456+02:00 pve kernel: [79878.944522] audit: type=1400 audit(1720965541.499:53): apparmor="STATUS" operation="profile_remove" profile="/usr/bin/lxc-start" name="lxc-104_</var/lib/lxc>" pid=220070 comm="apparmor_parser"
2024-07-14T15:59:01.521571+02:00 pve pvedaemon[948]: unable to get PID for CT 104 (not running?)
2024-07-14T15:59:02.766357+02:00 pve pvedaemon[948]: unable to get PID for CT 104 (not running?)
2024-07-14T15:59:02.803389+02:00 pve kernel: [79880.241184] EXT4-fs (dm-8): unmounting filesystem 9cf36612-be29-41df-9082-486a20927adc.
2024-07-14T15:59:02.843581+02:00 pve kernel: [79880.279645] fwbr104i0: port 1(fwln104i0) entered disabled state
2024-07-14T15:59:02.843595+02:00 pve kernel: [79880.279723] vmbr0: port 2(fwpr104p0) entered disabled state
2024-07-14T15:59:02.843597+02:00 pve kernel: [79880.280098] fwln104i0 (unregistering): left allmulticast mode
2024-07-14T15:59:02.843597+02:00 pve kernel: [79880.280102] fwln104i0 (unregistering): left promiscuous mode
2024-07-14T15:59:02.843598+02:00 pve kernel: [79880.280104] fwbr104i0: port 1(fwln104i0) entered disabled state
2024-07-14T15:59:02.871400+02:00 pve kernel: [79880.307915] fwpr104p0 (unregistering): left allmulticast mode
2024-07-14T15:59:02.871414+02:00 pve kernel: [79880.307923] fwpr104p0 (unregistering): left promiscuous mode
2024-07-14T15:59:02.871415+02:00 pve kernel: [79880.307926] vmbr0: port 2(fwpr104p0) entered disabled state
2024-07-14T15:59:03.054101+02:00 pve systemd[1]: pve-container@104.service: Deactivated successfully.
2024-07-14T15:59:03.212444+02:00 pve systemd[1]: Started pve-container@104.service - PVE LXC Container: 104.
2024-07-14T15:59:03.843352+02:00 pve kernel: [79881.282393] EXT4-fs (dm-8): mounted filesystem 9cf36612-be29-41df-9082-486a20927adc r/w with ordered data mode. Quota mode: none.
2024-07-14T15:59:04.227385+02:00 pve kernel: [79881.666241] audit: type=1400 audit(1720965544.219:54): apparmor="STATUS" operation="profile_load" profile="/usr/bin/lxc-start" name="lxc-104_</var/lib/lxc>" pid=220111 comm="apparmor_parser"
2024-07-14T15:59:04.915354+02:00 pve kernel: [79882.355075] vmbr0: port 2(fwpr104p0) entered blocking state
2024-07-14T15:59:04.915368+02:00 pve kernel: [79882.355083] vmbr0: port 2(fwpr104p0) entered disabled state
2024-07-14T15:59:04.915370+02:00 pve kernel: [79882.355109] fwpr104p0: entered allmulticast mode
2024-07-14T15:59:04.915370+02:00 pve kernel: [79882.355155] fwpr104p0: entered promiscuous mode
2024-07-14T15:59:04.915371+02:00 pve kernel: [79882.355207] vmbr0: port 2(fwpr104p0) entered blocking state
2024-07-14T15:59:04.915371+02:00 pve kernel: [79882.355210] vmbr0: port 2(fwpr104p0) entered forwarding state
2024-07-14T15:59:04.931403+02:00 pve kernel: [79882.368422] fwbr104i0: port 1(fwln104i0) entered blocking state
2024-07-14T15:59:04.931418+02:00 pve kernel: [79882.368429] fwbr104i0: port 1(fwln104i0) entered disabled state
2024-07-14T15:59:04.931419+02:00 pve kernel: [79882.368454] fwln104i0: entered allmulticast mode
2024-07-14T15:59:04.931420+02:00 pve kernel: [79882.368499] fwln104i0: entered promiscuous mode
2024-07-14T15:59:04.931420+02:00 pve kernel: [79882.368550] fwbr104i0: port 1(fwln104i0) entered blocking state
2024-07-14T15:59:04.931421+02:00 pve kernel: [79882.368553] fwbr104i0: port 1(fwln104i0) entered forwarding state
2024-07-14T15:59:04.943375+02:00 pve kernel: [79882.381978] fwbr104i0: port 2(veth104i0) entered blocking state
2024-07-14T15:59:04.943392+02:00 pve kernel: [79882.381986] fwbr104i0: port 2(veth104i0) entered disabled state
2024-07-14T15:59:04.943393+02:00 pve kernel: [79882.382012] veth104i0: entered allmulticast mode
2024-07-14T15:59:04.943394+02:00 pve kernel: [79882.382058] veth104i0: entered promiscuous mode
2024-07-14T15:59:04.995384+02:00 pve kernel: [79882.434976] eth0: renamed from veth7i06Am
2024-07-14T15:59:05.185330+02:00 pve pvedaemon[947]: <root@pam> end task UPID:pve:00035B60:0079E003:6693D99E:vzreboot:104:root@pam: OK
2024-07-14T15:59:05.539373+02:00 pve kernel: [79882.977789] audit: type=1400 audit(1720965545.531:55): apparmor="STATUS" operation="profile_load" label="lxc-104_</var/lib/lxc>//&:lxc-104_<-var-lib-lxc>:unconfined" name="lsb_release" pid=220249 comm="apparmor_parser"
2024-07-14T15:59:05.539391+02:00 pve kernel: [79882.977892] audit: type=1400 audit(1720965545.531:56): apparmor="STATUS" operation="profile_load" label="lxc-104_</var/lib/lxc>//&:lxc-104_<-var-lib-lxc>:unconfined" name="nvidia_modprobe" pid=220250 comm="apparmor_parser"
2024-07-14T15:59:05.539392+02:00 pve kernel: [79882.977897] audit: type=1400 audit(1720965545.531:57): apparmor="STATUS" operation="profile_load" label="lxc-104_</var/lib/lxc>//&:lxc-104_<-var-lib-lxc>:unconfined" name="nvidia_modprobe//kmod" pid=220250 comm="apparmor_parser"
2024-07-14T15:59:05.547367+02:00 pve kernel: [79882.984185] audit: type=1400 audit(1720965545.539:58): apparmor="STATUS" operation="profile_load" label="lxc-104_</var/lib/lxc>//&:lxc-104_<-var-lib-lxc>:unconfined" name="ubuntu_pro_apt_news" pid=220252 comm="apparmor_parser"
2024-07-14T15:59:05.547437+02:00 pve kernel: [79882.987396] audit: type=1400 audit(1720965545.543:59): apparmor="STATUS" operation="profile_load" label="lxc-104_</var/lib/lxc>//&:lxc-104_<-var-lib-lxc>:unconfined" name="/usr/lib/NetworkManager/nm-dhcp-client.action" pid=220251 comm="apparmor_parser"
2024-07-14T15:59:05.547439+02:00 pve kernel: [79882.987403] audit: type=1400 audit(1720965545.543:60): apparmor="STATUS" operation="profile_load" label="lxc-104_</var/lib/lxc>//&:lxc-104_<-var-lib-lxc>:unconfined" name="/usr/lib/NetworkManager/nm-dhcp-helper" pid=220251 comm="apparmor_parser"
2024-07-14T15:59:05.547440+02:00 pve kernel: [79882.987406] audit: type=1400 audit(1720965545.543:61): apparmor="STATUS" operation="profile_load" label="lxc-104_</var/lib/lxc>//&:lxc-104_<-var-lib-lxc>:unconfined" name="/usr/lib/connman/scripts/dhclient-script" pid=220251 comm="apparmor_parser"
2024-07-14T15:59:05.547440+02:00 pve kernel: [79882.987409] audit: type=1400 audit(1720965545.543:62): apparmor="STATUS" operation="profile_load" label="lxc-104_</var/lib/lxc>//&:lxc-104_<-var-lib-lxc>:unconfined" name="/{,usr/}sbin/dhclient" pid=220251 comm="apparmor_parser"
2024-07-14T15:59:05.567390+02:00 pve kernel: [79883.004630] fwbr104i0: port 2(veth104i0) entered blocking state
2024-07-14T15:59:05.567407+02:00 pve kernel: [79883.004637] fwbr104i0: port 2(veth104i0) entered forwarding state
2024-07-14T16:10:52.070638+02:00 pve pvedaemon[948]: <root@pam> successful auth for user 'root@pam'
2024-07-14T16:17:01.468156+02:00 pve CRON[225492]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
2024-07-14T16:19:00.135735+02:00 pve pvedaemon[948]: <root@pam> starting task UPID:pve:0003728C:007BB6F0:6693DE54:vncshell::root@pam:
2024-07-14T16:19:00.138699+02:00 pve pvedaemon[225932]: starting termproxy UPID:pve:0003728C:007BB6F0:6693DE54:vncshell::root@pam:
2024-07-14T16:19:00.208362+02:00 pve pvedaemon[949]: <root@pam> successful auth for user 'root@pam'
2024-07-14T16:19:00.227108+02:00 pve systemd[1]: Created slice user-0.slice - User Slice of UID 0.
2024-07-14T16:19:00.252349+02:00 pve systemd[1]: Starting user-runtime-dir@0.service - User Runtime Directory /run/user/0...
2024-07-14T16:19:00.263297+02:00 pve systemd[1]: Finished user-runtime-dir@0.service - User Runtime Directory /run/user/0.
2024-07-14T16:19:00.264989+02:00 pve systemd[1]: Starting user@0.service - User Manager for UID 0...
2024-07-14T16:19:00.404248+02:00 pve systemd[225946]: Queued start job for default target default.target.
2024-07-14T16:19:00.428842+02:00 pve systemd[225946]: Created slice app.slice - User Application Slice.
2024-07-14T16:19:00.428968+02:00 pve systemd[225946]: Reached target paths.target - Paths.
2024-07-14T16:19:00.429016+02:00 pve systemd[225946]: Reached target timers.target - Timers.
2024-07-14T16:19:00.429858+02:00 pve systemd[225946]: Starting dbus.socket - D-Bus User Message Bus Socket...
2024-07-14T16:19:00.430084+02:00 pve systemd[225946]: Listening on dirmngr.socket - GnuPG network certificate management daemon.
2024-07-14T16:19:00.430224+02:00 pve systemd[225946]: Listening on gpg-agent-browser.socket - GnuPG cryptographic agent and passphrase cache (access for web browsers).
2024-07-14T16:19:00.430338+02:00 pve systemd[225946]: Listening on gpg-agent-extra.socket - GnuPG cryptographic agent and passphrase cache (restricted).
2024-07-14T16:19:00.430429+02:00 pve systemd[225946]: Listening on gpg-agent-ssh.socket - GnuPG cryptographic agent (ssh-agent emulation).
2024-07-14T16:19:00.430517+02:00 pve systemd[225946]: Listening on gpg-agent.socket - GnuPG cryptographic agent and passphrase cache.
2024-07-14T16:19:00.436459+02:00 pve systemd[225946]: Listening on dbus.socket - D-Bus User Message Bus Socket.
2024-07-14T16:19:00.436564+02:00 pve systemd[225946]: Reached target sockets.target - Sockets.
2024-07-14T16:19:00.436619+02:00 pve systemd[225946]: Reached target basic.target - Basic System.
2024-07-14T16:19:00.436672+02:00 pve systemd[225946]: Reached target default.target - Main User Target.
2024-07-14T16:19:00.436714+02:00 pve systemd[225946]: Startup finished in 162ms.
2024-07-14T16:19:00.436764+02:00 pve systemd[1]: Started user@0.service - User Manager for UID 0.
2024-07-14T16:19:00.437719+02:00 pve systemd[1]: Started session-56.scope - Session 56 of User root.
```

-

from veth7i06Am 2024-07-14T15:59:05.185330+02:00 pve pvedaemon[947]: <root@pam> end task UPID:pve:00035B60:0079E003:6693D99E:vzreboot:104:root@pam: OK 2024-07-14T15:59:05.539373+02:00 pve kernel: [79882.977789] audit: type=1400 audit(1720965545.531:55): apparmor="STATUS" operation="profile_load" label="lxc-104_</var/lib/lxc>//&:lxc-104_<-var-lib-lxc>:unconfined" name="lsb_release" pid=220249 comm="apparmor_parser" 2024-07-14T15:59:05.539391+02:00 pve kernel: [79882.977892] audit: type=1400 audit(1720965545.531:56): apparmor="STATUS" operation="profile_load" label="lxc-104_</var/lib/lxc>//&:lxc-104_<-var-lib-lxc>:unconfined" name="nvidia_modprobe" pid=220250 comm="apparmor_parser" 2024-07-14T15:59:05.539392+02:00 pve kernel: [79882.977897] audit: type=1400 audit(1720965545.531:57): apparmor="STATUS" operation="profile_load" label="lxc-104_</var/lib/lxc>//&:lxc-104_<-var-lib-lxc>:unconfined" name="nvidia_modprobe//kmod" pid=220250 comm="apparmor_parser" 2024-07-14T15:59:05.547367+02:00 pve kernel: [79882.984185] audit: type=1400 audit(1720965545.539:58): apparmor="STATUS" operation="profile_load" label="lxc-104_</var/lib/lxc>//&:lxc-104_<-var-lib-lxc>:unconfined" name="ubuntu_pro_apt_news" pid=220252 comm="apparmor_parser" 2024-07-14T15:59:05.547437+02:00 pve kernel: [79882.987396] audit: type=1400 audit(1720965545.543:59): apparmor="STATUS" operation="profile_load" label="lxc-104_</var/lib/lxc>//&:lxc-104_<-var-lib-lxc>:unconfined" name="/usr/lib/NetworkManager/nm-dhcp-client.action" pid=220251 comm="apparmor_parser" 2024-07-14T15:59:05.547439+02:00 pve kernel: [79882.987403] audit: type=1400 audit(1720965545.543:60): apparmor="STATUS" operation="profile_load" label="lxc-104_</var/lib/lxc>//&:lxc-104_<-var-lib-lxc>:unconfined" name="/usr/lib/NetworkManager/nm-dhcp-helper" pid=220251 comm="apparmor_parser" 2024-07-14T15:59:05.547440+02:00 pve kernel: [79882.987406] audit: type=1400 audit(1720965545.543:61): apparmor="STATUS" operation="profile_load" 
label="lxc-104_</var/lib/lxc>//&:lxc-104_<-var-lib-lxc>:unconfined" name="/usr/lib/connman/scripts/dhclient-script" pid=220251 comm="apparmor_parser" 2024-07-14T15:59:05.547440+02:00 pve kernel: [79882.987409] audit: type=1400 audit(1720965545.543:62): apparmor="STATUS" operation="profile_load" label="lxc-104_</var/lib/lxc>//&:lxc-104_<-var-lib-lxc>:unconfined" name="/{,usr/}sbin/dhclient" pid=220251 comm="apparmor_parser" 2024-07-14T15:59:05.567390+02:00 pve kernel: [79883.004630] fwbr104i0: port 2(veth104i0) entered blocking state 2024-07-14T15:59:05.567407+02:00 pve kernel: [79883.004637] fwbr104i0: port 2(veth104i0) entered forwarding state 2024-07-14T16:10:52.070638+02:00 pve pvedaemon[948]: <root@pam> successful auth for user 'root@pam' 2024-07-14T16:17:01.468156+02:00 pve CRON[225492]: (root) CMD (cd / && run-parts --report /etc/cron.hourly) 2024-07-14T16:19:00.135735+02:00 pve pvedaemon[948]: <root@pam> starting task UPID:pve:0003728C:007BB6F0:6693DE54:vncshell::root@pam: 2024-07-14T16:19:00.138699+02:00 pve pvedaemon[225932]: starting termproxy UPID:pve:0003728C:007BB6F0:6693DE54:vncshell::root@pam: 2024-07-14T16:19:00.208362+02:00 pve pvedaemon[949]: <root@pam> successful auth for user 'root@pam' 2024-07-14T16:19:00.227108+02:00 pve systemd[1]: Created slice user-0.slice - User Slice of UID 0. 2024-07-14T16:19:00.252349+02:00 pve systemd[1]: Starting user-runtime-dir@0.service - User Runtime Directory /run/user/0... 2024-07-14T16:19:00.263297+02:00 pve systemd[1]: Finished user-runtime-dir@0.service - User Runtime Directory /run/user/0. 2024-07-14T16:19:00.264989+02:00 pve systemd[1]: Starting user@0.service - User Manager for UID 0... 2024-07-14T16:19:00.404248+02:00 pve systemd[225946]: Queued start job for default target default.target. 2024-07-14T16:19:00.428842+02:00 pve systemd[225946]: Created slice app.slice - User Application Slice. 2024-07-14T16:19:00.428968+02:00 pve systemd[225946]: Reached target paths.target - Paths. 
2024-07-14T16:19:00.429016+02:00 pve systemd[225946]: Reached target timers.target - Timers. 2024-07-14T16:19:00.429858+02:00 pve systemd[225946]: Starting dbus.socket - D-Bus User Message Bus Socket... 2024-07-14T16:19:00.430084+02:00 pve systemd[225946]: Listening on dirmngr.socket - GnuPG network certificate management daemon. 2024-07-14T16:19:00.430224+02:00 pve systemd[225946]: Listening on gpg-agent-browser.socket - GnuPG cryptographic agent and passphrase cache (access for web browsers). 2024-07-14T16:19:00.430338+02:00 pve systemd[225946]: Listening on gpg-agent-extra.socket - GnuPG cryptographic agent and passphrase cache (restricted). 2024-07-14T16:19:00.430429+02:00 pve systemd[225946]: Listening on gpg-agent-ssh.socket - GnuPG cryptographic agent (ssh-agent emulation). 2024-07-14T16:19:00.430517+02:00 pve systemd[225946]: Listening on gpg-agent.socket - GnuPG cryptographic agent and passphrase cache. 2024-07-14T16:19:00.436459+02:00 pve systemd[225946]: Listening on dbus.socket - D-Bus User Message Bus Socket. 2024-07-14T16:19:00.436564+02:00 pve systemd[225946]: Reached target sockets.target - Sockets. 2024-07-14T16:19:00.436619+02:00 pve systemd[225946]: Reached target basic.target - Basic System. 2024-07-14T16:19:00.436672+02:00 pve systemd[225946]: Reached target default.target - Main User Target. 2024-07-14T16:19:00.436714+02:00 pve systemd[225946]: Startup finished in 162ms. 2024-07-14T16:19:00.436764+02:00 pve systemd[1]: Started user@0.service - User Manager for UID 0. 2024-07-14T16:19:00.437719+02:00 pve systemd[1]: Started session-56.scope - Session 56 of User root. -
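In this excerpt, CT 104 is rebooted by `root@pam` via `pvedaemon` at 15:58:54 (`requesting reboot of CT 104`), and its interfaces are back in forwarding state about 11 seconds later. To pull the entries for one container out of a long syslog paste like this, here is a minimal Python sketch. It assumes one entry per line in the `<timestamp> <host> <process>: <message>` layout shown above; the name patterns (`CT 104`, `vzreboot:104`, `pve-container@104`, `lxc-104_`, `veth104i0`, `fwbr104i0`, `fwln104i0`, `fwpr104p0`) are taken only from this excerpt and may not cover every message Proxmox writes for a container.

```python
import re
from datetime import datetime

# One syslog entry in the layout of the excerpt:
# "<ISO timestamp with offset> <host> <process[pid] or kernel>: <message>"
ENTRY = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}\.\d{6}[+-]\d{2}:\d{2})\s+"
    r"(?P<host>\S+)\s+(?P<proc>[^:\s]+):\s(?P<msg>.*)$"
)


def container_events(lines, ctid: int):
    """Yield (timestamp, process, message) for entries that mention container `ctid`."""
    # Mentions seen in the excerpt: "CT 104", "...:104:", "pve-container@104", "lxc-104_",
    # plus the per-container interfaces veth104i0, fwbr104i0, fwln104i0 and fwpr104p0.
    # (?!\d) keeps CT 104 from also matching CT 1040.
    mention = re.compile(
        rf"(?:CT |:|@|lxc-){ctid}(?!\d)|(?:veth|fwbr|fwln|fwpr){ctid}[ip]\d"
    )
    for line in lines:
        m = ENTRY.match(line.strip())
        if m and mention.search(m["msg"]):
            yield datetime.fromisoformat(m["ts"]), m["proc"], m["msg"]
```

Usage, assuming the log was saved to a hypothetical file `syslog.txt` with one entry per line: `with open("syslog.txt") as f: for ts, proc, msg in container_events(f, 104): print(ts, proc, msg)`. A gap in the output, or a reboot request that nobody triggered, is easier to spot this way than in the full log.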

I had to reactivate the post. Unfortunately, the connection to ioBroker just dropped again.

Cannot read compact mode by host "system.host.IobrokerHauptinstallation": timeout

-

@lustig29 said in Verbindungsabbrüche mit Proxmox:

I had to reactivate the post. Unfortunately, the connection to ioBroker just dropped again.

Cannot read compact mode by host "system.host.IobrokerHauptinstallation": timeout

Where exactly do you get this message? In the browser, in the Admin tab?

Or from the console?

Which OS and which ioBroker versions are running there?

Best run "iob diag" in the console and post the output here, then we can say more.

-


@ilovegym Yes, exactly. The message appears in the browser while the loading circle keeps spinning.

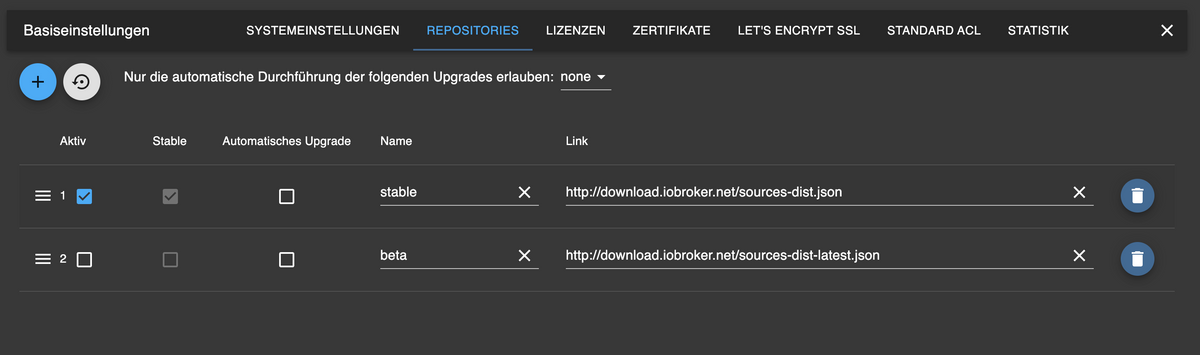

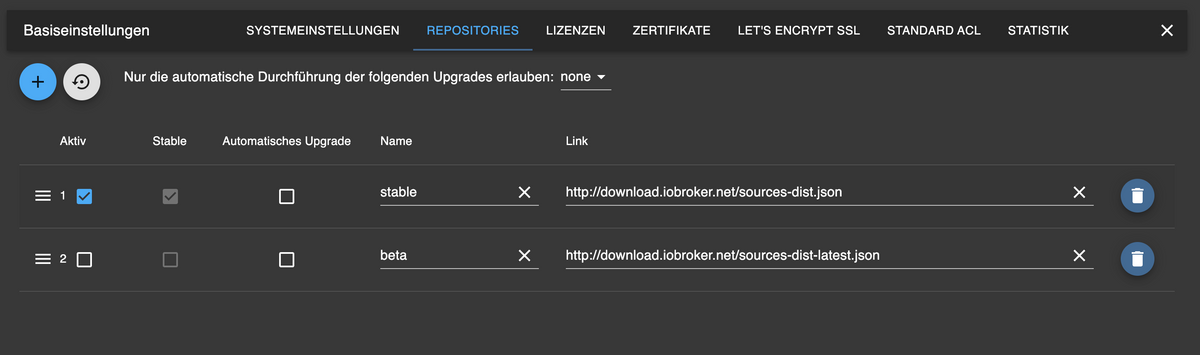

```
Copy text starting here:

======================= SUMMARY =======================
v.2024-05-22

Static hostname: IobrokerHauptinstallation
Icon name: computer-container
Chassis: container
Virtualization: lxc
Operating System: Ubuntu 22.04.4 LTS
Kernel: Linux 6.5.13-3-pve
Architecture: x86-64

Installation: lxc
Kernel: x86_64
Userland: 64 bit
Timezone: Europe/Berlin (CEST, +0200)
User-ID: 0
Display-Server: false
Boot Target: graphical.target

Pending OS-Updates: 51
Error: Object "system.repositories" not found
Pending iob updates: 0

Nodejs-Installation:
/usr/bin/nodejs v18.20.2
/usr/bin/node v18.20.2
/usr/bin/npm 10.5.0
/usr/bin/npx 10.5.0
/usr/bin/corepack 0.25.2

Recommended versions are nodejs 18.20.4 and npm 10.7.0
Your nodejs installation is correct

MEMORY:
               total        used        free      shared  buff/cache   available
Mem:             11G        3.8G        6.7G        0.0K        994M        7.7G
Swap:           8.2G          0B        8.2G
Total:           19G        3.8G         14G

Active iob-Instances: 54
List is empty

ioBroker Core: js-controller 5.0.19
               admin 6.17.14

ioBroker Status: iobroker is running on this host.

Objects type: jsonl
States type: jsonl

Status admin and web instance:
+ system.adapter.admin.0 : admin : IobrokerHauptinstallation - enabled, port: 8081, bind: 0.0.0.0, run as: admin
+ system.adapter.web.0 : web : IobrokerHauptinstallation - enabled, port: 8082, bind: 0.0.0.0, run as: admin

Objects: 25318
States: 21417

Size of iob-Database:
18M /opt/iobroker/iobroker-data/objects.jsonl
9.5M /opt/iobroker/iobroker-data/states.jsonl

*********************************************************************
Some problems detected, please run iob fix and try to have them fixed
*********************************************************************

=================== END OF SUMMARY ====================
=== Mark text until here for copying ===
```

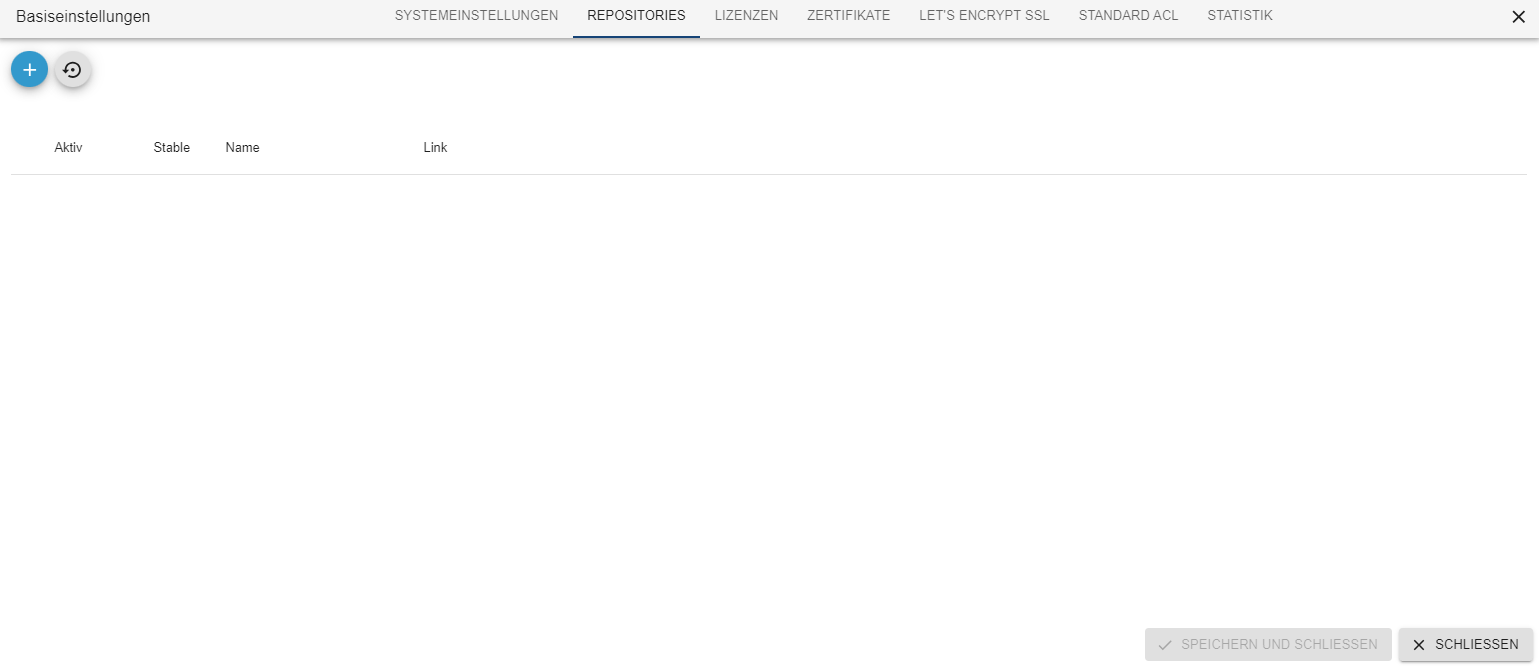
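The summary reports 51 pending OS updates and a missing `system.repositories` object, which are the two points the advice below picks up. Here is a minimal Python sketch for pulling those fields out of a pasted `iob diag` summary. The regular expressions are assumptions matched against the text in this thread only, not against a documented output format. Because they use `re.search`/`re.findall` on the whole text, they also work on a paste whose line breaks were lost.

```python
import re


def diag_findings(summary: str) -> dict:
    """Extract a few fields from an `iob diag` SUMMARY paste (patterns are assumptions)."""
    os_updates = re.search(r"Pending OS-Updates:\s*(\d+)", summary)
    iob_updates = re.search(r"Pending iob updates:\s*(\d+)", summary)
    return {
        "pending_os_updates": int(os_updates.group(1)) if os_updates else None,
        "pending_iob_updates": int(iob_updates.group(1)) if iob_updates else None,
        # e.g. 'Error: Object "system.repositories" not found' -> ["system.repositories"]
        "missing_objects": re.findall(r'Object "([^"]+)" not found', summary),
        # Footer line the diag script prints when it found something to fix
        "problems_detected": "Some problems detected" in summary,
    }
```

Usage: `diag_findings(open("diag.txt").read())`, where `diag.txt` is a hypothetical file holding the copied summary. Missing fields come back as `None` rather than raising, so a partial paste still gives a result.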

Hmm, install your 51 pending updates and make sure your repo is reachable.
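For the second point, here is a minimal Python sketch that only tests whether a repository URL answers over HTTP from the machine it runs on, for example inside the ioBroker container. The URL is an assumption (the commonly used ioBroker stable source list). Check which repository your installation is actually configured for, especially since the diag summary above reports the `system.repositories` object as missing.

```python
import http.client
import urllib.request

# Assumption: ioBroker "stable" source list; replace with the repository your host uses.
STABLE_REPO = "http://download.iobroker.net/sources-dist.json"


def is_reachable(url: str, timeout: float = 10.0) -> bool:
    """Return True if a GET on `url` completes with a 2xx status within `timeout` seconds.

    DNS failures, refused connections, timeouts, HTTP error statuses, malformed
    responses and malformed URLs all count as "not reachable".
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (OSError, http.client.HTTPException, ValueError):
        # URLError, HTTPError and socket timeouts are OSError subclasses;
        # ValueError covers strings that are not URLs at all.
        return False
```

Usage: `print(is_reachable(STABLE_REPO))`. A `False` here only says the URL did not answer from this machine. It does not distinguish a DNS problem from a gateway or firewall issue, so run it both inside the container and on the Proxmox host to compare.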

-


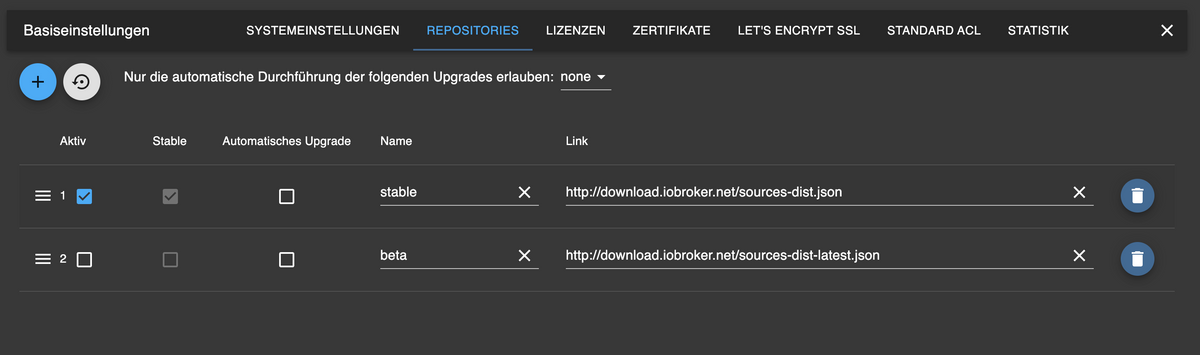

Why is that empty? Did you let your Monk tidy up? :D

-


Why is that empty? Did you let your Monk tidy up? :D